# Tensorflow

# Simple Linear Regression.

# Minimal Example

- Import the relevant libraries

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

2

3

- Generate random input data to train on

observations = 1000

xs = np.random.uniform(low=-10, high=10, size=(observations, 1))

zs = np.random.uniform(-10,10,(observations, 1))

inputs = np.column_stack((xs, zs))

print(inputs.shape)

print(inputs)

2

3

4

5

6

7

8

Output is a matrix of size (1000,2)

(1000, 2)

[[ 7.44651066 3.0441044 ]

[ 3.18741031 -6.10663328]

[ 7.47234553 6.86829353]

...

[ 4.3408767 2.59859389]

[ 5.96692549 -1.95235124]

[ 6.43664934 -8.52279315]]

2

3

4

5

6

7

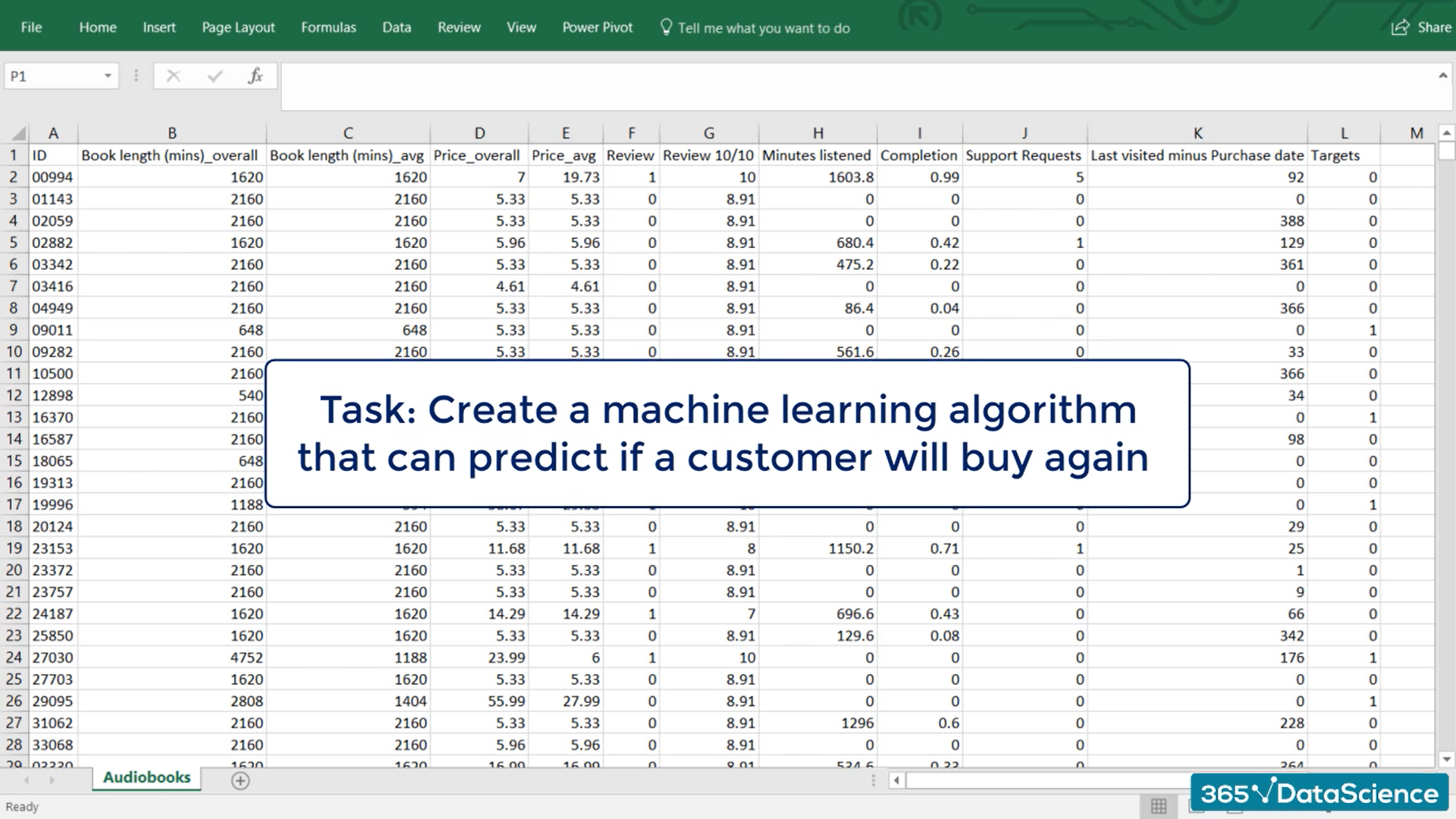

| Elements of the model in supervised learning | Status |

|---|---|

| inputs | done |

| weights | Computer |

| biases | Computer |

| outputs | Computer |

| targets | to do |

Targets =

Where 2 is the first weight 3 is the second weight and 5 is the baias.

The noise is introduced to randomize the data.

- Create the targets we will aim at

noise = np.random.uniform(-1, 1, (observations, 1))

targets = 2*xs - 3*zs + 5 + noise

# the targets are a linear combination of two vectors 1000x1

# a scalar and noise 1000x1, their shape should be 1000x1

print(targets.shape)

2

3

4

5

6

7

8

Output the shape of the targets, a matrix 1000x1

(1000, 1)

- Plot the training data

# In order to use the 3D plot, the objects should have a certain shape, so we reshape the targets.

# The proper method to use is reshape and takes as arguments the dimensions in which we want to fit the object.

targets = targets.reshape(observations,)

# Plotting according to the conventional matplotlib.pyplot syntax

# Declare the figure

fig = plt.figure()

# A method allowing us to create the 3D plot

ax = fig.add_subplot(111, projection='3d')

# Choose the axes.

ax.plot(xs, zs, targets)

# Set labels

ax.set_xlabel('xs')

ax.set_ylabel('zs')

ax.set_zlabel('Targets')

# You can fiddle with the azim parameter to plot the data from different angles. Just change the value of azim=100

# to azim = 0 ; azim = 200, or whatever. Check and see what happens.

ax.view_init(azim=100)

# So far we were just describing the plot. This method actually shows the plot.

plt.show()

# We reshape the targets back to the shape that they were in before plotting.

# This reshaping is a side-effect of the 3D plot. Sorry for that.

targets = targets.reshape(observations,1)

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

Clean code

targets = targets.reshape(observations,)

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.plot(xs, zs, targets)

ax.set_xlabel('xs')

ax.set_ylabel('zs')

ax.set_zlabel('Targets')

ax.view_init(azim=100)

plt.show()

targets = targets.reshape(observations, 1)

2

3

4

5

6

7

8

9

10

- Create weights

# our initial weights and biases will be picked randomly from

# the interval minus 0.1 to 0.1.

init_range = 0.1

#The size of the weights matrix is two by one as we

# have two variables so there are two weights one for#

#each input variable and a single output.

weights = np.random.uniform(-init_range, init_range, size=(2, 1))

print(f'Weights: {weights}')

2

3

4

5

6

7

8

9

- Create Biases

#Let's declare the bias and illogically the appropriate shape is one by one.

#So the bias is a scalar in machine learning.

#There are many biases as there are outputs.

#Each bias refers to an output.

biases = np.random.uniform(-init_range, init_range, size=1)

print(f'Biases: {biases}')

2

3

4

5

6

7

Weights: [[-0.07021836]

[ 0.00626743]]

Biases: [-0.01464248]

2

3

- Set a learning rate

learning_rate = 0.02

So we are all set. We have inputs targets and arbitrary numbers for weights and biases. What is left is to vary the weights and biases so our outputs are closest to the targets as we know by now. The problem boils down to minimizing the loss function with respect to the weights and the biases. And because this is a regression we'll use one half the L2 norm loss function.

Next let's make our model learn.

Since this is an iterative problem. We must create a loop which will apply our update rule and calculate the last function.

I'll use a for loop with 100 iterations to complete this task. Let's see the game plan will follow:

At each iteration We will calculate the outputs

and compare them to the targets through the last function.

We will print the last for each iteration so we know how the algorithm is doing.

Finally we will adjust the weights and biases to get a better fit of the data.

At the next iteration these updated weights and biases will provide different outputs.

Then the procedure will be repeated.

Now the dot product of the input times the weights is 1000 by two times to buy one. So a 1000 by 1 matrix when we add the bias which is a scalar. Python adds the element wise. This means it is added to each element of the output matrix.

for i in range(100):

outputs = np.dot(inputs, weights) + biases

2

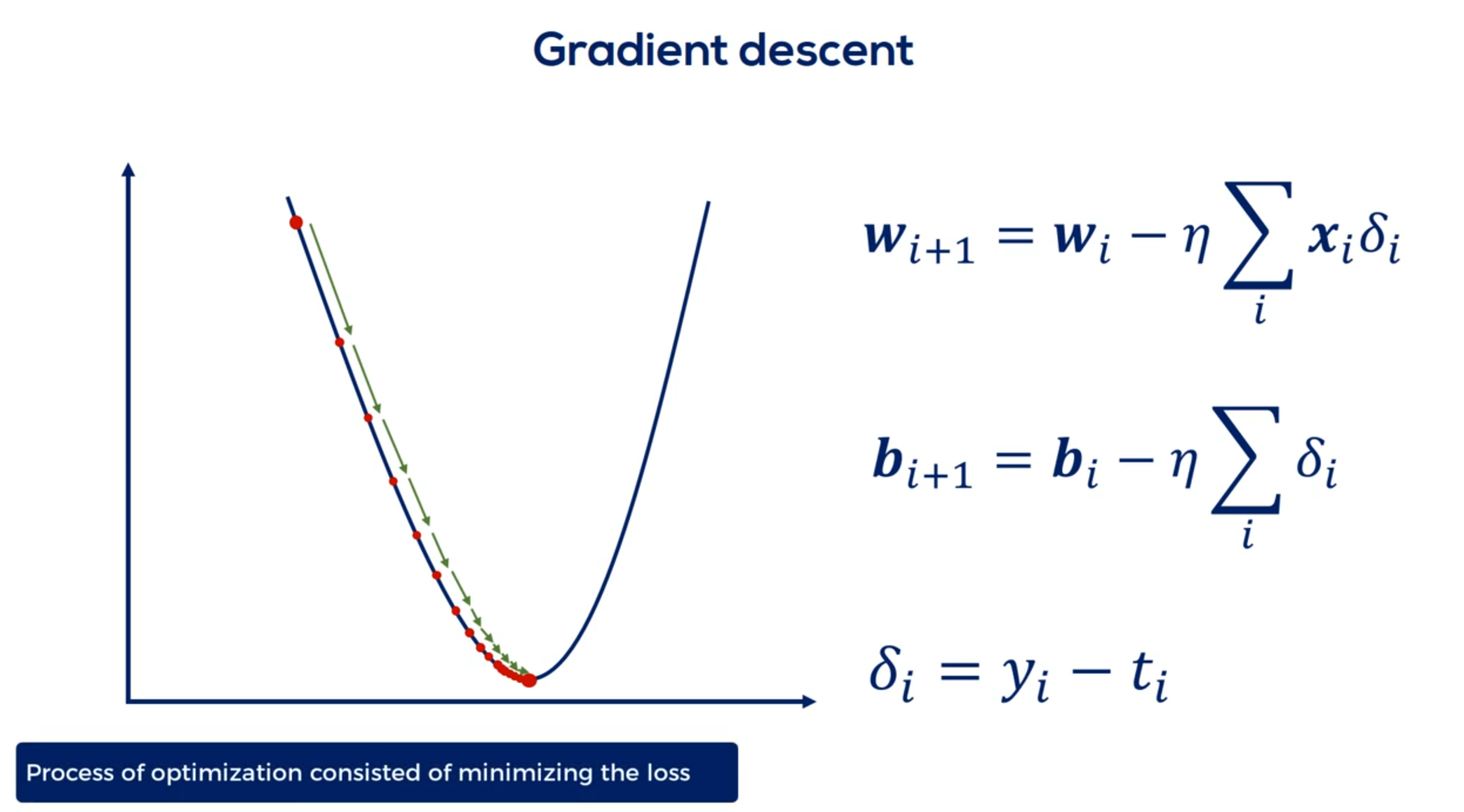

OK for simplicity let's declare a variable called deltas which will record the difference between the outputs and the targets. We already introduce such variable in the gradient descent lecture deltas equals outputs minus targets. That's useful as it is a part of the update rule. Then we must calculate the loss.

deltas = outputs - targets

L2-norm loss formula:

We said we will use half the L2 norm loss. Python actually speaking deltas is a 1000 by one array. We are interested in the sum of its terms squared. Following the formula for the L2 norm loss there is a num PI method called sum which will allow us to sum all the values in the array the L2 norm requires these values to be squared. So the code looks like this. And P does some of Delta squared. We then divide the whole expression by two to get the elegant update rules from the gradient descent. Let's further augment the loss by dividing it by the number of observations we have. This would give us the average loss per observation or the mean loss. Similarily to the division by 2. This does not change the logic of the last function. It is still lower than some more accurate results that will be obtained. This little improvement makes the learning independent of the number of observations instead of adjusting the learning rate. We adjust the loss that that's valuable as the same learning rate should give us similar results for both 1000 and 1 million observations.

loss = np.sum(deltas ** 2) / 2 / observations

We'll print the last we've obtained each step. That's done as we want to keep an eye on whether it is decreasing as iterations are performed. If it is decreasing our machine learning algorithm functions well.

print(loss)

Finally we must update the weights and biases so they are ready for the next iteration using the same rescaling trick. I'll also reskill the deltas. This is yet another way to make the algorithm more universal. So the new variable is deltas underscored skilled and equals deltas divided by observations.

deltas_scaled = deltas / observations

Let's update the weights. We will follow the gradient descent logic.

The new weights are equal to the old weights minus the learning rate times the dot product of the inputs and the Deltas underscored scaled. The shape of the weights is two by one the shape of the inputs is one thousand by two and that of the Delta skilled is one thousand by one. Obviously we cannot simply multiply the inputs and the deltas. This is an issue that may arise occasionally due to the linear algebra involved to fix it. We must transpose the inputs matrix using the object but the method. Now the major C's are compatible. By 1000 times 1000 by one is equal to 2 by 1.

weights = weights - learning_rate * np.dot(inputs.T, deltas_scaled)

TIP

Often when dealing with matrices you find the correct way to code it through dimensionality checks and compatability errors.

However transposing major C's doesn't affect the information they hold so we can do it freely.

All right let's update the biases. The new biases are equal to the old biases minus the learning rate times the sum of the deltas as explained in the gradient descent lecture.

biases = biases - learning_rate * np.sum(deltas_scaled)

This is the entire algorithm. Let's recap what it does:

- first it calculates the outputs forgiven weights and biases.

- Second it calculates a loss function that compares the outputs to the targets.

- Third it prints the loss. So we can later analyze it and

- forth, We update the weights and the bias is following the gradient descent methodology.

Let's run the code. What we get is a list of numbers that appears to be in descending order right. These are the values of our average last function. It started from a high value and at each iteration it became lower and lower until it reached a point where it almost stopped changing. This means we have minimized or almost minimize the loss function with respect to the weights and biases. Therefore we have found a linear function that fits the model Well

113.1346113499832

108.21425084240616

103.88888353315217

99.7849517016046

95.84949440054764

92.0702726846745

88.4404105995388

84.95392016265656

81.60512775430881

78.38859366840362

75.29909427907384

72.33161242080419

69.4813290972211

66.74361563721006

64.11402617596718

61.58829043492039

59.162306787044116

56.83213559608488

54.59399281885322

52.444243860188884

50.3793976706198

48.39610107712963

46.491133337827655

44.66140091167794

42.90393243479416

41.21587389514219

39.594483997814095

38.03712971334737

36.54128200186008

35.104511706058084

33.72448560644502

32.39896263232881

31.125790222471856

29.902900829474543

28.728308562215652

27.600105960897142

26.516460899456188

25.475613610314117

24.475873826630895

23.515618037423987

22.593286851094458

21.707382463078588

20.856466223512808

20.03915630096189

19.25412543841651

18.500098797916074

17.77585189029656

17.080208586701485

16.41203920862674

15.77025869339785

15.153824832100188

14.561736577101016

13.993032416414623

13.446788812270727

12.922118701350518

12.418170054254773

11.934124491864695

11.469195956348635

11.022629434656377

10.59369973242813

10.181710296327049

9.785992082882911

9.40590247200998

9.040824223434672

8.690164474338356

8.353353776587563

8.029845171988013

7.719113304060869

7.42065356489872

7.133981275715881

6.858630899762206

6.594155286322381

6.34012494457287

6.096127346117317

5.861766255067884

5.636661084584468

5.420446278826976

5.212770719316917

5.013297154744328

4.8217016532940695

4.637673076602049

4.460912574487216

4.291133099638719

4.128058941470141

3.9714252783838333

3.8209777477182216

3.676472032679767

3.537673465588702

3.4043566467943345

3.276305078640973

3.153310813890127

3.0351741180280087

2.921703144909945

2.812713625215003

2.7080285672048383

2.607477969300881

2.5108985440130636

2.4181334527717953

2.3290320512325318

2.2434496446393806

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

The weights and the biases are optimize. But so are the outputs. Since the optimization process has ended. We can check these values here. We observe the values from the last iteration of the for loop. The one that gave us the lowest last function in the memory of the computer the weights biases and outputs variables are optimized as of now. Congratulations you learn how to create your first machine learning algorithm.

Still let's spend an extra minute on that. I'd like to print the weights and the bias's the weights seem about right. The bias is close to five as we wanted but not really. That's because we use too few iterations or an inappropriate learning rate. Let's rerun the code for the loop. This will continue optimizing the algorithm for another hundred iterations. We can see the bias improves when we increase the number of iterations. We strongly encourage you to play around with the code and find the optimal number of iterations for the problem. Try different values for observations learning rate number of iterations maybe even initial range for initializing the weights and biases cool.

print(f'Weights: {weights}')

print(f'Biases: {biases}')

2

Targets =

Weights: [[ 1.9962384 ]

[-3.00212515]]

Biases: [5.28171043]

2

3

Weights: [[ 1.99842328]

[-3.00330109]]

Biases: [5.01471378]

2

3

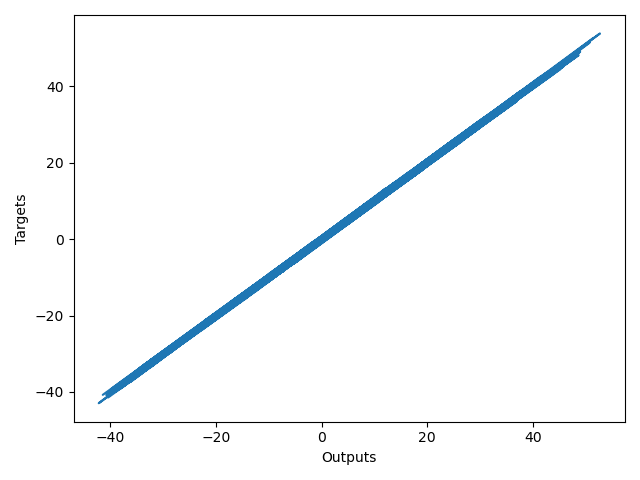

Finally I'd like to show you the plot of the output at the last iteration against the targets. The closer this plot is to a 45 degree line the closer the outputs are to the targets. Obviously our model worked like a charm.

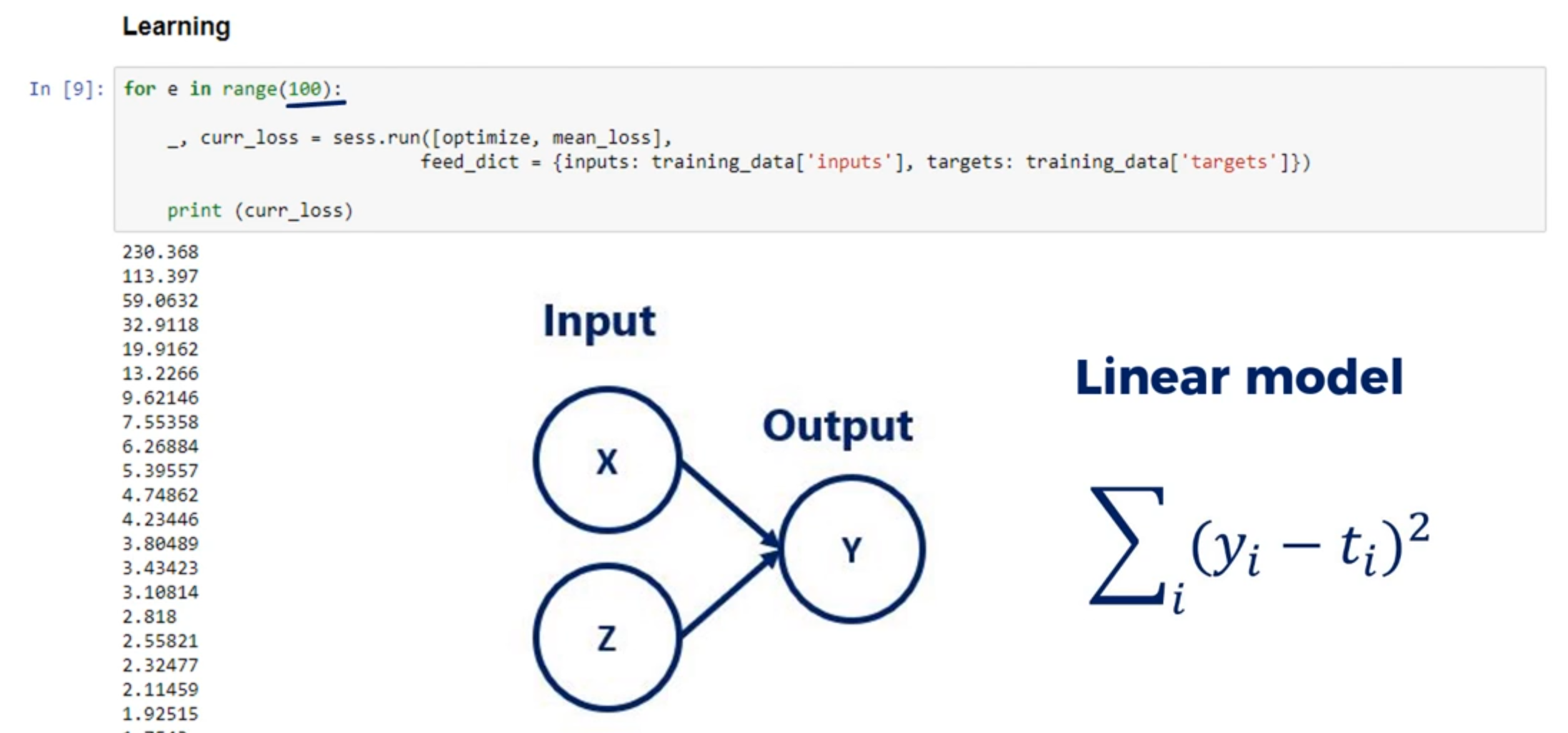

# Solving the simple example using TensorFlow

#%%

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

#%%

# 2. Data generation

observations = 1000

xs = np.random.uniform(low=-10, high=10, size=(observations, 1))

zs = np.random.uniform(-10, 10, (observations, 1))

generated_inputs = np.column_stack((xs, zs))

noise = np.random.uniform(-1, 1, (observations, 1))

generated_targets = 2*xs - 3*zs + 5 + noise

np.savez('TF_intro', inputs=generated_inputs, targets=generated_targets)

#%%

# 3. Solving with TensorFlow

training_data = np.load('TF_intro.npz')

input_size = 2

output_size = 1

# tf.keras.Sequential() function that specifies how the model

# will be laid down ('stack layers')

# Linear combination + Output = Layer*

# The tf.keras.layers.Dense(output size)

# takes the inputs provided to the model

# and calculates the dot product of the inputs and weights and adds the bias

# It would be the output = np.dot(inputs, weights) + bias

model = tf.keras.Sequential([

tf.keras.layers.Dense(output_size)

])

# model.compile(optimizer, loss) configures the model for training

# https://www.tensorflow.org/api_docs/python/tf/keras/optimizers

# L2-norm loss = Least sum of squares (least sum of squared error)

# scaling by #observations = average (mean)

model.compile(optimizer='sgd', loss='mean_squared_error')

# What we've got left is to indicate to the model which data to fit

# modelf.fit(inouts, targets) fits (trains) the model.

# Epoch = iteration over the full dataset

model.fit(training_data['inputs'], training_data['targets'], epochs=100, verbose=2)

#%%

# 4. Extract the weights and bias

model.layers[0].get_weights()

weights = model.layers[0].get_weights()[0]

print(f'weights: {weights}')

biases = model.layers[0].get_weights()[1]

print(f'biases: {biases}')

#%%

# 5. Extract the outputs (make predictions)

# model.predict_on_batch(data) calculates the outputs given inputs

# these are the values that were compared to the targets to evaluate the loss function

print(model.predict_on_batch(training_data['inputs']).round(1))

print(training_data['targets'].round(1))

#%%

# 6. Plotting

# The line should be as close to 45 as possible

plt.plot(np.squeeze(model.predict_on_batch(training_data['inputs'])), np.squeeze(training_data['targets']))

plt.xlabel('Outputs')

plt.ylabel('Targets')

plt.show()

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

# Making the model closer to the Numpy example

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

#%%

# 2. Data generation

observations = 1000

xs = np.random.uniform(low=-10, high=10, size=(observations, 1))

zs = np.random.uniform(-10, 10, (observations, 1))

generated_inputs = np.column_stack((xs, zs))

noise = np.random.uniform(-1, 1, (observations, 1))

generated_targets = 2*xs - 3*zs + 5 + noise

np.savez('TF_intro', inputs=generated_inputs, targets=generated_targets)

#%%

# 3. Solving with TensorFlow

training_data = np.load('TF_intro.npz')

input_size = 2

output_size = 1

# tf.keras.Sequential() function that specifies how the model

# will be laid down ('stack layers')

# Linear combination + Output = Layer*

# The tf.keras.layers.Dense(output size)

# takes the inputs provided to the model

# and calculates the dot product of the inputs and weights and adds the bias

# It would be the output = np.dot(inputs, weights) + bias

# tf.keras.layers.Dense(output_size, kernel_initializer, bias_initializer)

# function that is laying down the model (used tp 'stack layers') and initialize weights

model = tf.keras.Sequential([

tf.keras.layers.Dense(output_size,

kernel_initializer=tf.random_uniform_initializer(minval=-0.1, maxval=0.1),

bias_initializer=tf.random_uniform_initializer(minval=-0.1, maxval=0.1))

])

custom_optimizer = tf.keras.optimizers.SGD(learning_rate=0.02)

# model.compile(optimizer, loss) configures the model for training

# https://www.tensorflow.org/api_docs/python/tf/keras/optimizers

# L2-norm loss = Least sum of squares (least sum of squared error)

# scaling by #observations = average (mean)

model.compile(optimizer=custom_optimizer, loss='mean_squared_error')

# What we've got left is to indicate to the model which data to fit

# modelf.fit(inouts, targets) fits (trains) the model.

# Epoch = iteration over the full dataset

model.fit(training_data['inputs'], training_data['targets'], epochs=100, verbose=2)

#%%

# 4. Extract the weights and bias

model.layers[0].get_weights()

weights = model.layers[0].get_weights()[0]

print(f'weights: {weights}')

biases = model.layers[0].get_weights()[1]

print(f'biases: {biases}')

#%%

# 5. Extract the outputs (make predictions)

# model.predict_on_batch(data) calculates the outputs given inputs

# these are the values that were compared to the targets to evaluate the loss function

print(model.predict_on_batch(training_data['inputs']).round(1))

print(training_data['targets'].round(1))

#%%

# 6. Plotting

# The line should be as close to 45 as possible

plt.plot(np.squeeze(model.predict_on_batch(training_data['inputs'])), np.squeeze(training_data['targets']))

plt.xlabel('Outputs')

plt.ylabel('Targets')

plt.show()

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

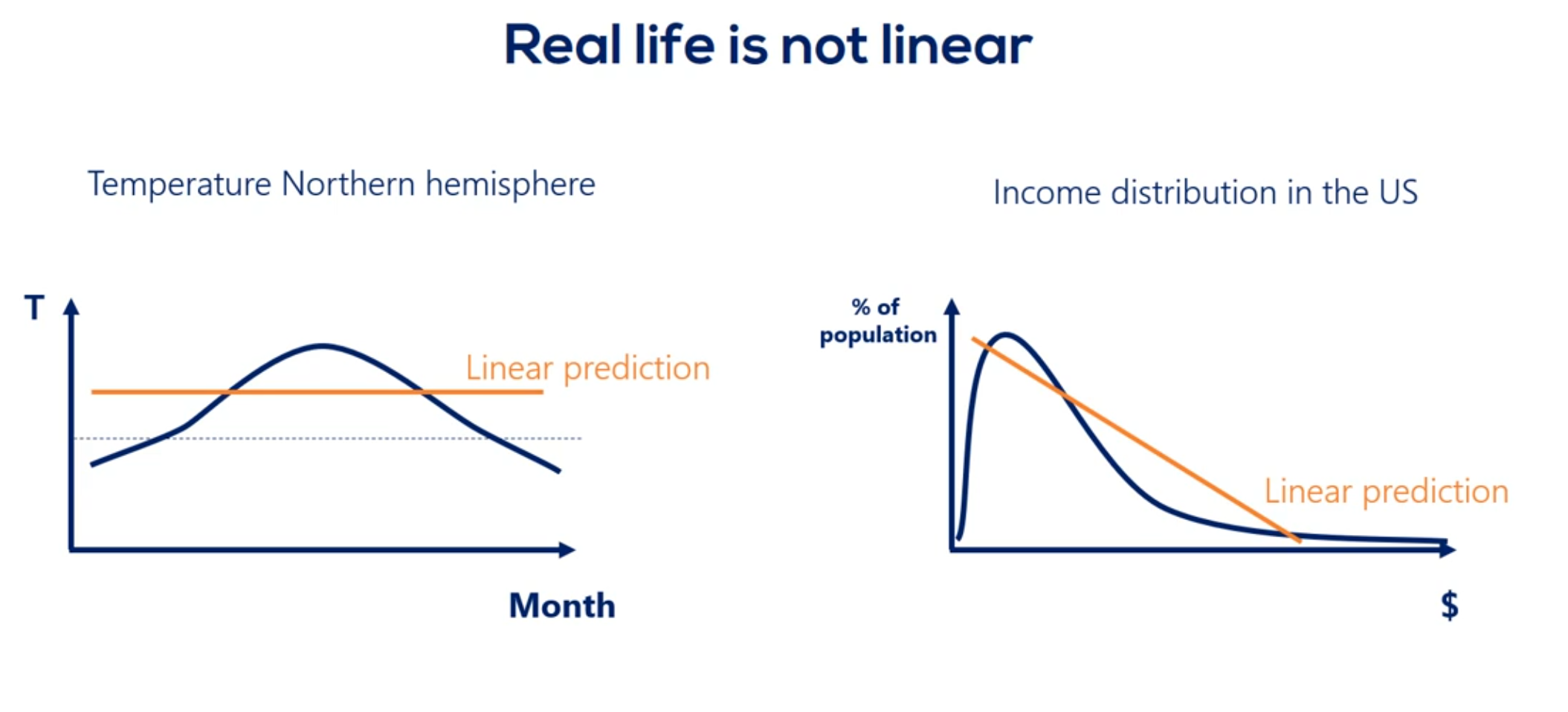

# Going deeper Introduction to deep neural networks

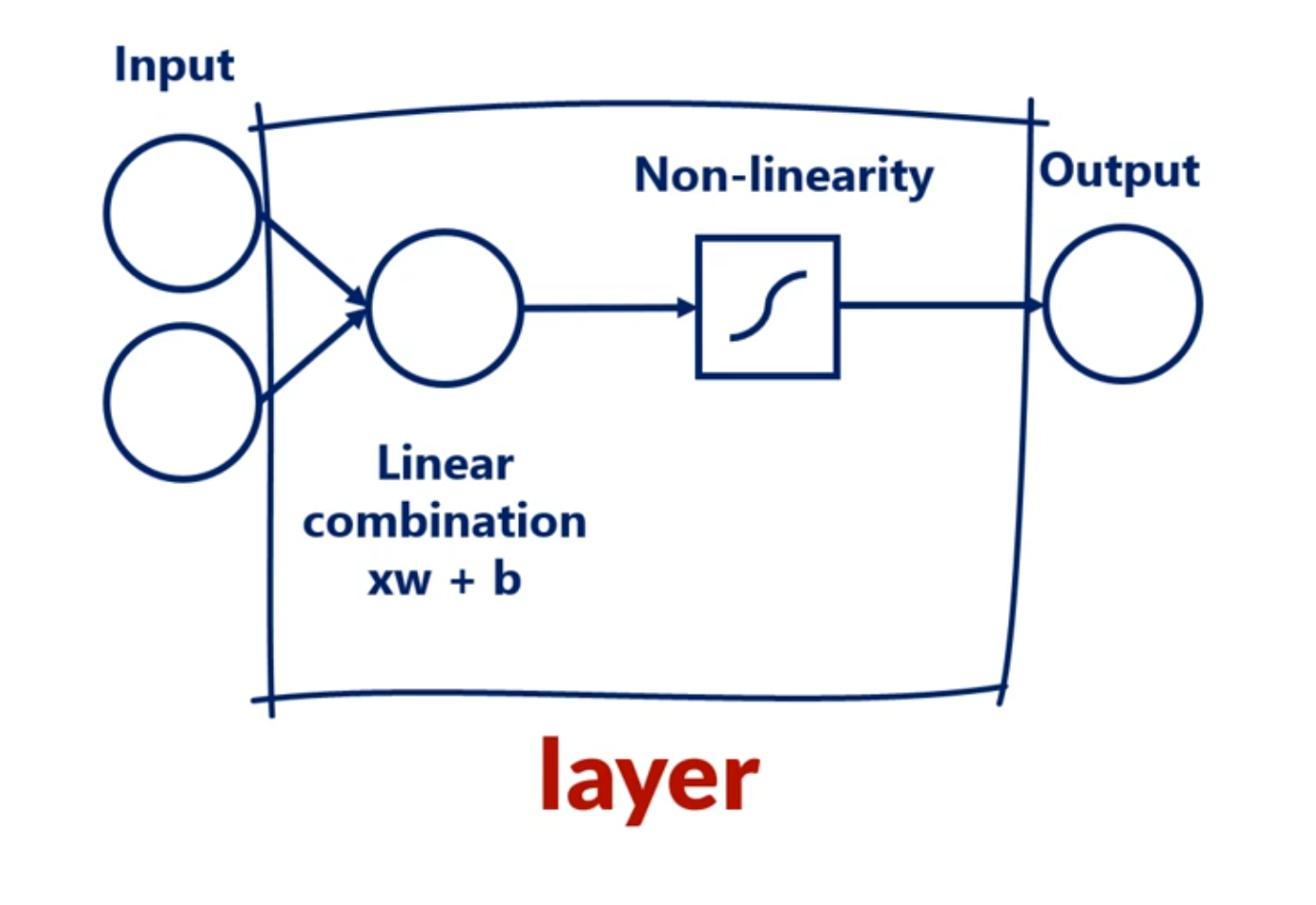

# Layers

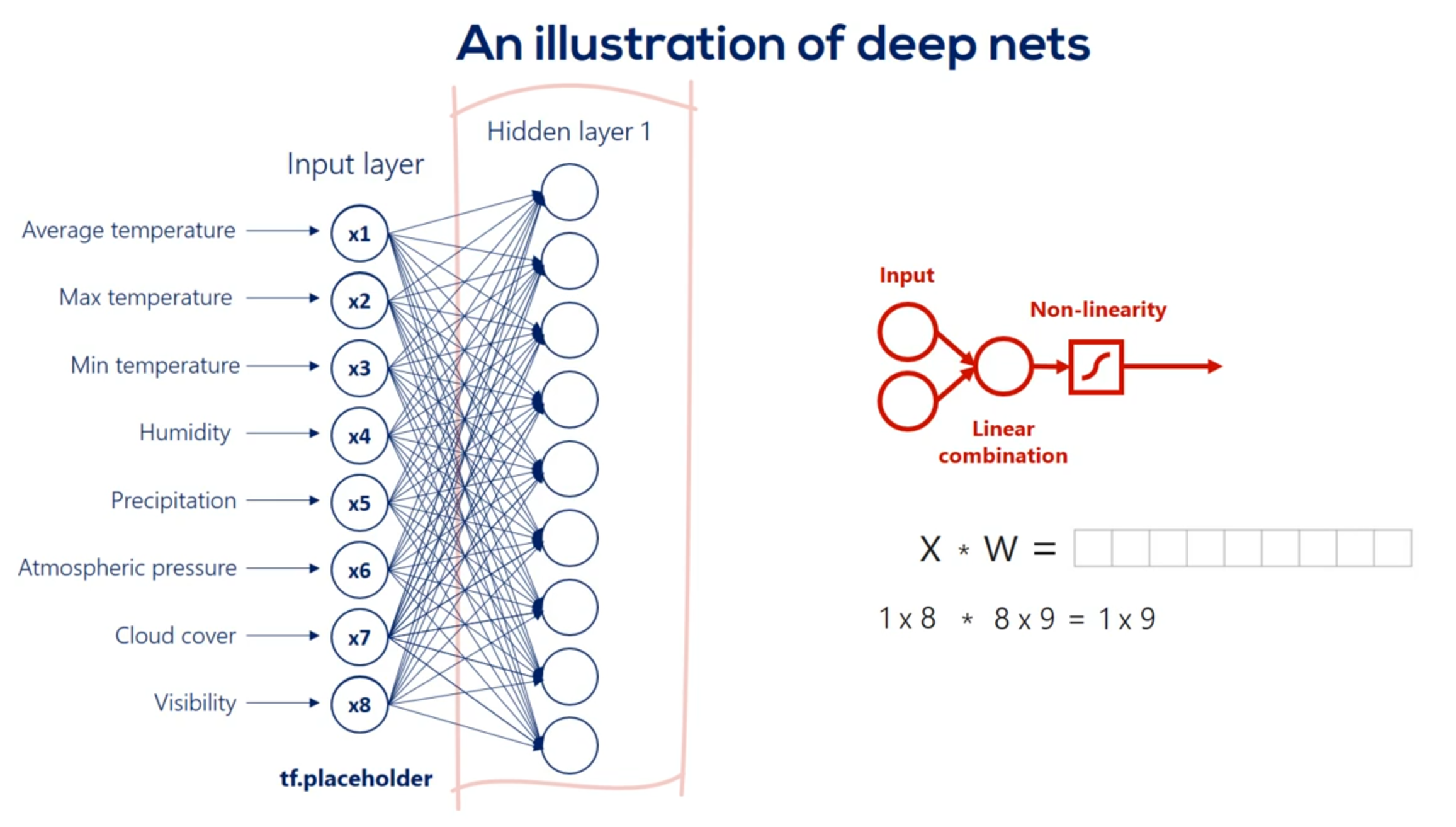

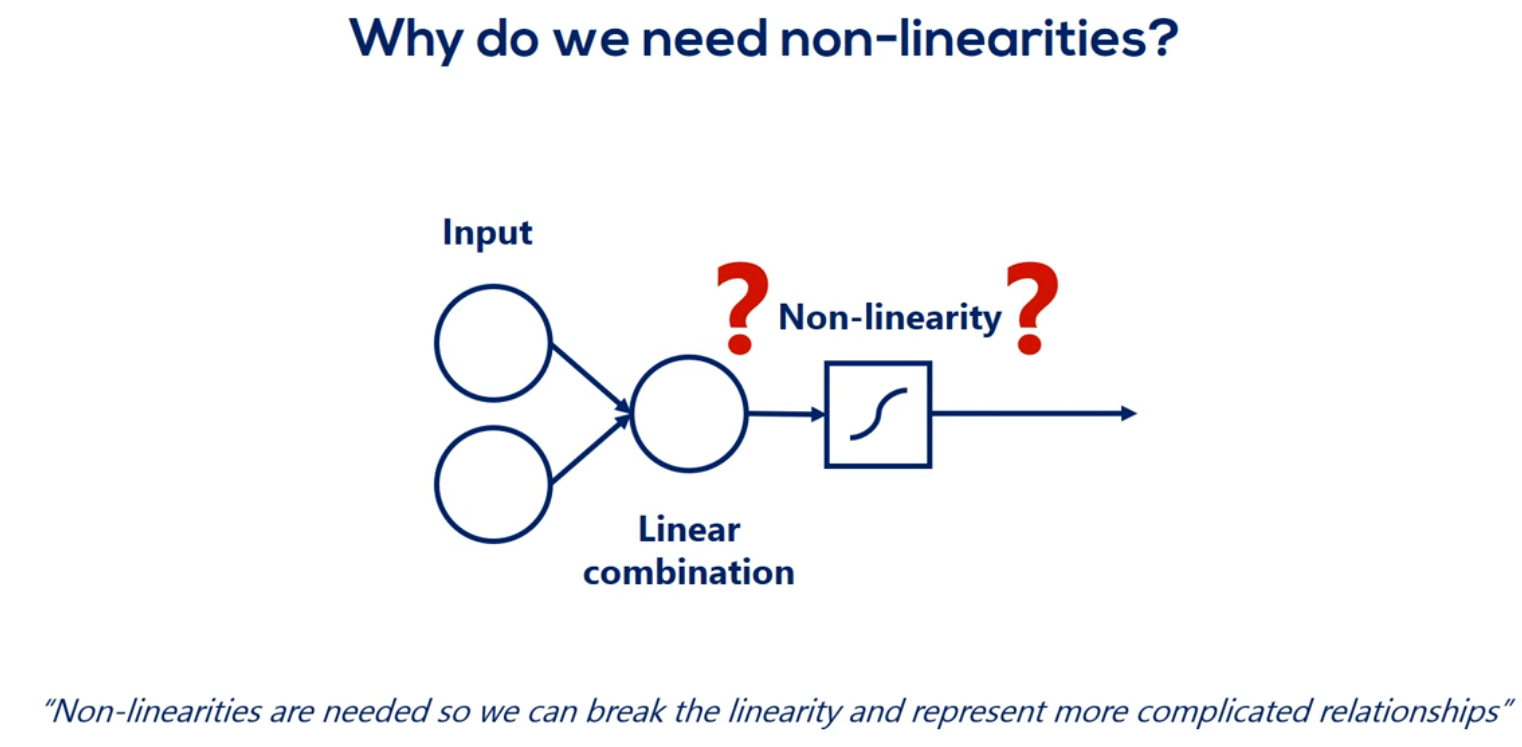

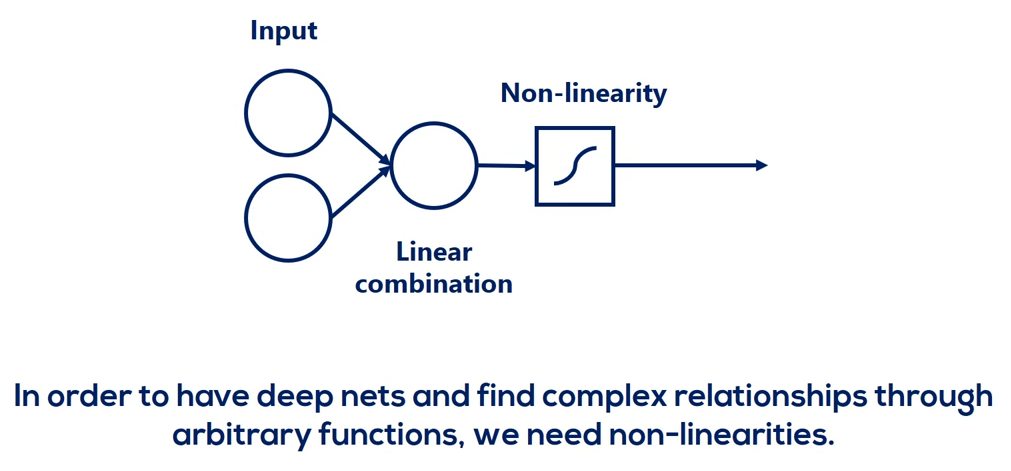

Mixing linear combinations and non-linearities allows us to model arbitrary functions.

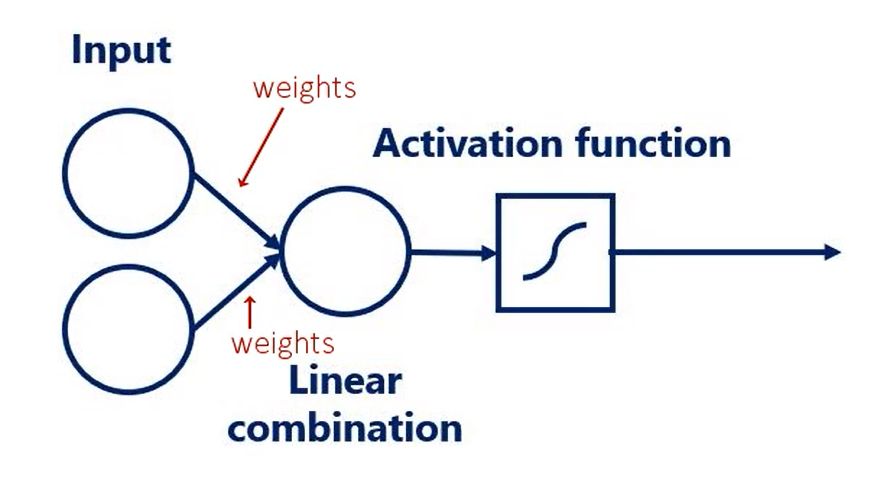

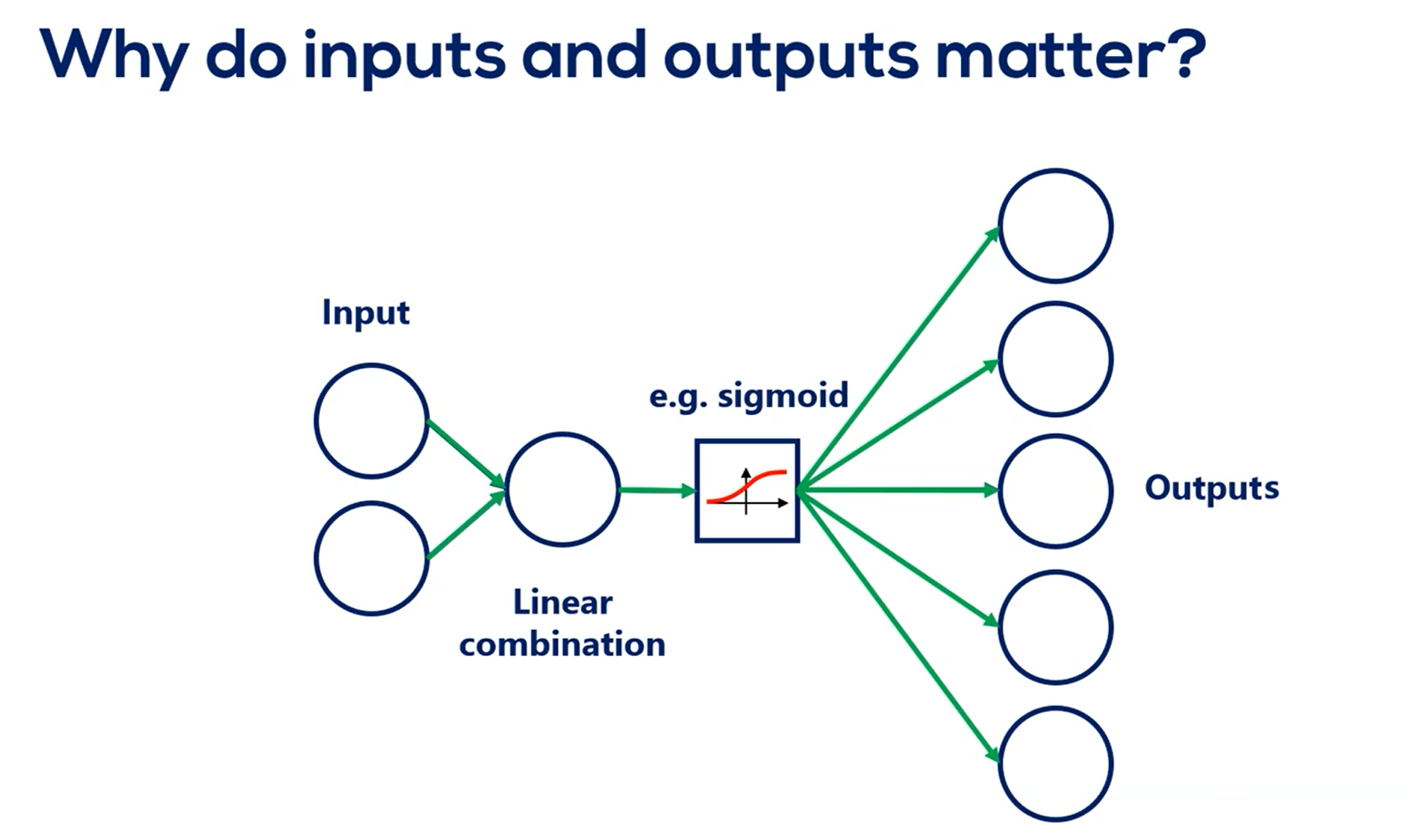

The layer is the building block of neural networks. The initial linear combinations and the added non-linearity form a layer.

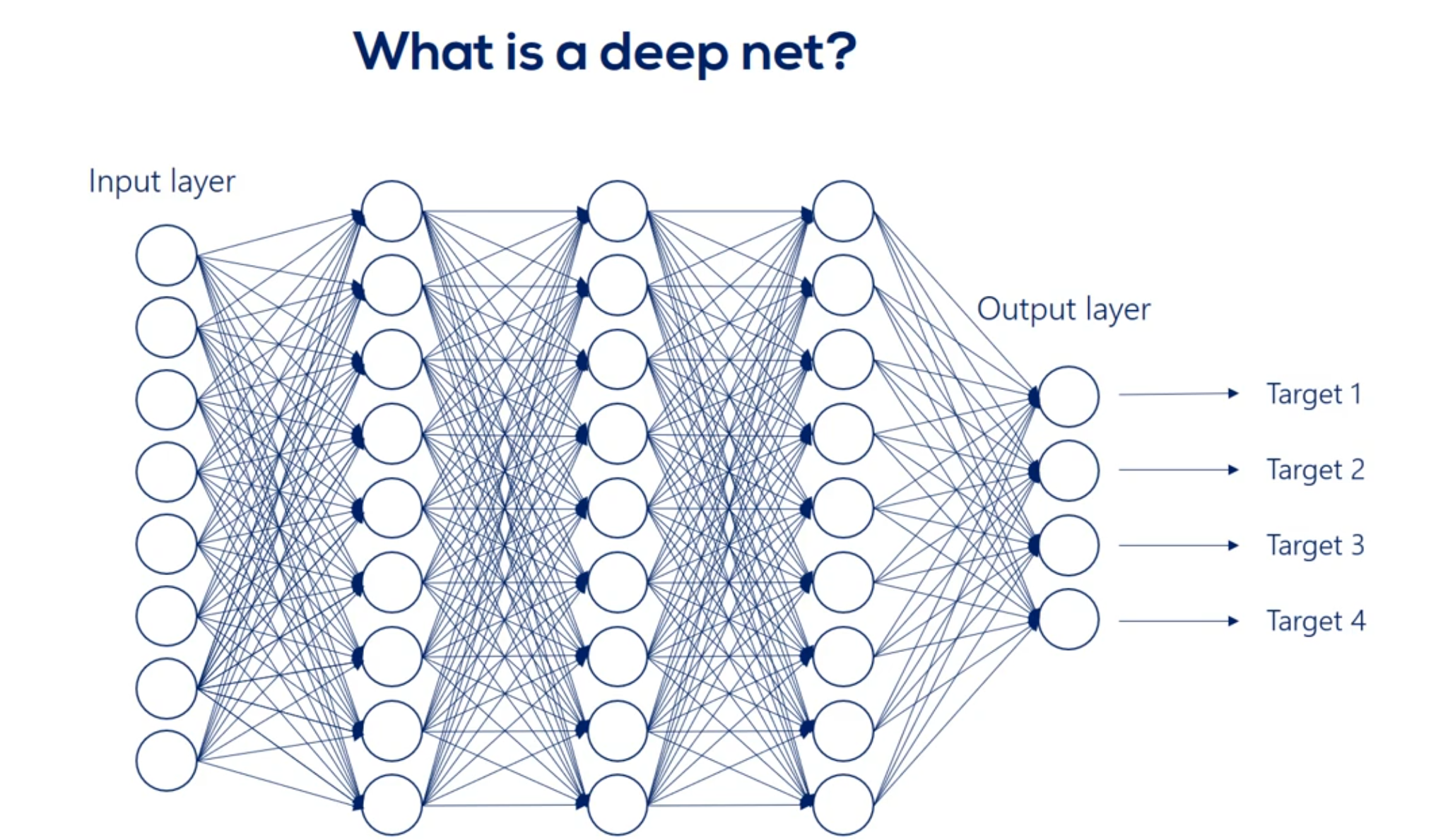

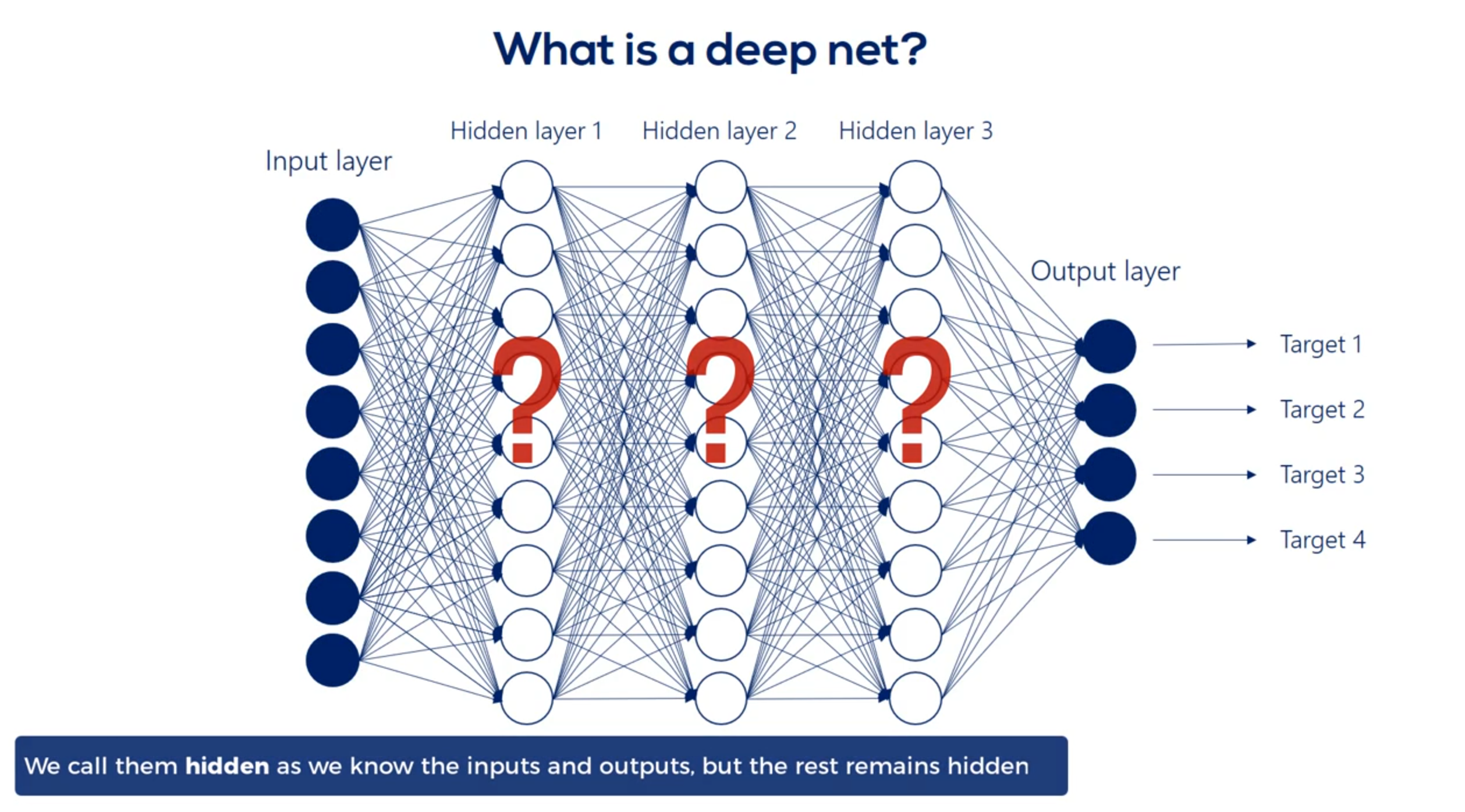

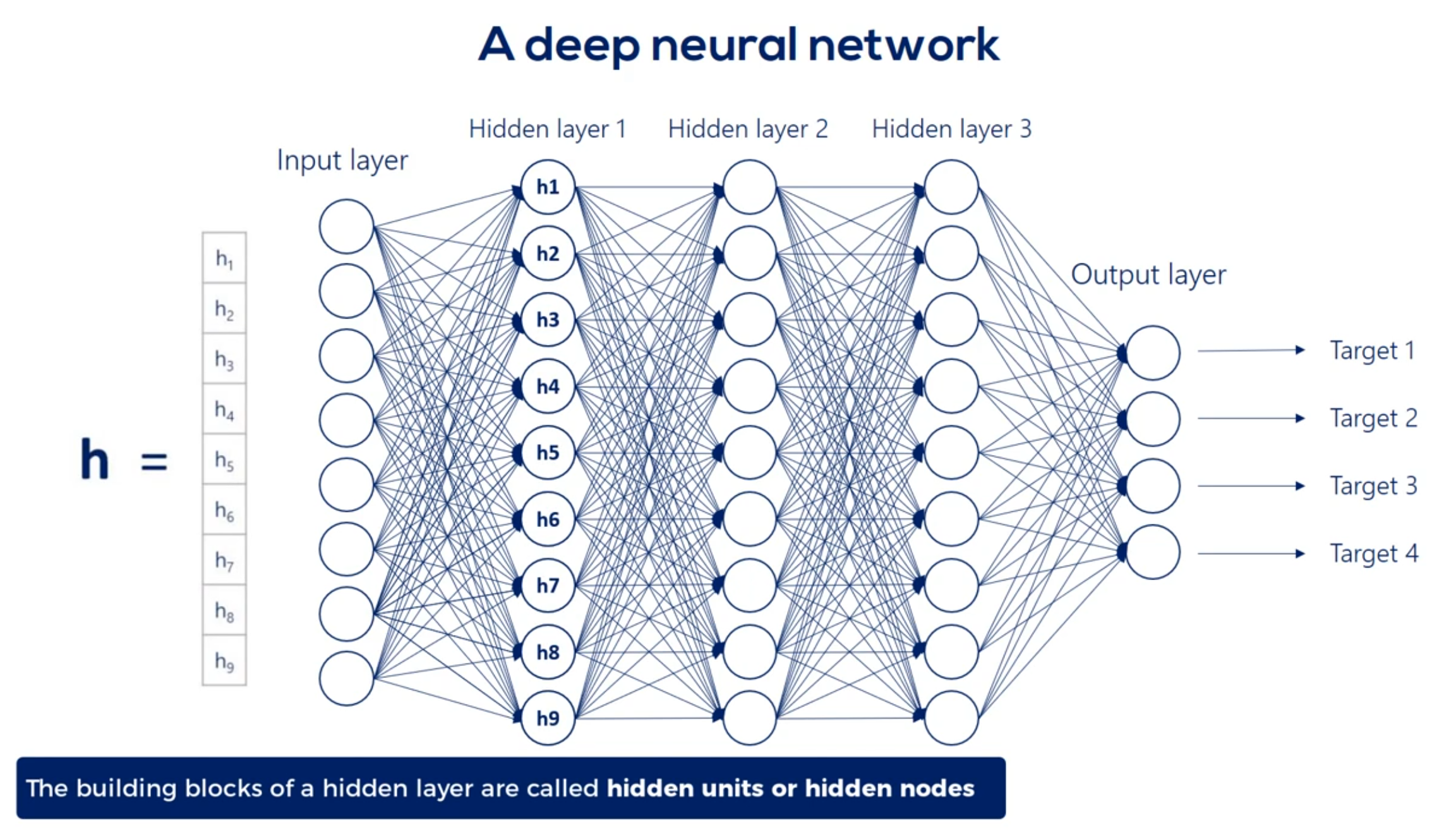

# What's Deep Net?

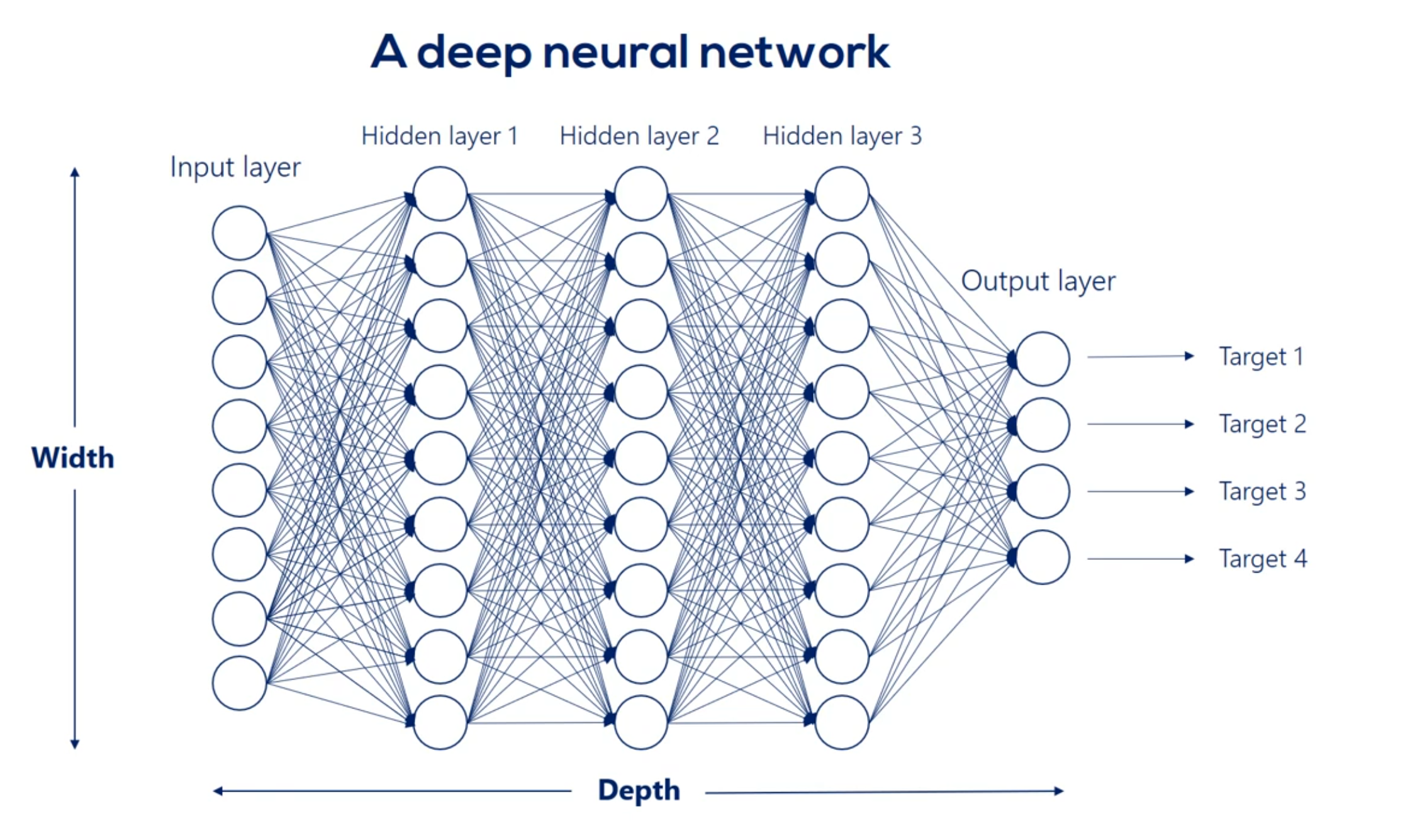

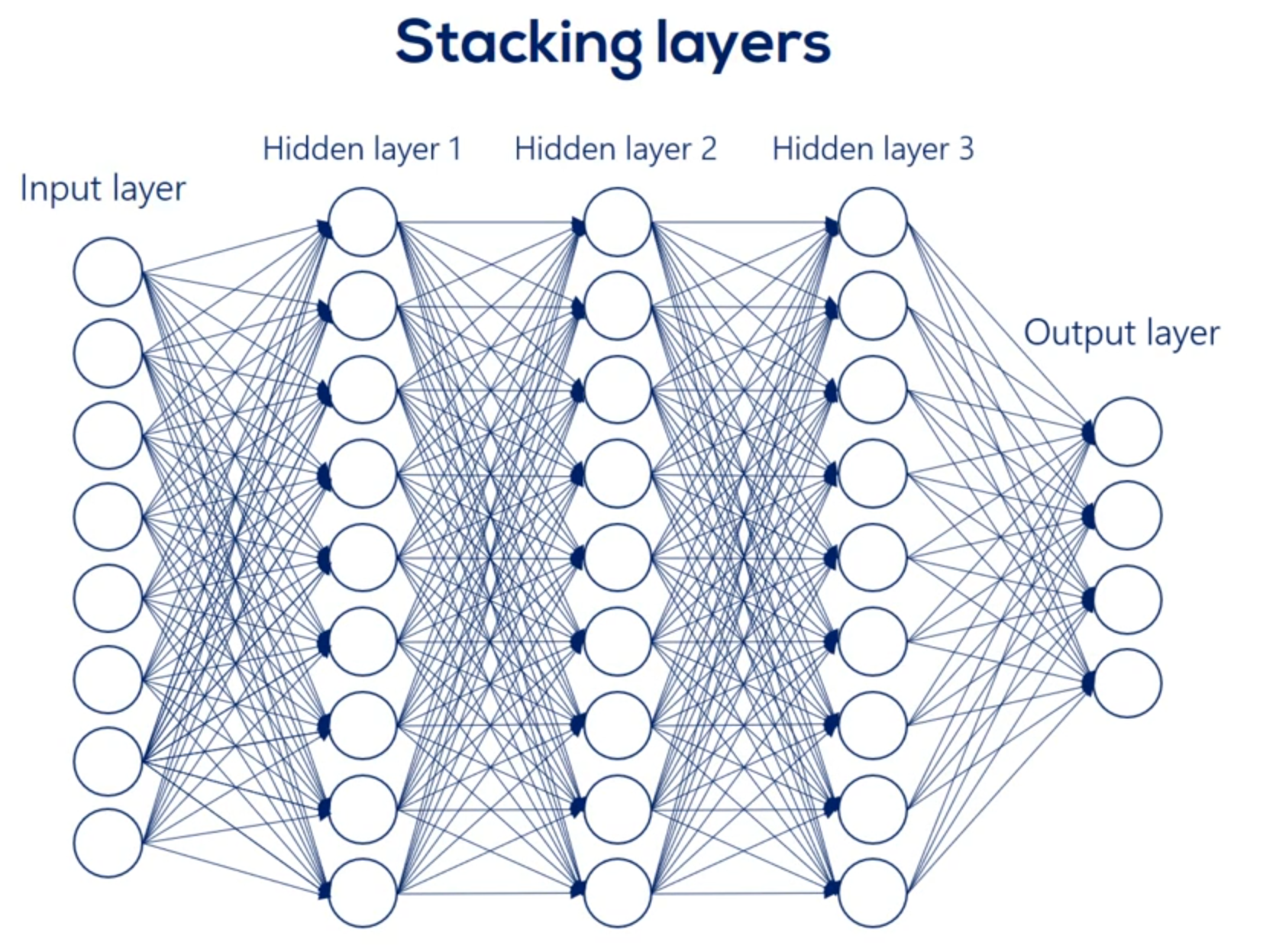

When we have more then one layer, we are talking about neural network.

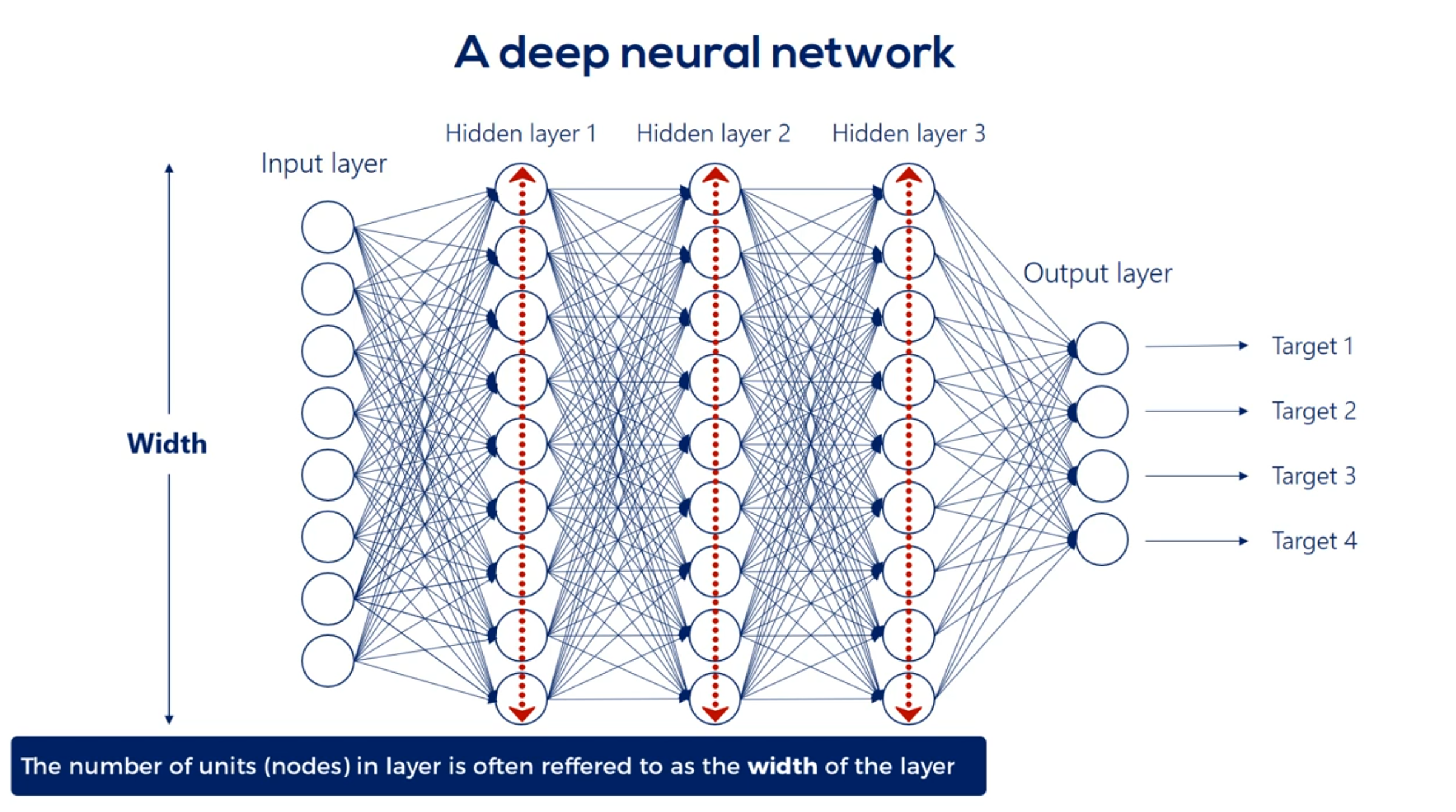

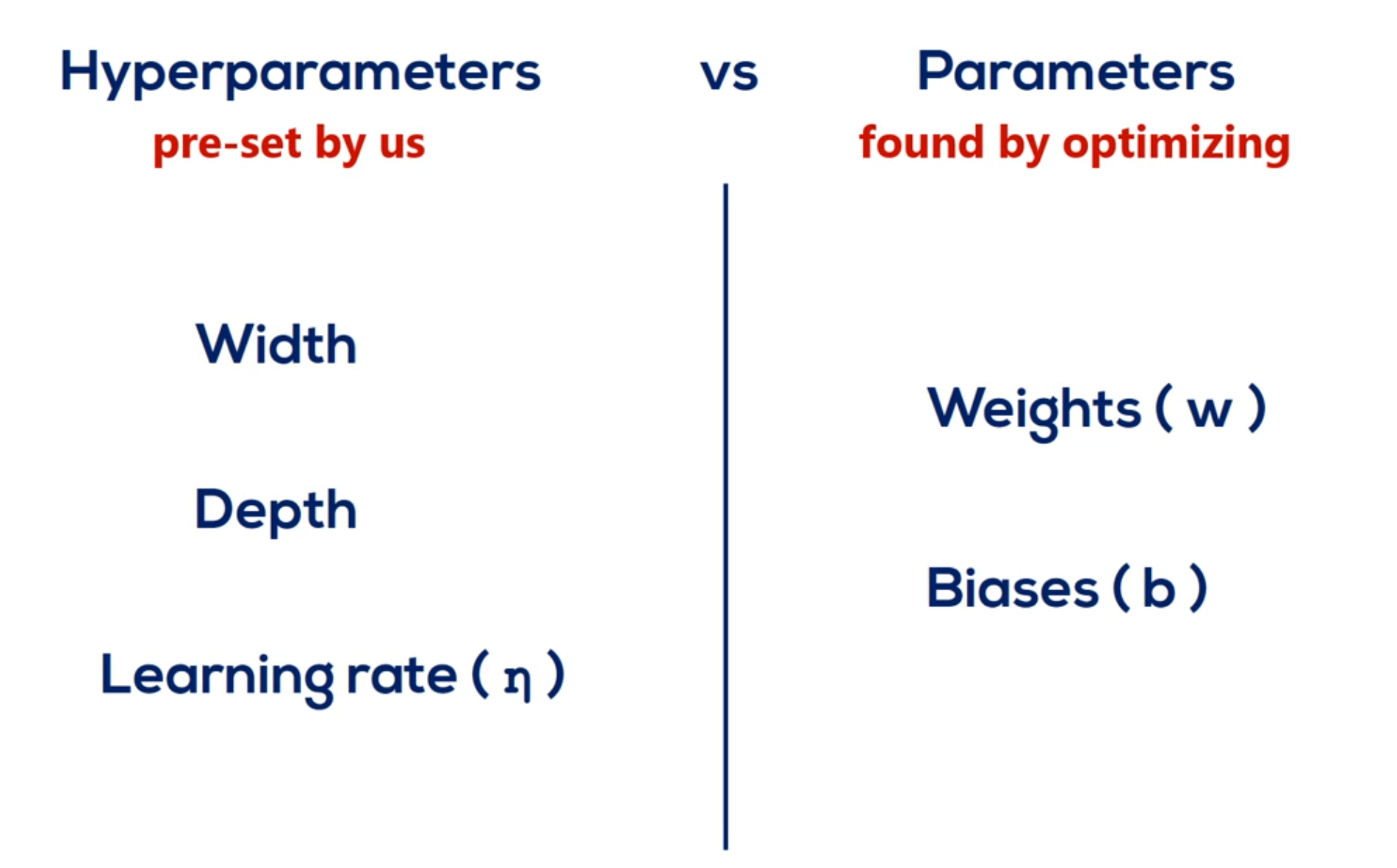

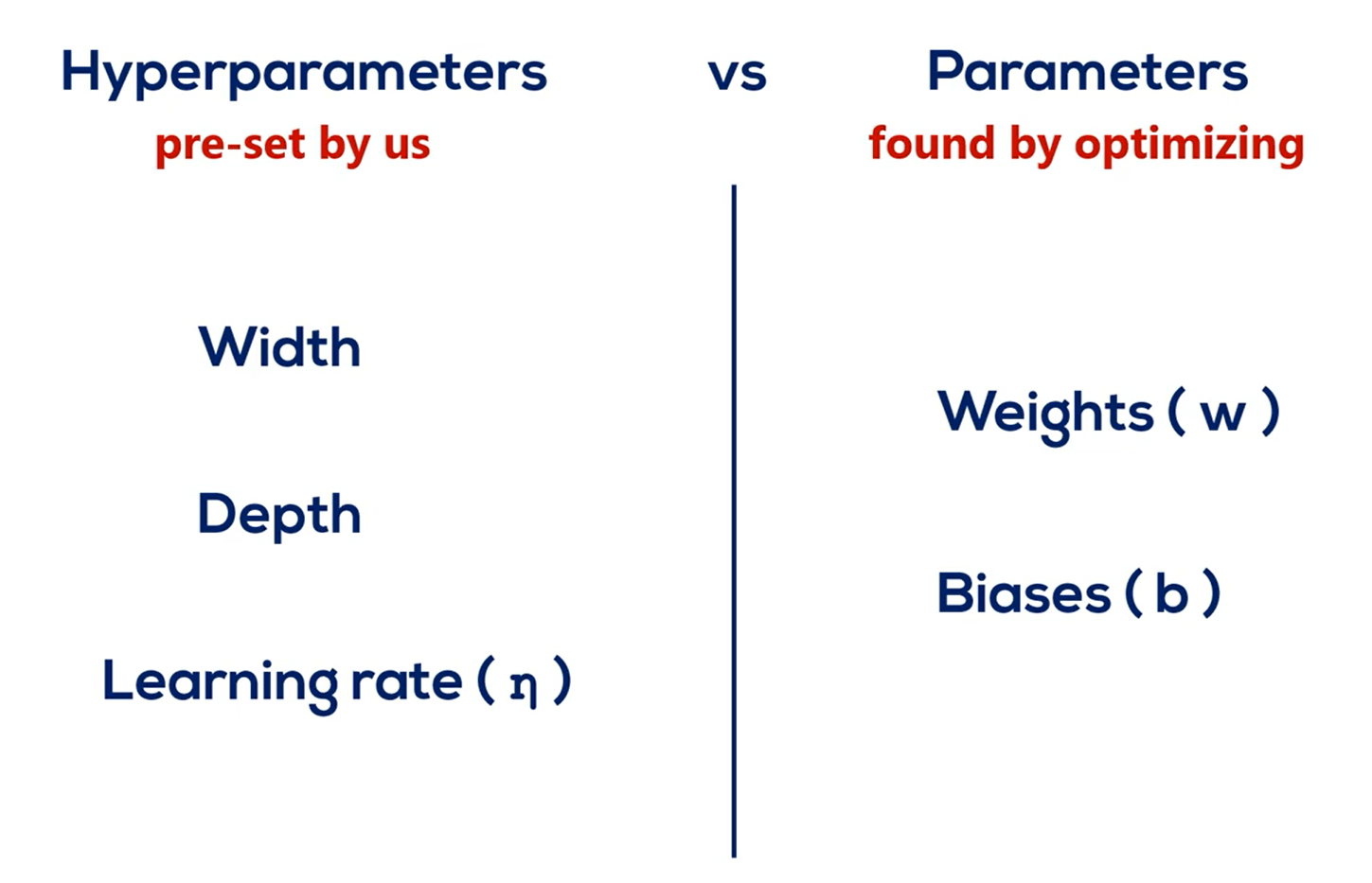

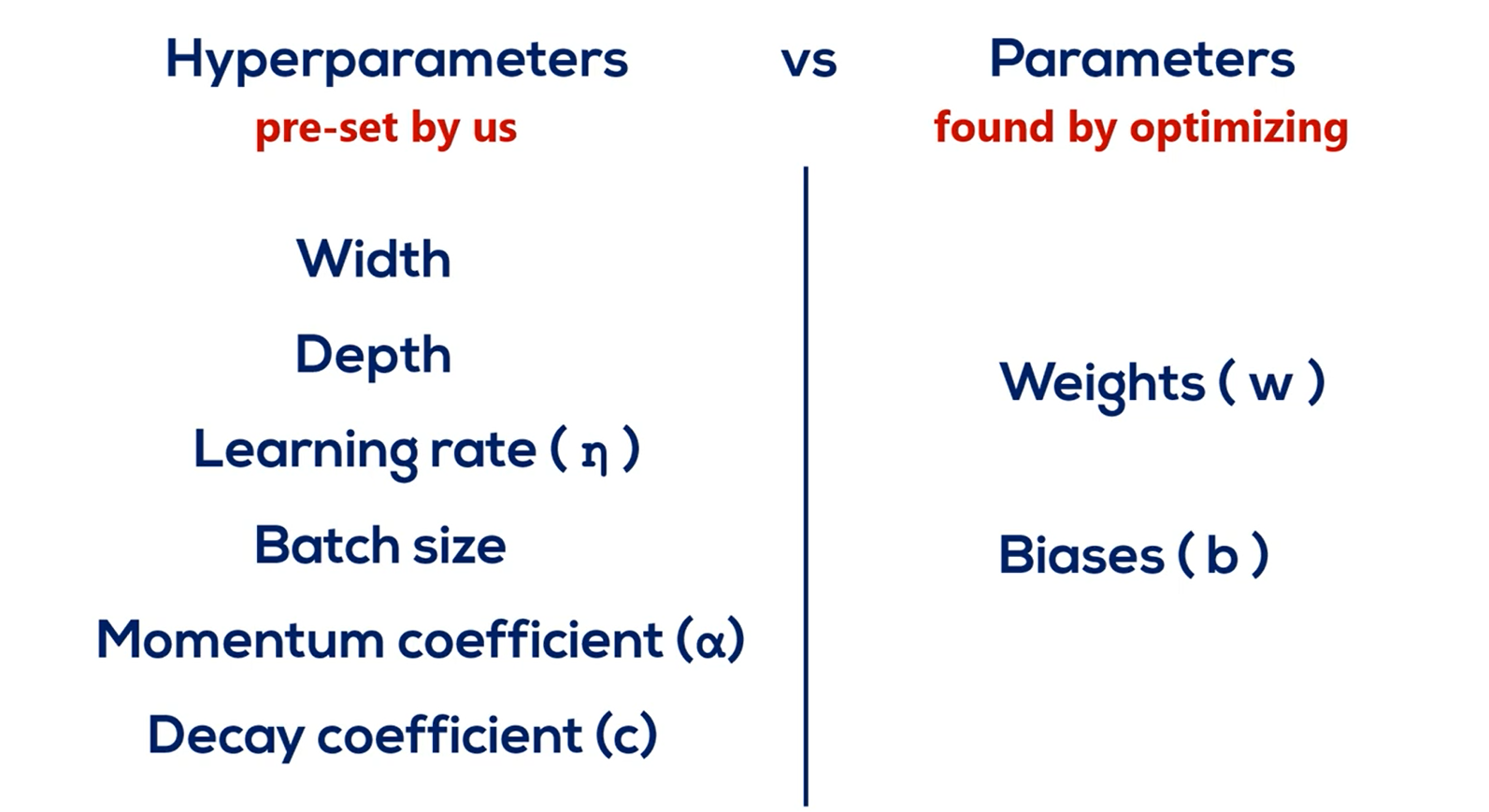

We refer to the width and depth (but not only) as hyperparameters

Each arrow represents the mathematical transformation of a certain value

So a certain way is applied then a non-linearity is added know that the non-linearity doesn't change the shape of the expression it only changes its linearity.

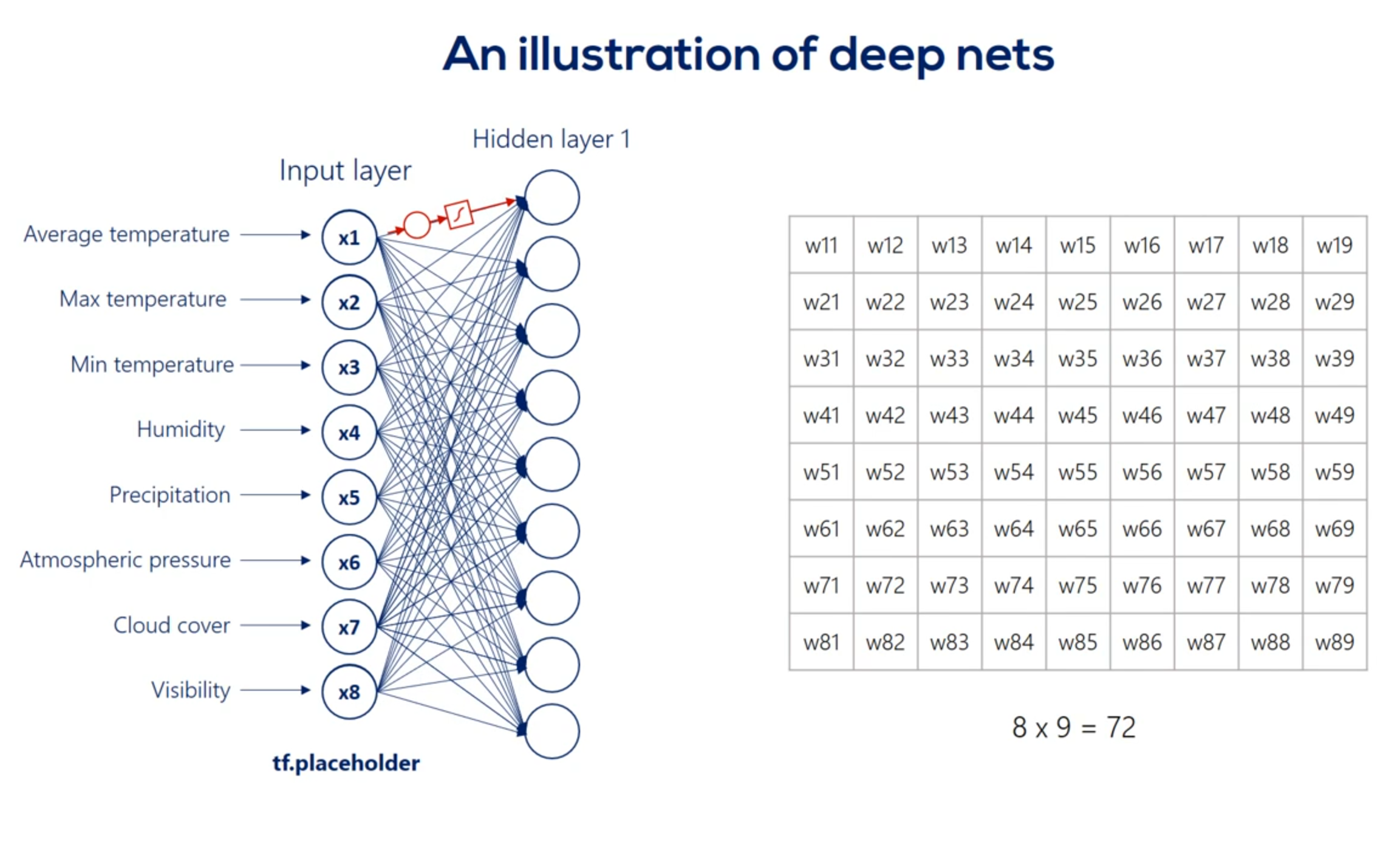

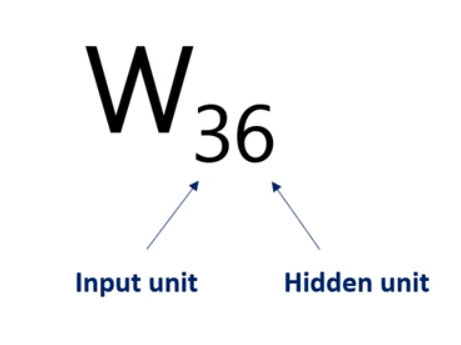

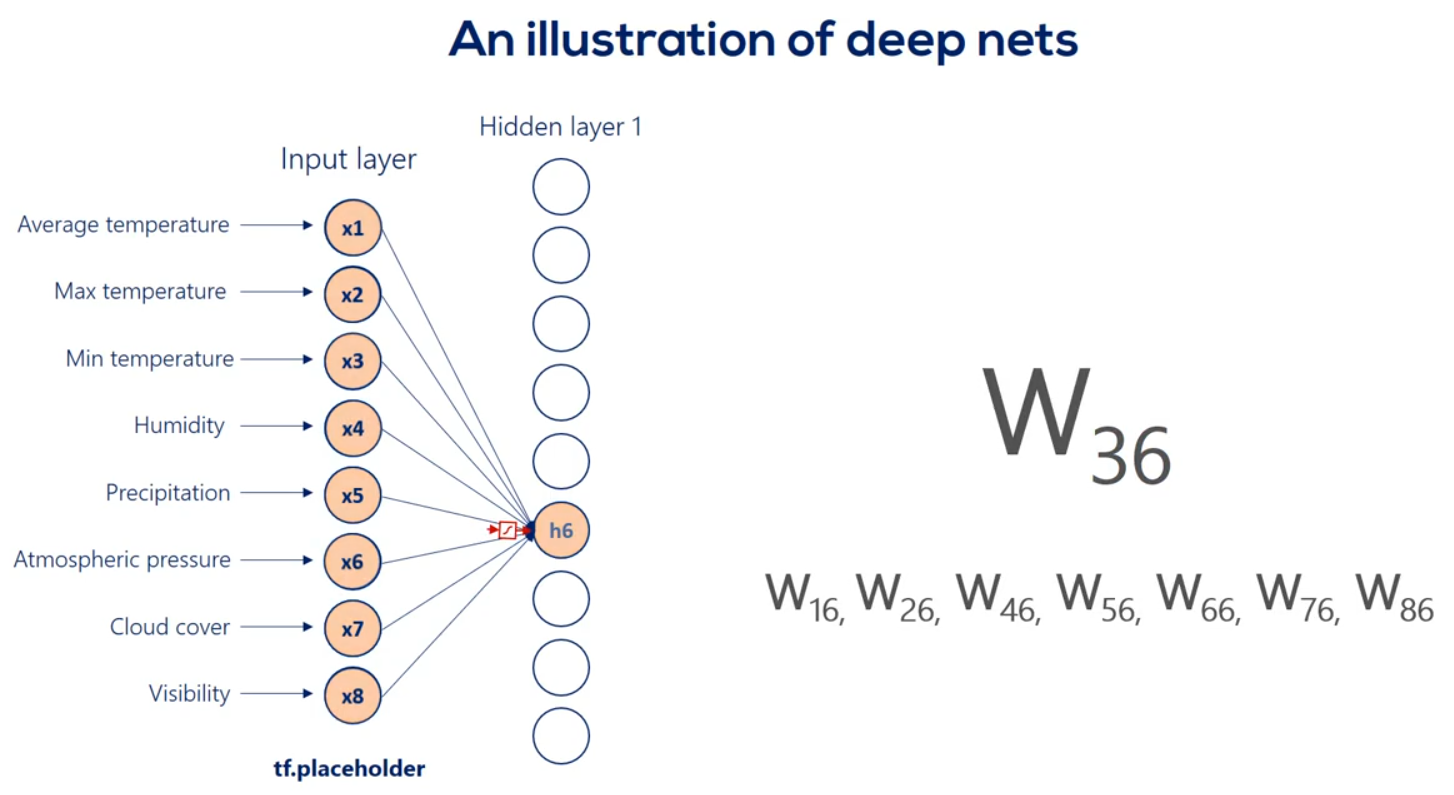

For example weight 3 6 is applied to the third input and is involved in calculating the 6th hidden unit in the same way, Weights 1 6 2 6 and so on until 8 6 all participate in computing the sixth hit and unit. They are linearly combined. And then nonlinearity is added in order to produce the sixth hidden unit in the same way we get each of the other hidden units.

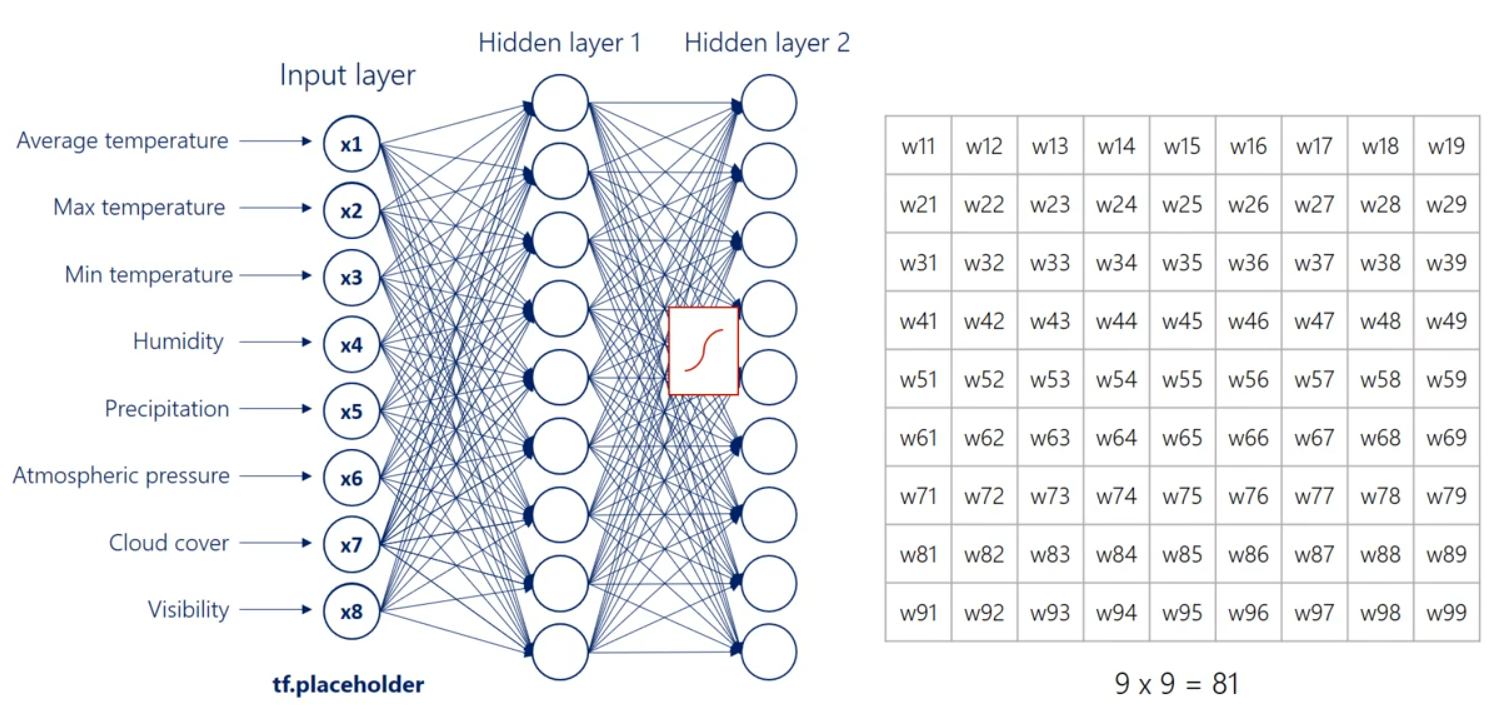

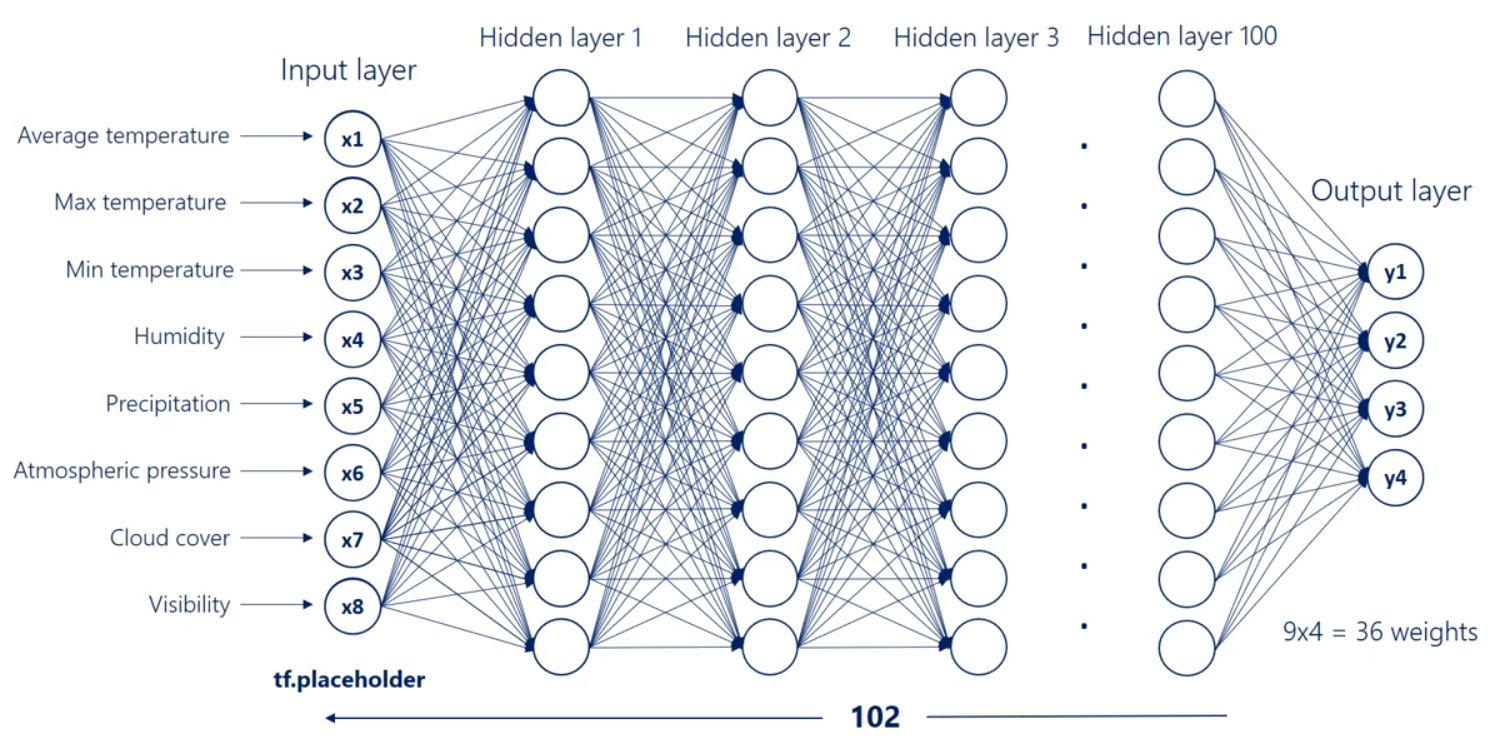

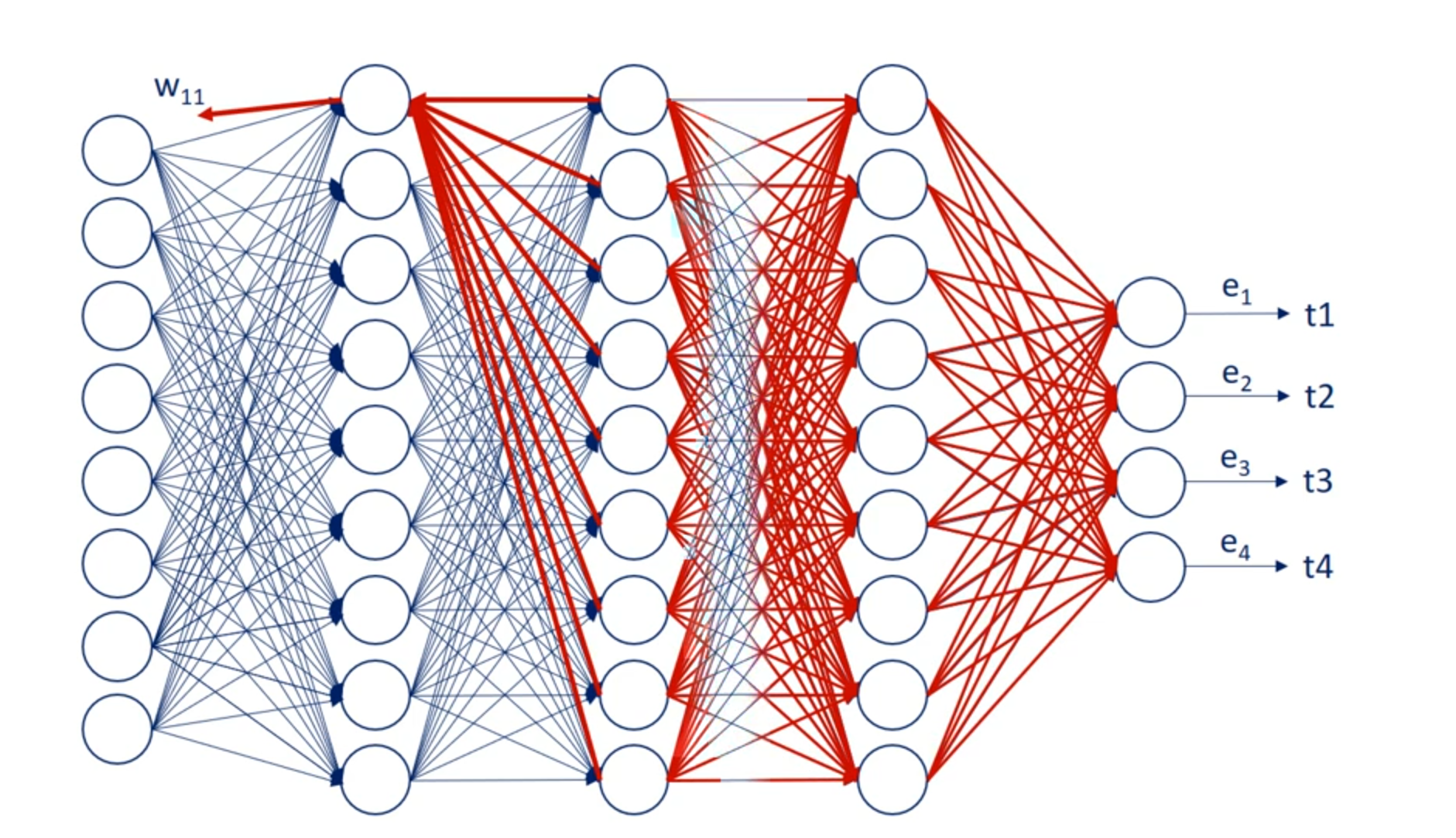

Well then we have the first hit and we're using the same logic we can linearly combine the hidden units and apply a nonlinearity right. Indeed this time though there are nine input hit in units and none output hit in units. Therefore the weights will be contained in a 9 by 9 matrix and there will be 81 arrows. Finally we applied nonlinearity and we reached the second hidden layer. We can go on and on and on like this we can add a hundred hidden layers if we want. That's a question of how deep we want our deep net to be.

Finally we'll have the last hidden layer when we apply the operation once again we will reach the output layer the output units depend on the number of outputs we would like to have in this picture. There are four. They may be the temperature humidity precipitation and pressure for the next day. To reach this point we will have a nine by four Waite's matrix which refers to 36 arrows or 36 weights exactly what we expected.

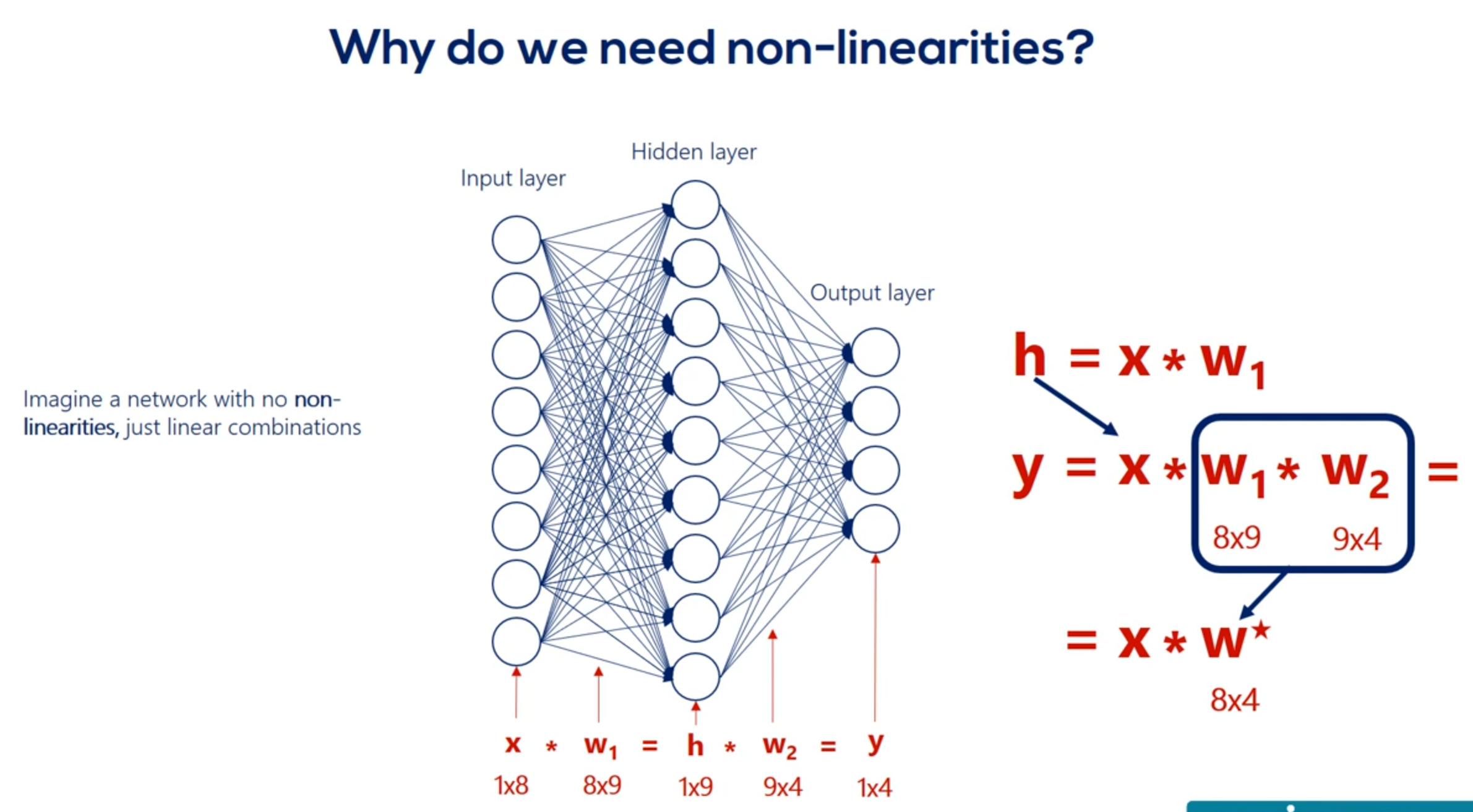

# Why do we need non-linearities

All right as before our optimization goal is finding values for major cities that would allow us to convert inputs into correct outputs as best as we can. This time though we are not using a single linear model but a complex infrastructure with a much higher probability of delivering a meaningful result.

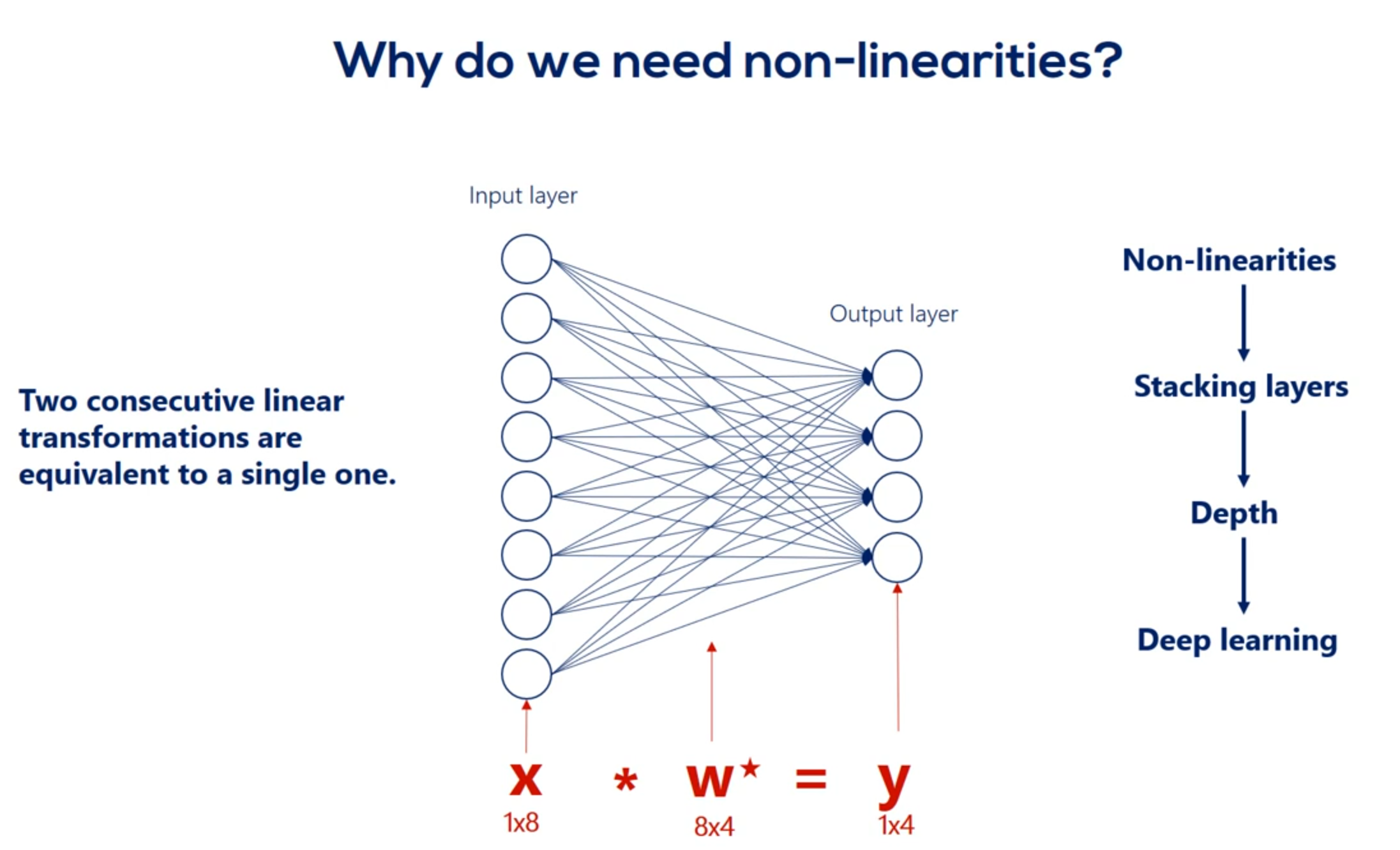

We said non-linearities are needed so we can represent more complicated relationships. While that is true it isn't the full picture an important consequence of including non-linearities is the ability to stack Layers stacking layers is the process of placing one layer after the other in a meaningful way. Remember that it's fundamental.

We cannot stack layers when we only have linear relationships.

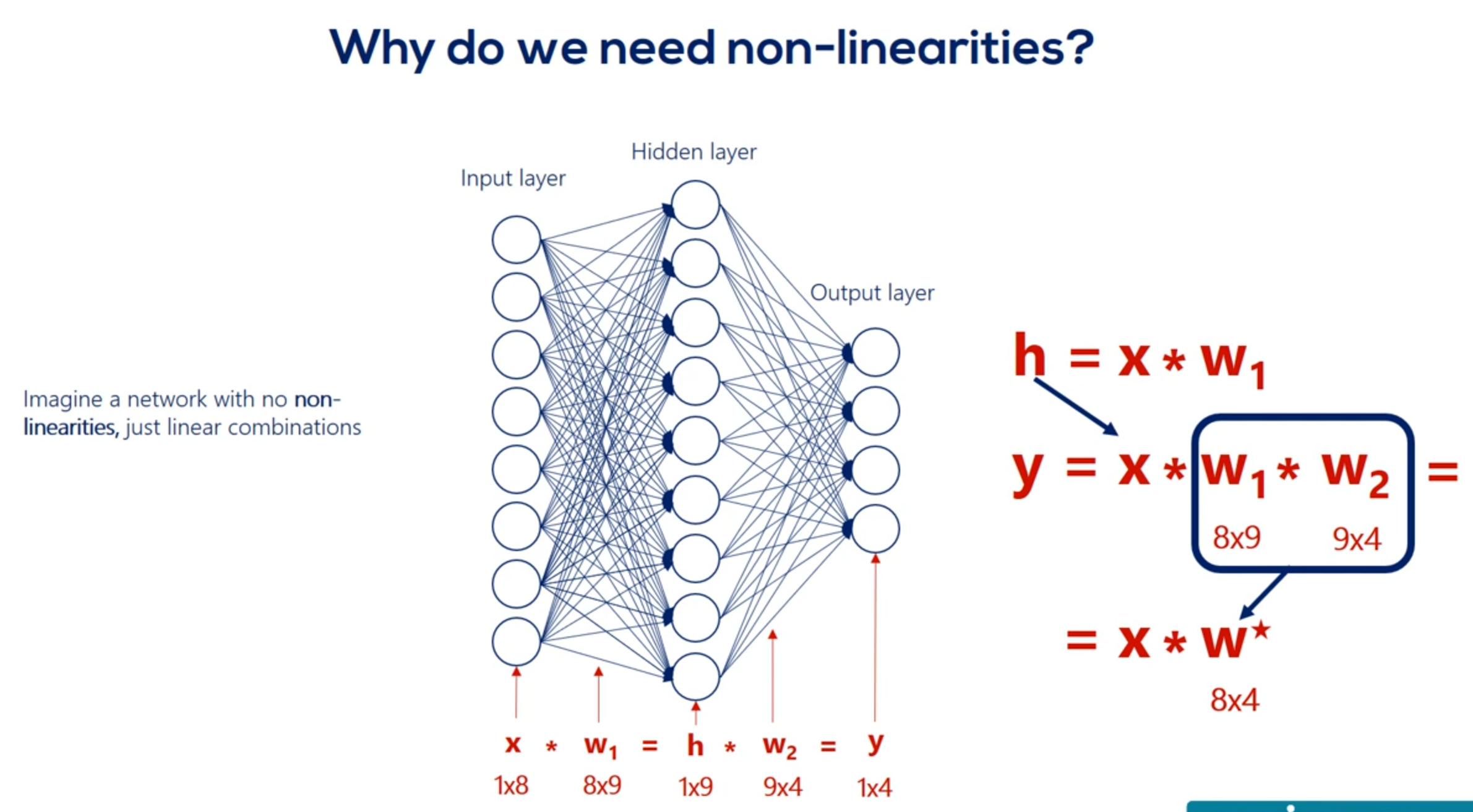

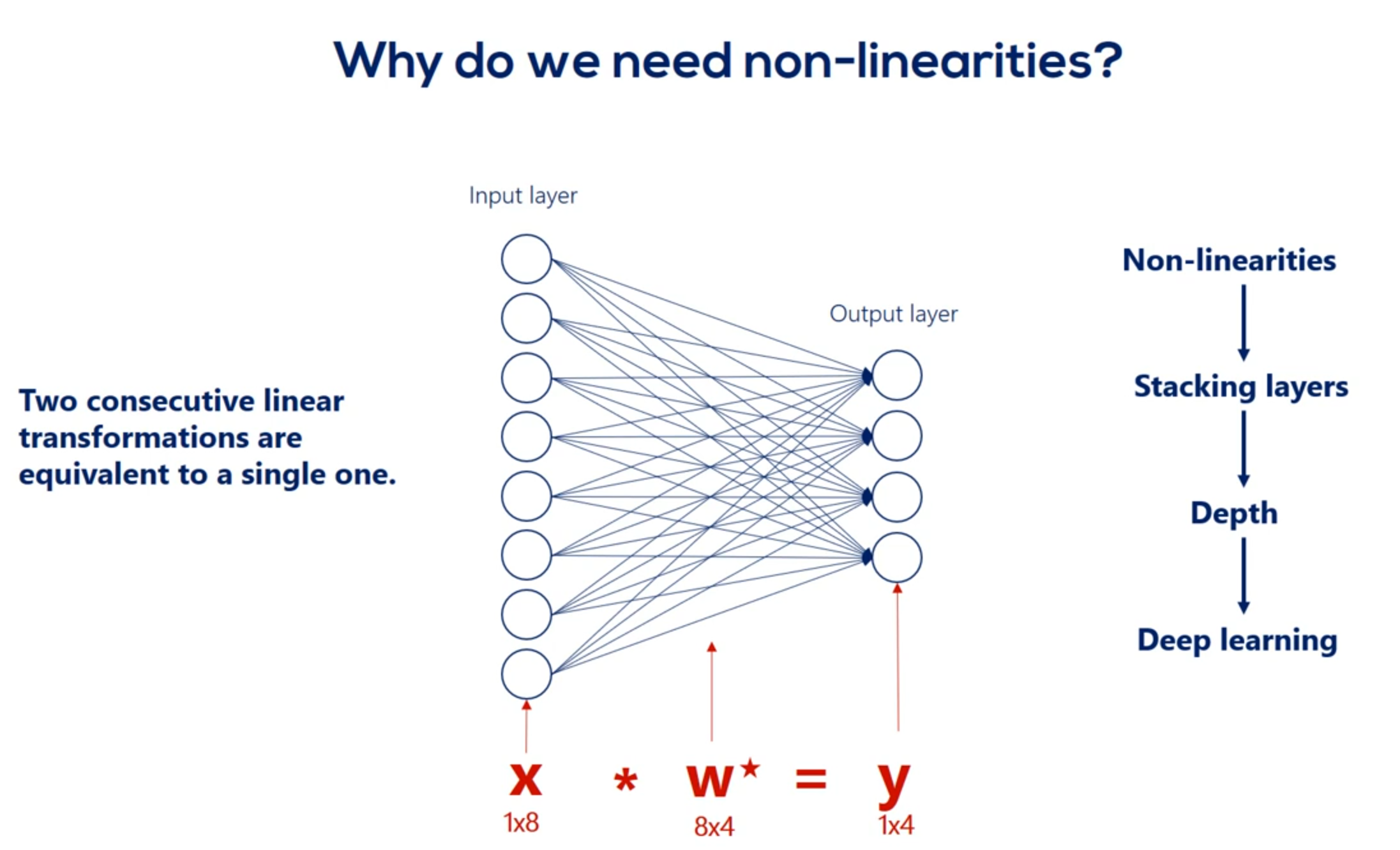

The point we will make is that we cannot stack Lears when we have only linear relationships. Let's prove it. Imagine we have a single hidden layer and there are no non-linearities. So our picture looks this way. There are eight input nodes nine head and nodes in the hidden layer and four output nodes. Therefore we have an eight by nine Waites matrix. When your relationship between the input layer and the hidden layer Let's call this matrix W. one. The hidden units age according to the linear model H is equal to x times w 1. Let's ignore the biases for a while. So our hidden units are summarized in the matrix H with a shape of one by nine. Now let's get to the output layer from the hidden layer once again according to the linear model Y is equal to h times W2 we have W2 as these weights are different. We already know the H matrix is equal to x times. W1 Right. Let's replace h in this equation Y is equal to x times w 1 times. W2 but w 1 and w 2 can be multiplied right. What we get is a combined matrix W star with dimensions 8 by 4 well then our deep net can be simplified into a linear model which looks this way y equals x times w star knowing that we realize the hidden layer is completely useless in this case. We can just train this simple linear model and we would get the same result in mathematics. This seems like an obvious fact but in machine learning it is not so clear from the beginning. The two consecutive linear transformations are equivalent to a single one. Even if we add 100 layers the problem would be simplified to a single transformation. That is the reason we need non-linearities. Without them stacking layers one after the other is meaningless and without stacking layers we will have no depth. What's more with no depth. Each and every problem will equal the simple linear example we did earlier. And many practitioners would tell you it was borderline machine learning. All right let's summarize in one sentence.

You have deep nets and find complex relationships through arbitrary functions. We need non-linearities.

Point taken.

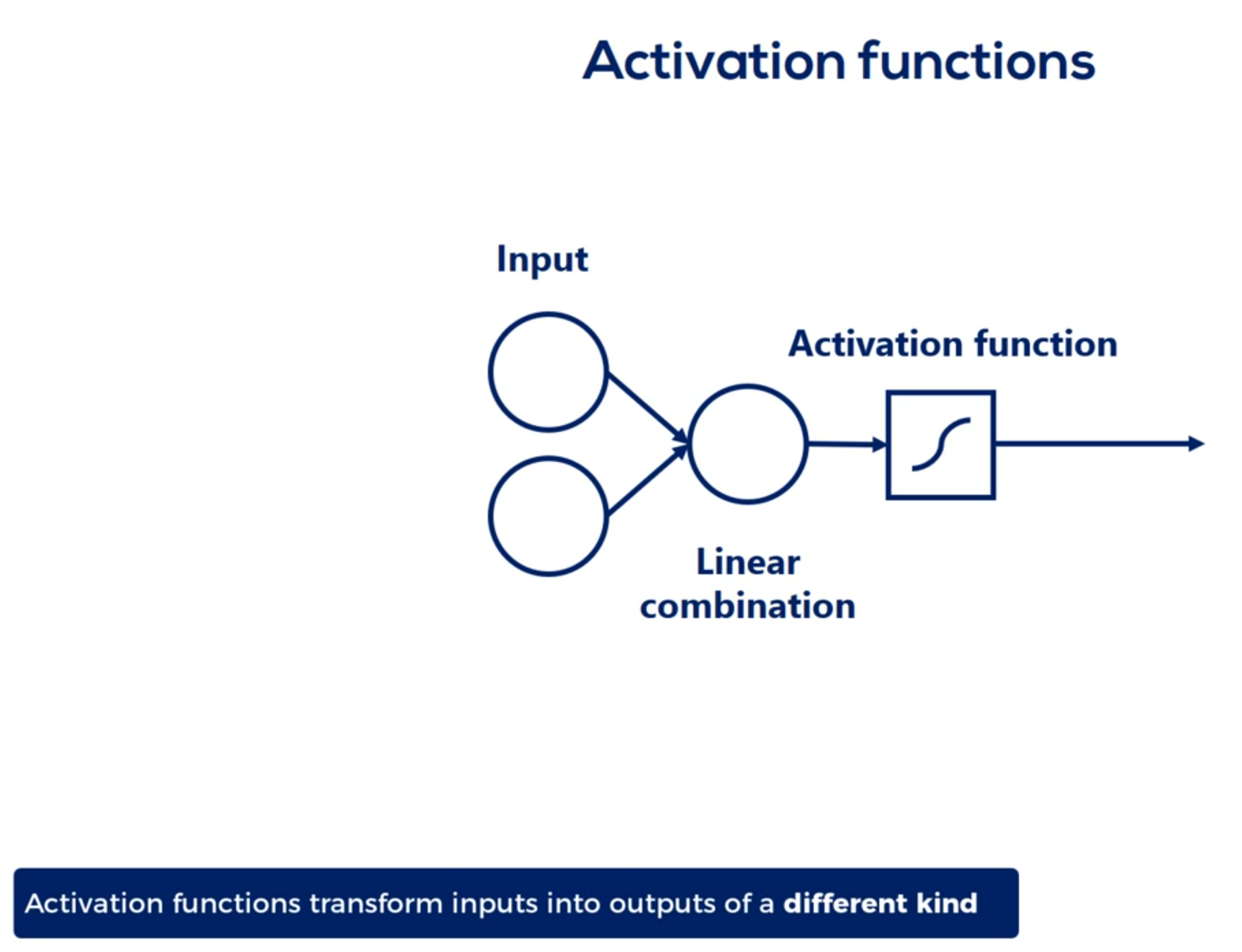

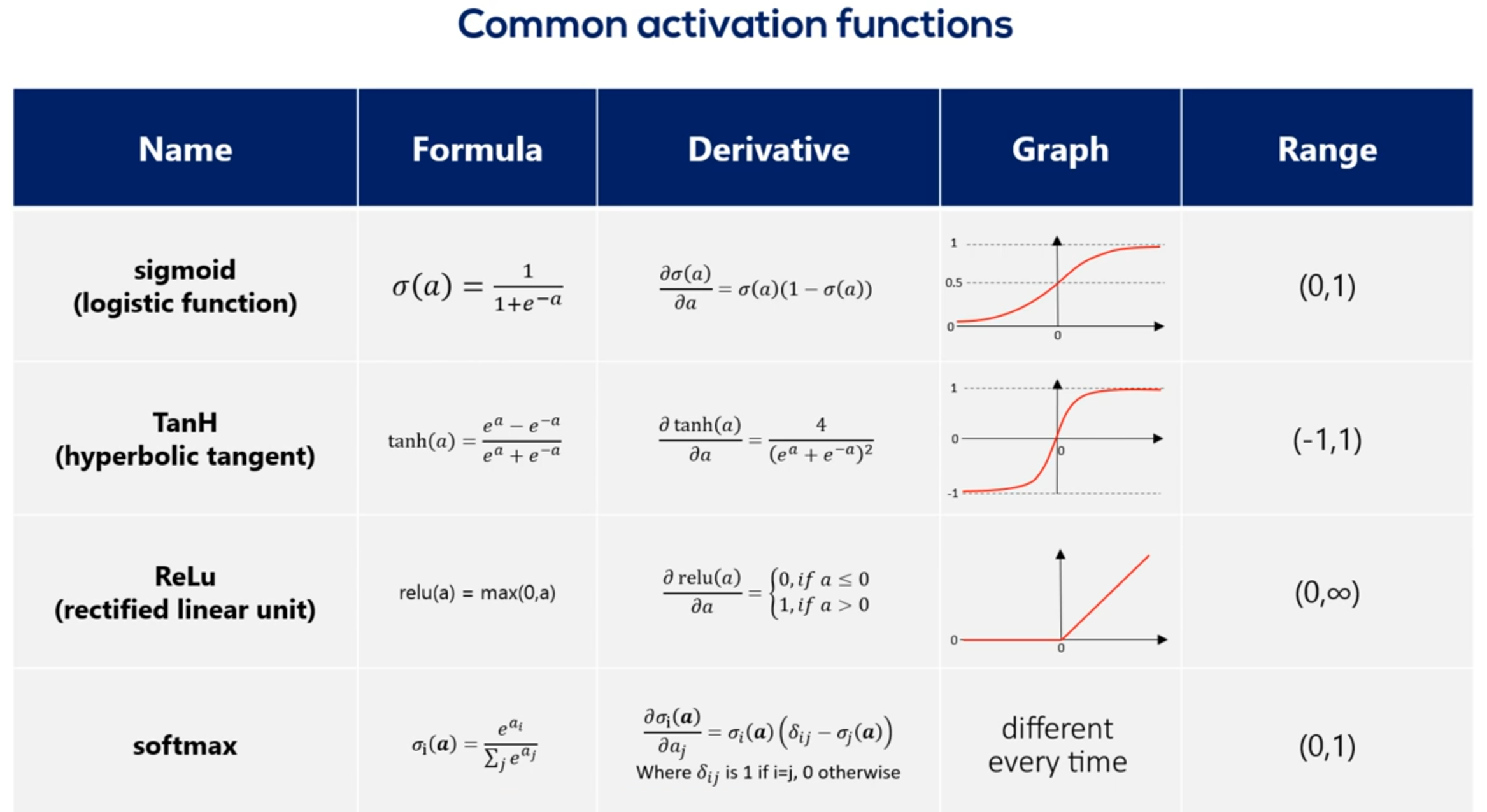

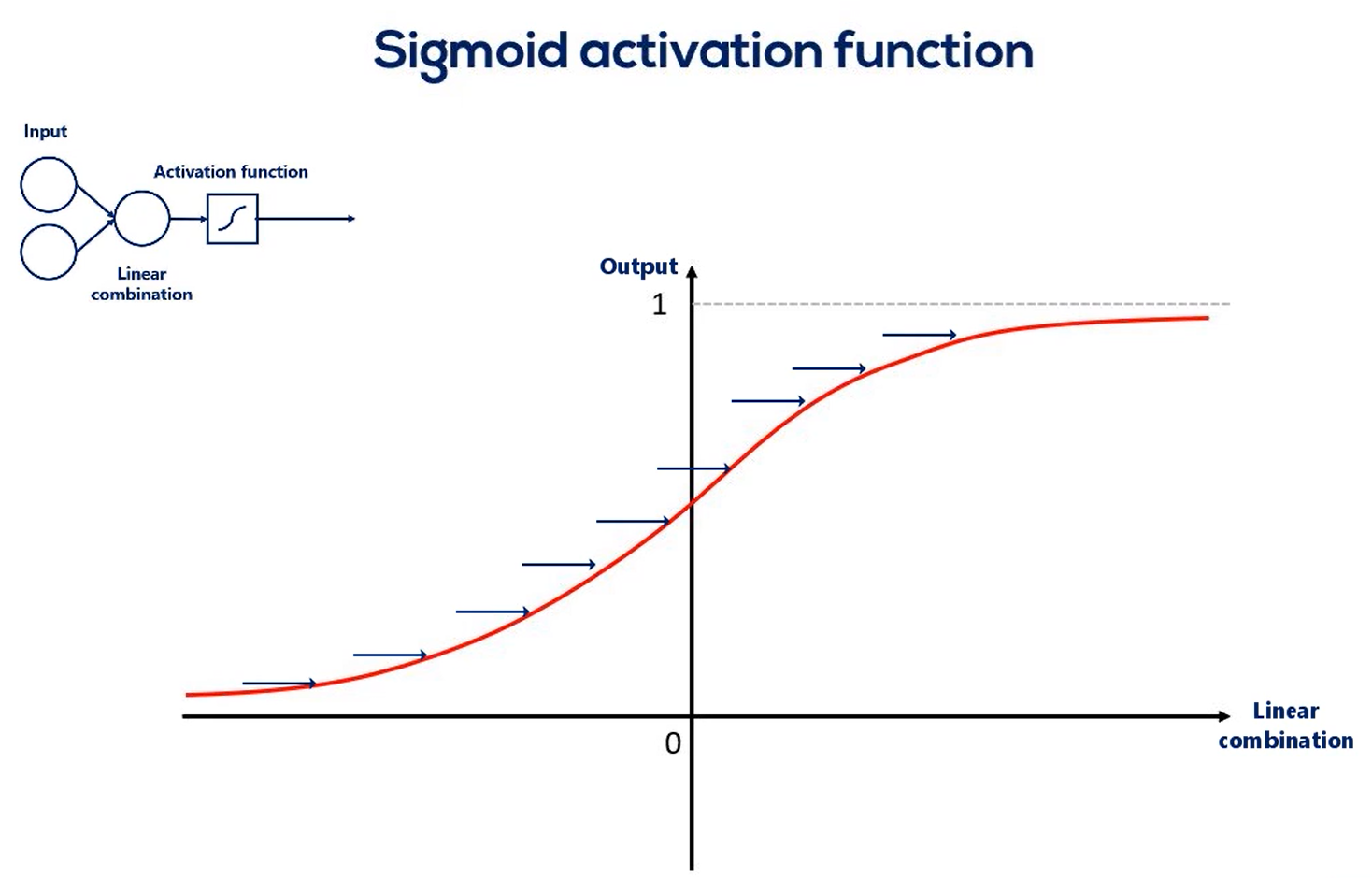

Non-linearities are also called activation functions. Henceforth that's how we will refer to them activation functions transform inputs into outputs of a different kind.

# Activation functions

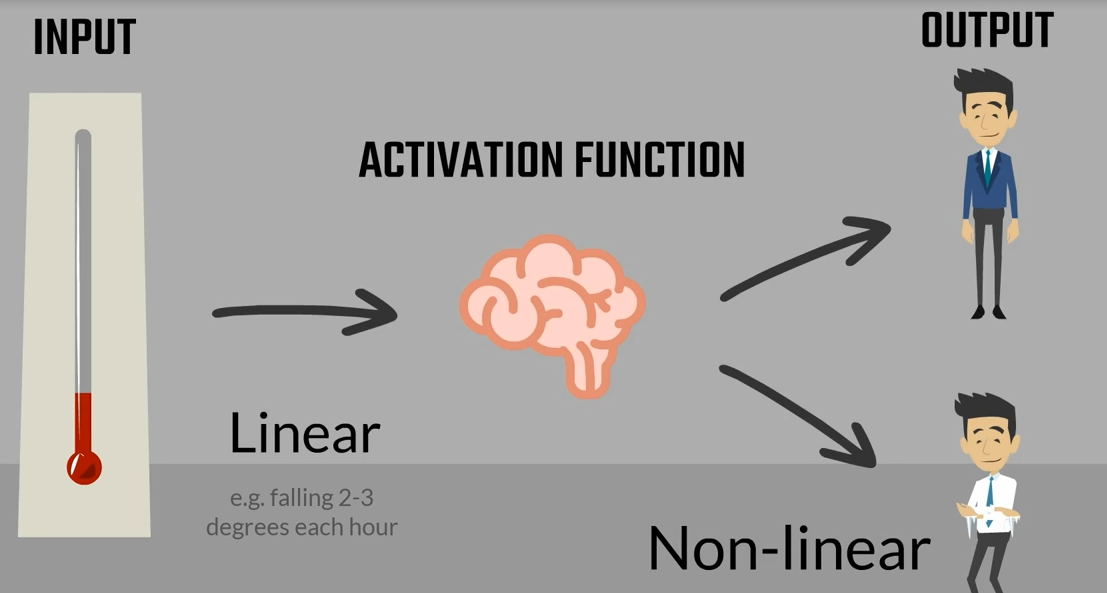

Think about the temperature outside. I assume you wake up and the sun is shining. So you put on some light clothes you go out and feel warm and comfortable. You carry your jacket in your hands in the afternoon the temperature starts decreasing. Initially you don't feel a difference at some point though your brain says it's getting cold. You listen to your brain and put on your jacket the input you got was the change in the temperature the activation function transformed this input into an action put on the jacket or continue carrying it. This is also the output after the transformation. It is a binary variable jacket or no jacket. That's the basic logic behind nonlinearities the change in the temperature was following a linear model

as it was steadily decreasing the activation function transformed this relationship into an output linked to the temperature but was of a different kind

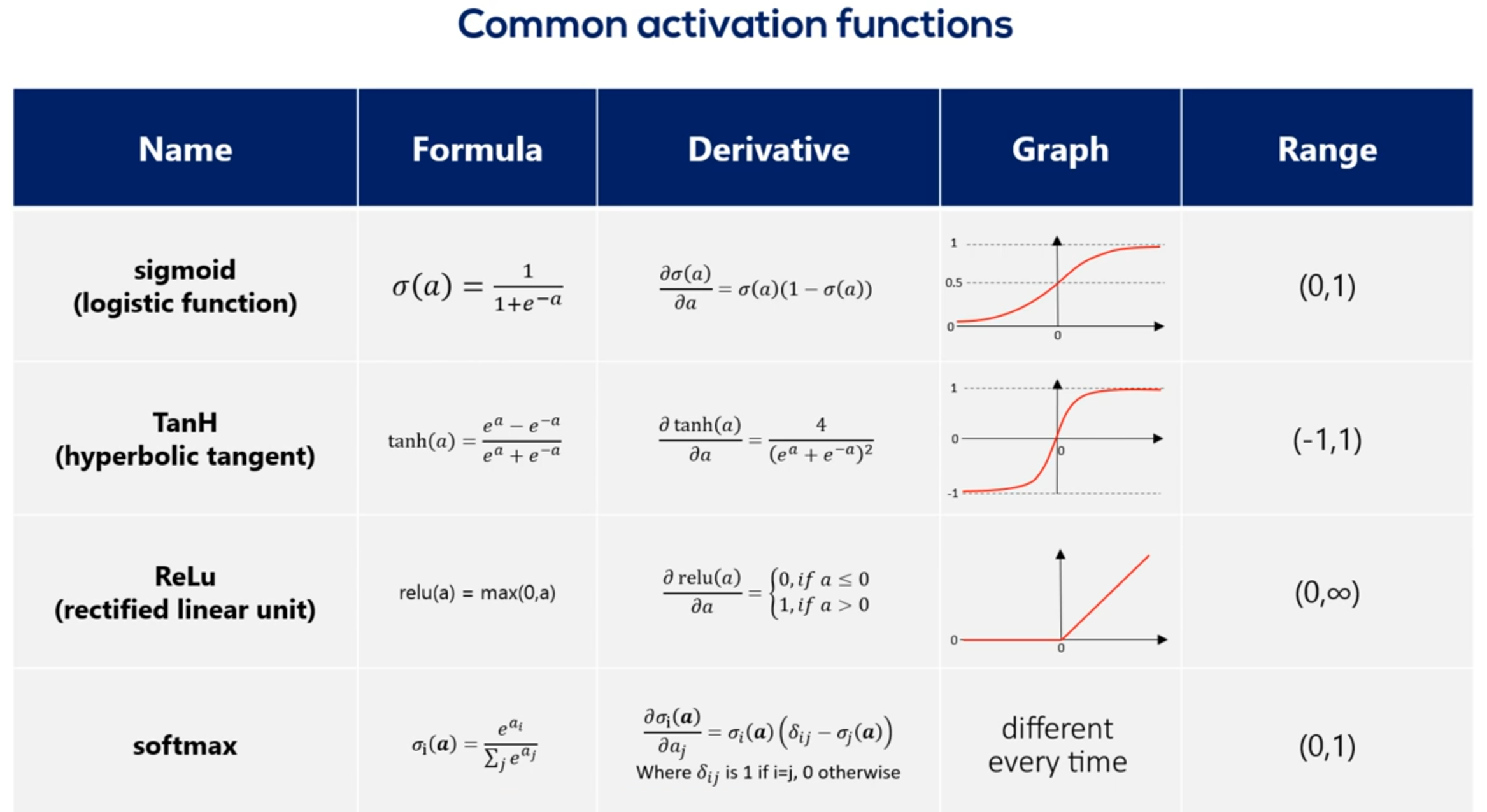

its derivative as you may recall the derivative is an essential part of the gradient descent. Naturally when we work with tenths or flow we won't need to calculate the derivative as tenths or flow. Does that automatically Anyhow the purpose of this lesson is understanding these functions. There are graphs and ranges in a way that would allow us to acquire intuition about the way they behave. Here's the functions graph. And finally we have it's range. Once we have applied the sigmoid as an activator all the outputs will be contained in the range from 0 to 1. So the output is somewhat standardized. All right here are the other three common activators the Tench also known as the hyperbolic tangent. The real Lu aka the rectified linear unit and the soft Max activator you can see their formulas derivatives graphs and ranges. The saaf next graph is not missing. The reason we don't have it here is that it is different every time. Pause this video for a while and examine the table in more detail. You can also find this table in the course notes. So all these functions are activators right. What makes them similar. Well let's look at their graphs all Armano tonic continuous and differentiable. These are important properties needed for the optimization process as we are not there yet. We will leave this issue for later.

Before we conclude I would like to make this remark. Activation functions are also called transfer functions because of the transformation properties. The two terms are used interchangeably in machine learning context but have differences in other fields. Therefore to avoid confusion we will stick to the term activation functions.

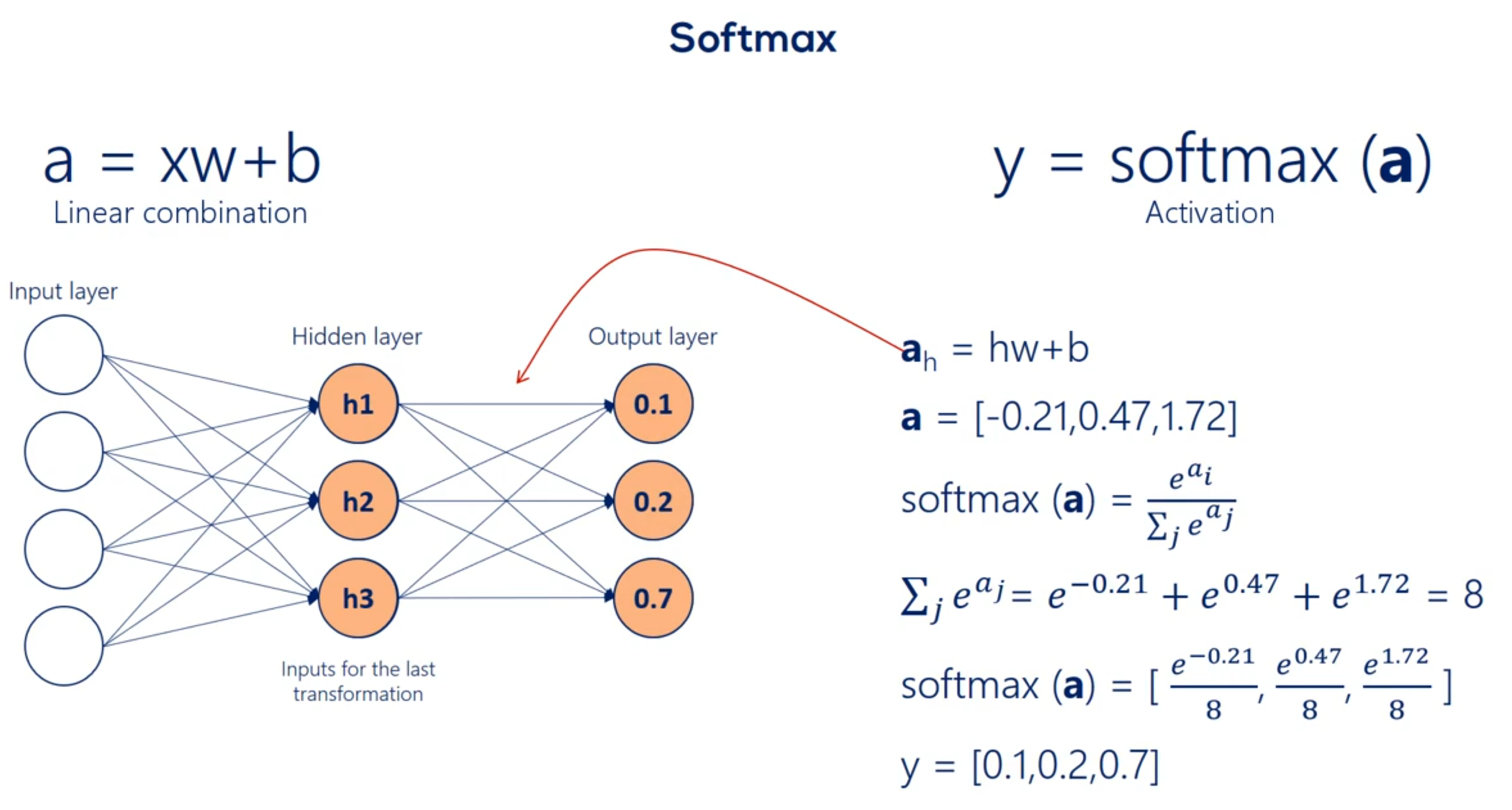

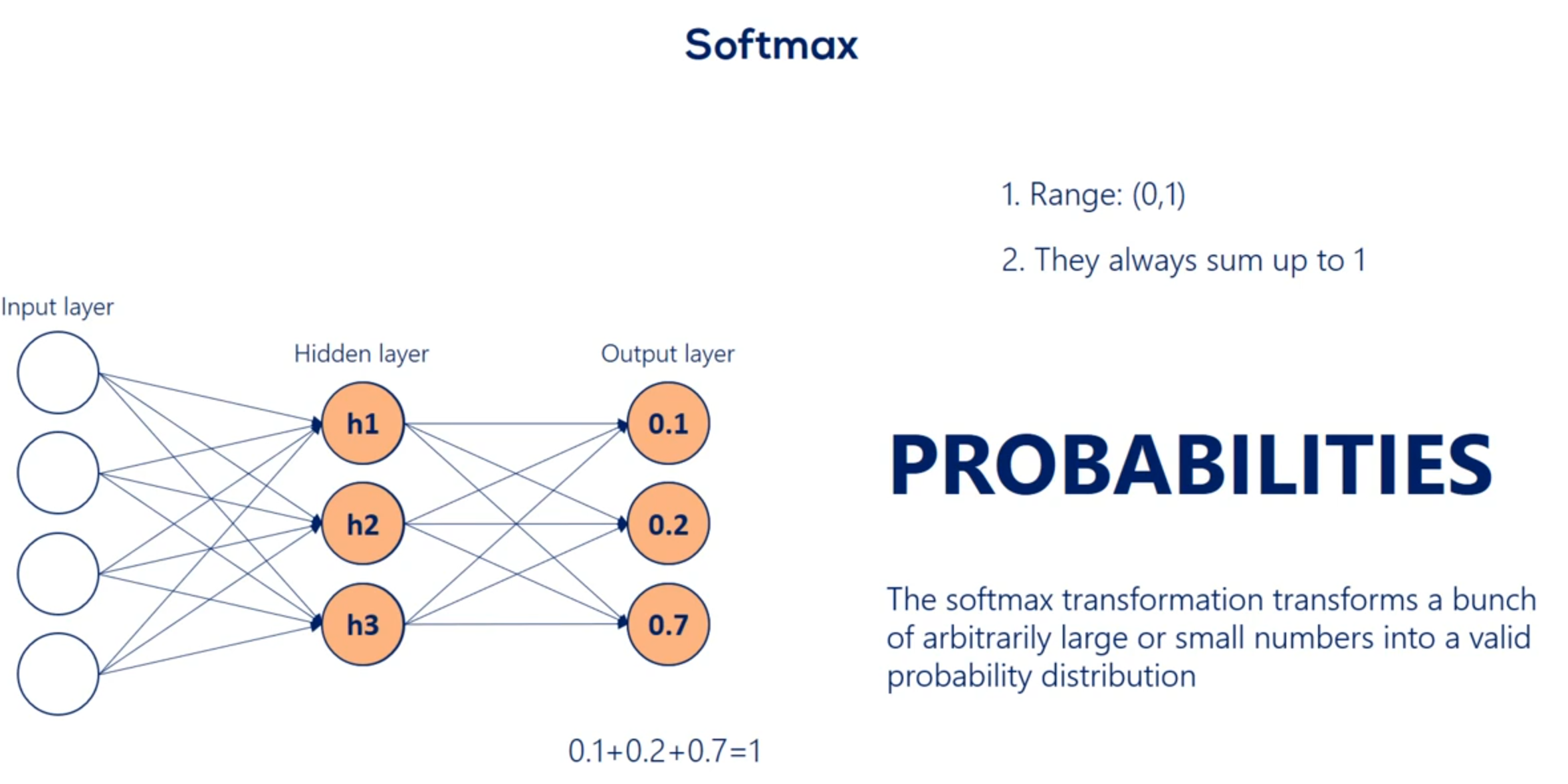

# Softmax function

Let's continue exploring this table which contains mostly Greek letters we said the soft max function has no definite graph y so while this function is different if we take a careful look at its formula we would see the key difference between this function and the other is it takes an argument the whole vector A instead of individual elements. So the self max function is equal to the exponential of the element at position. I divided by the sum of the exponentials of all elements of the vector. So while the other activation functions get an input value and transform it regardless of the other elements the SAAF Max considers the information about the whole set of numbers we have.

A key aspect of the soft Max transformation is that the values it outputs are in the range from 0 to 1. There is some is exactly 1 What else has such a property. Probabilities Yes probabilities indeed. The point of the soft Max transformation is to transform a bunch of arbitrarily large or small numbers that come out of previous layers and fit them into a valid probability distribution. This is extremely important and useful.

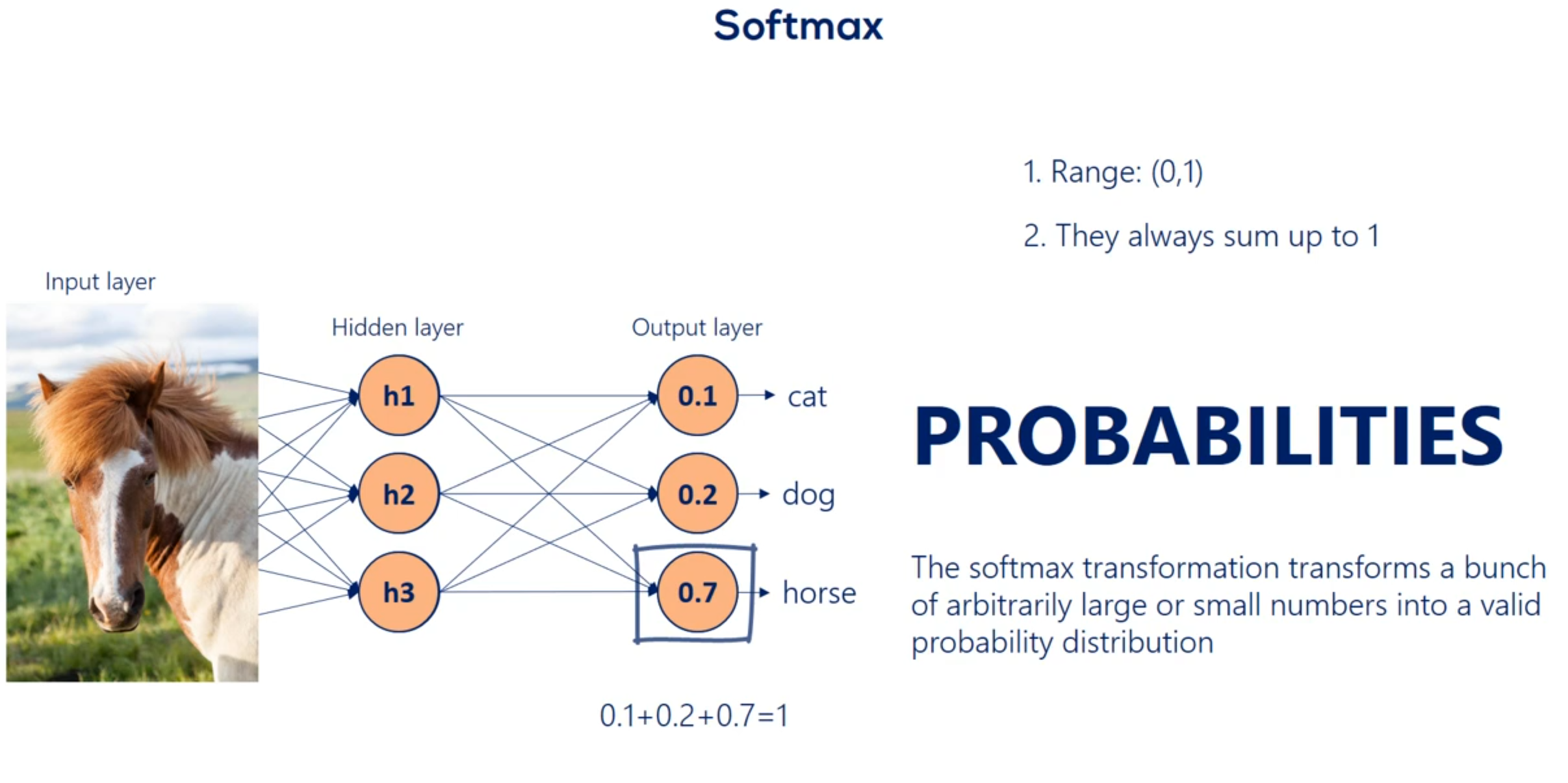

Remember our example with cats dogs and horses we saw earlier. One photo was described by a vector containing 0.1 0.2 and 0.7. We promise we will tell you how to do that. Well that's how through a soft Max transformation we kept our promise now that we know we are talking about probabilities we can comfortably say we are 70 percent certain the image is a picture of a horse. This makes everything so intuitive and useful that the SAAF next activation is often used as the activation of the final output layer and classification problems. So no matter what happens before the final output of the algorithm is a probability distribution.

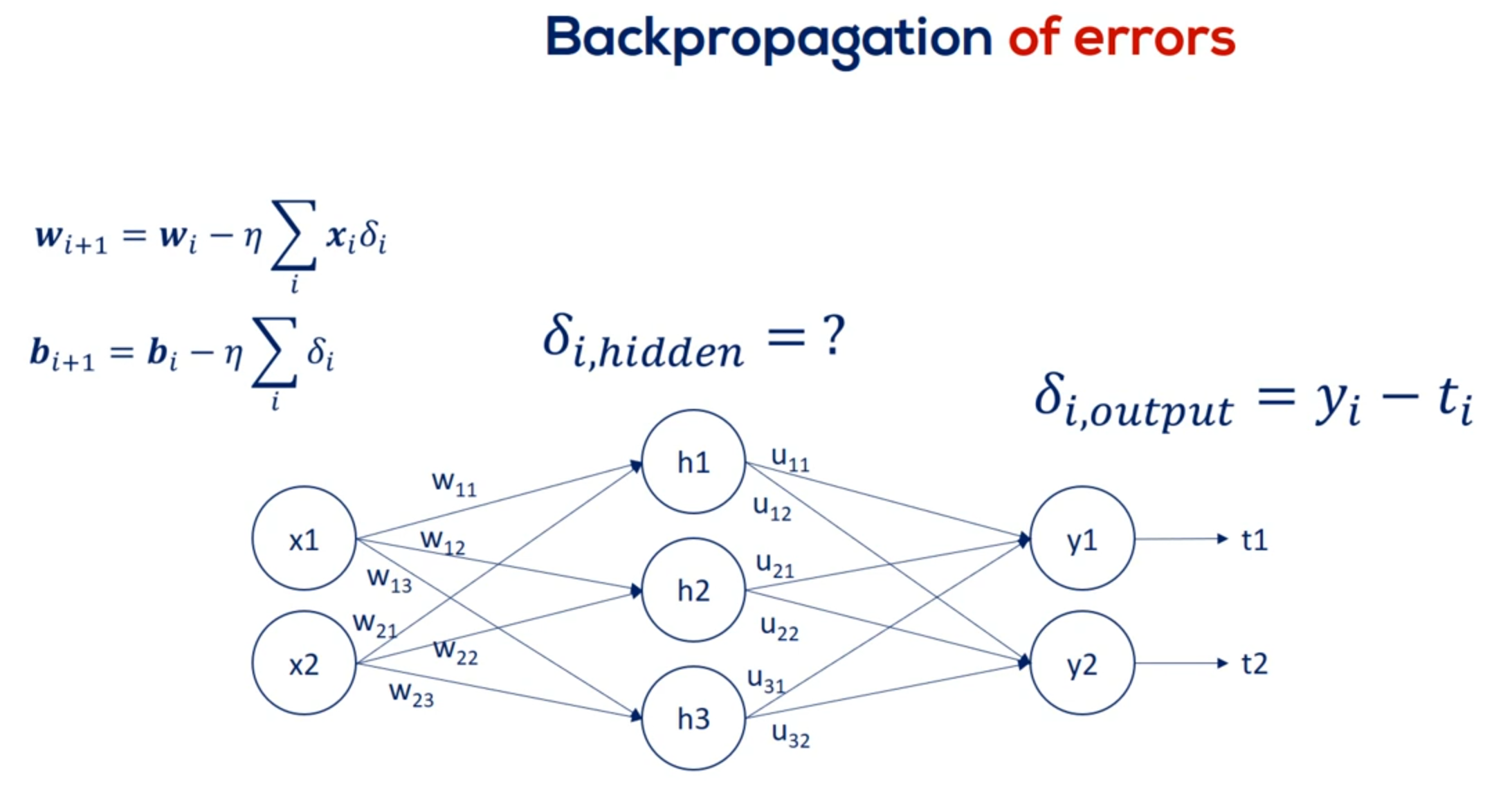

# Backpropagation

First I'd like to recap what we know so far we've seen and understood the logic of how layers are stacked.

We've also explored a few activation functions and spent extra time showing they are central to the concept of stacking layers.

Moreover by now we have said 100 times that the training process consists of updating parameters through the gradient descent for optimizing the objective function.

In supervised learning the process of optimization consisted of minimizing the loss.

Our updates were directly related to the partial derivatives of the loss and indirectly related to the

errors or deltas as we called them.

Let me remind you that the Deltas were the differences between the targets and the outputs.

All right as we will see later deltas for the hidden layers are trickier to define. Still they have a similar meaning.

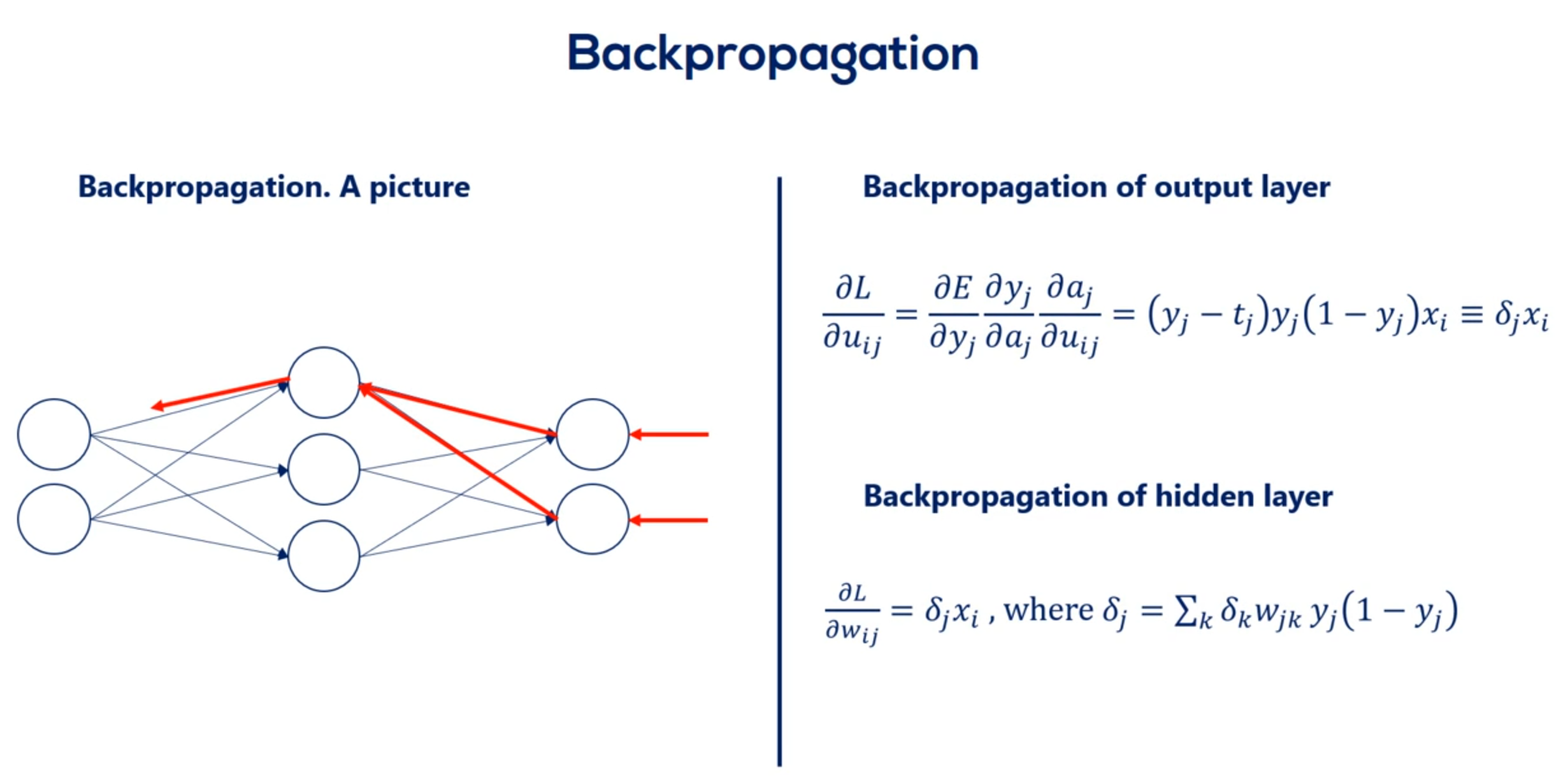

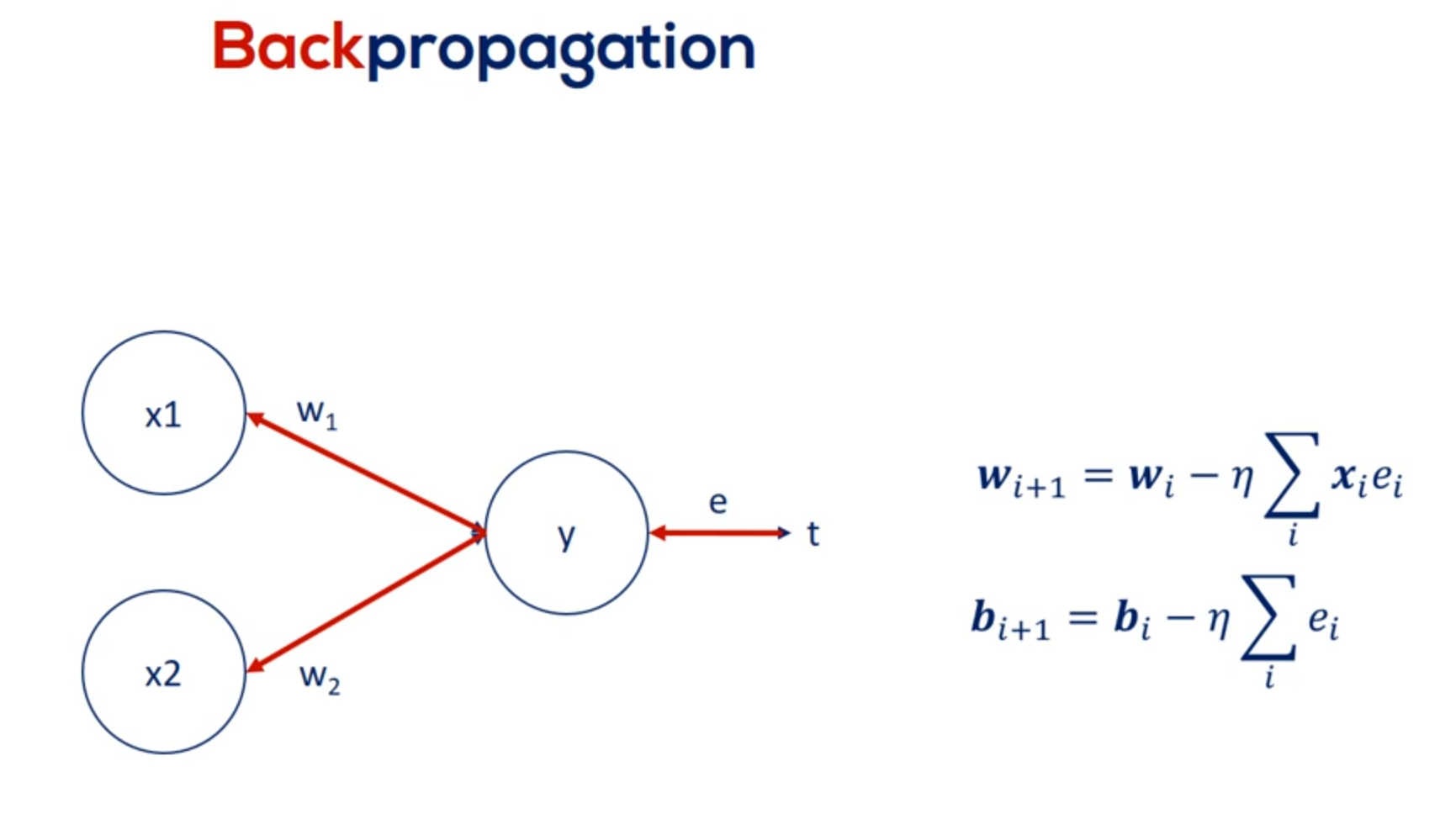

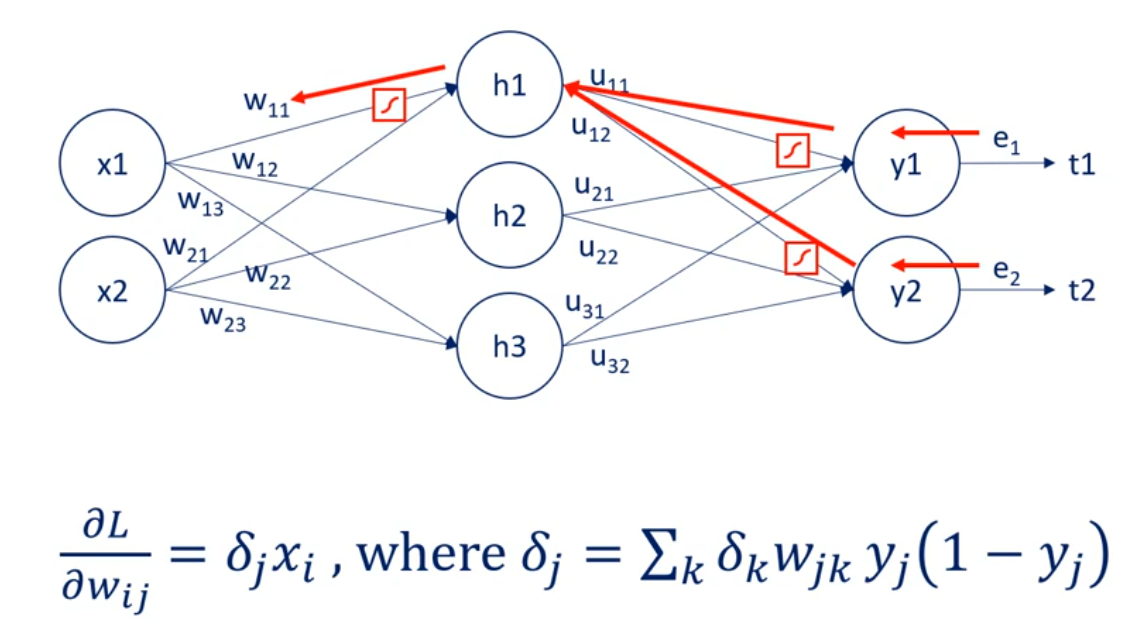

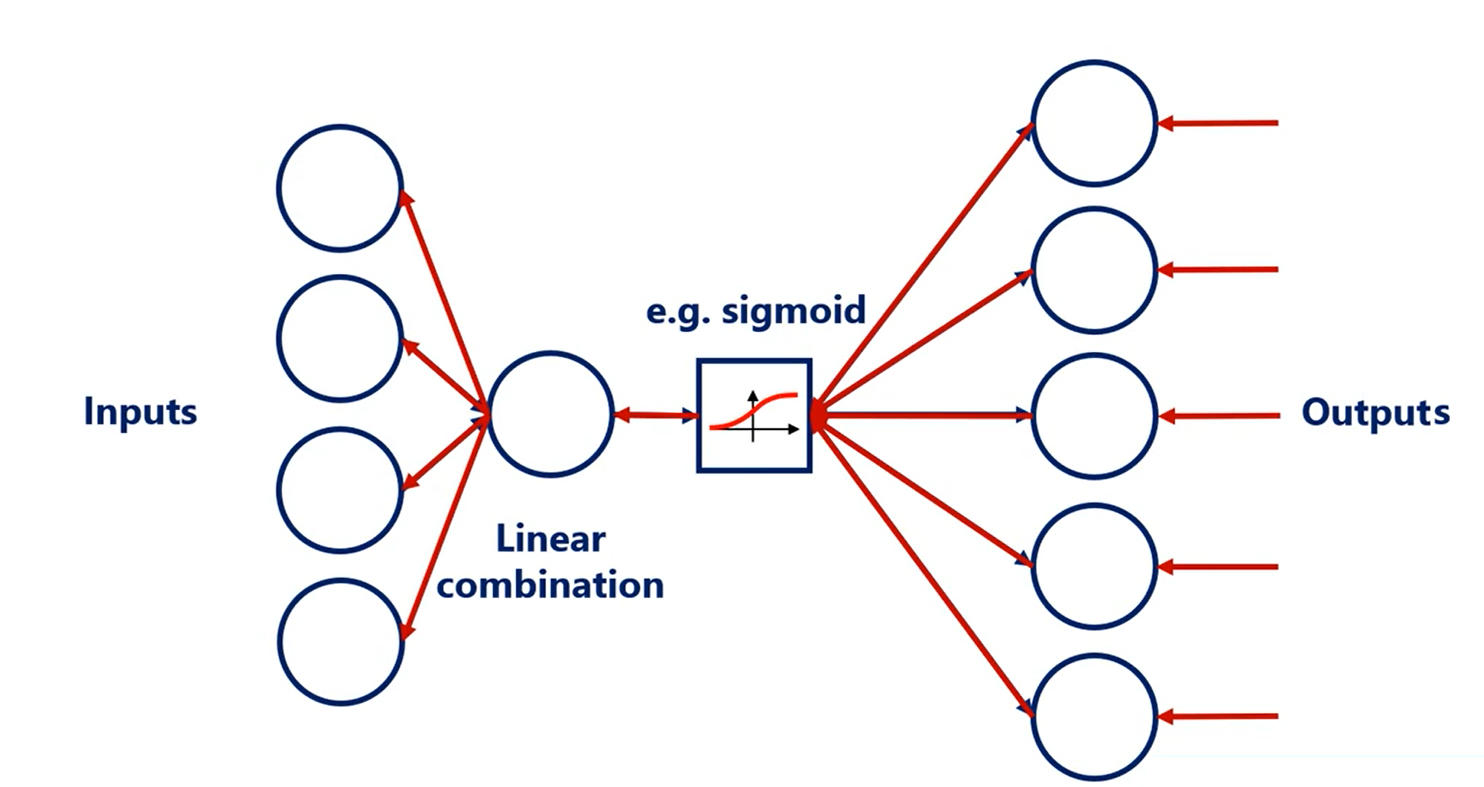

The procedure for calculating them is called back propagation of errors having these deltas allows us to vary parameters using the familiar update rule.

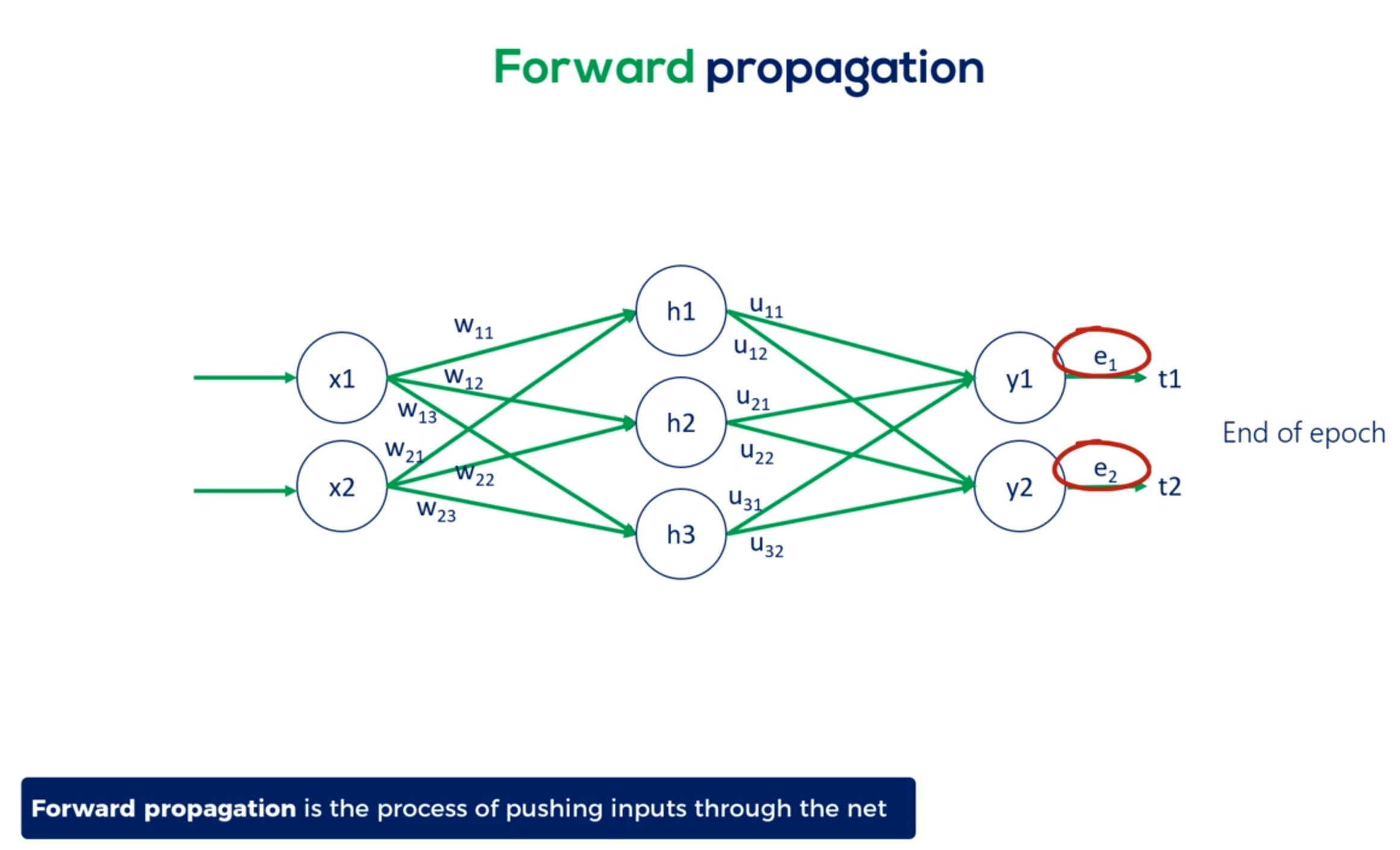

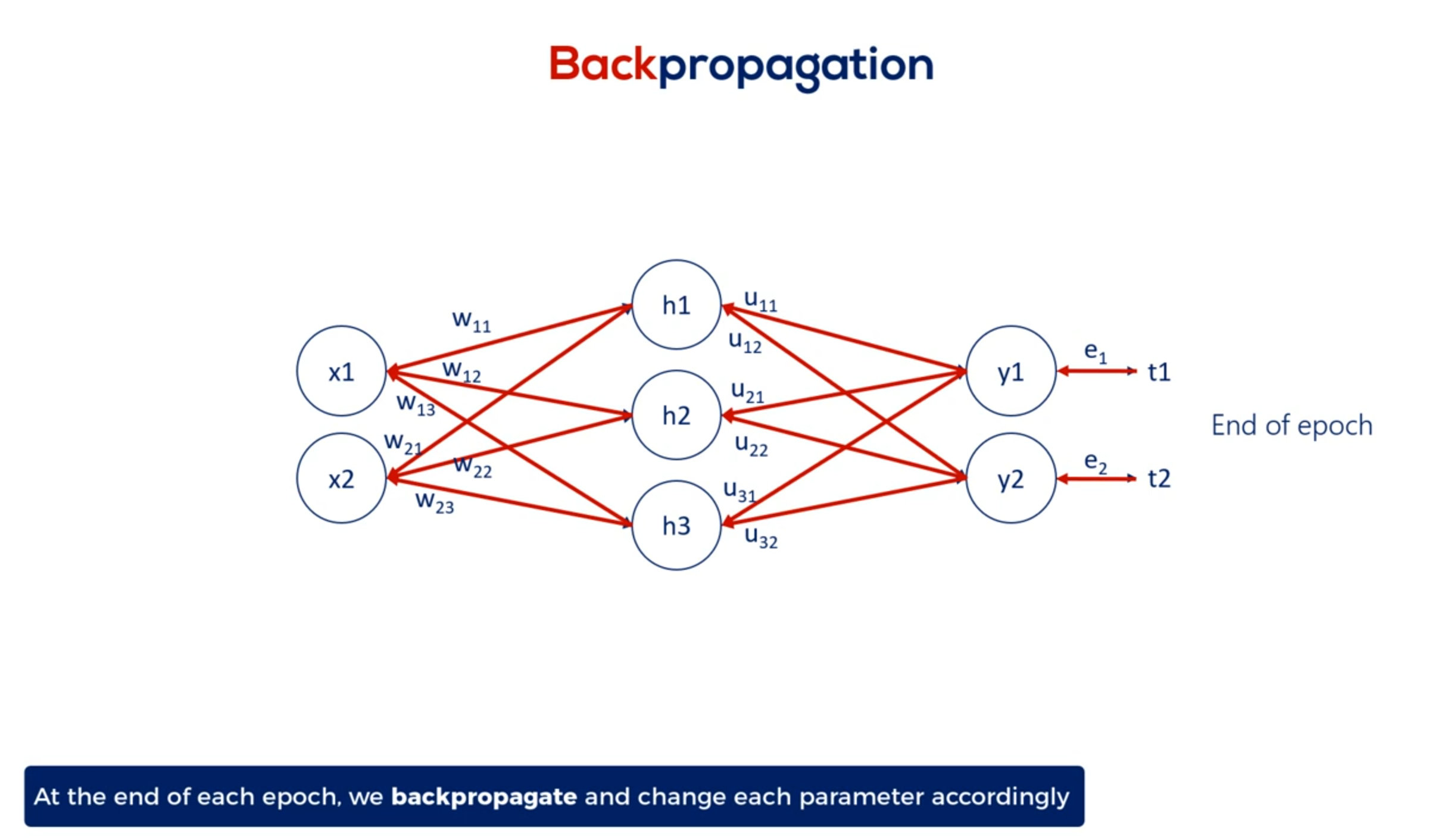

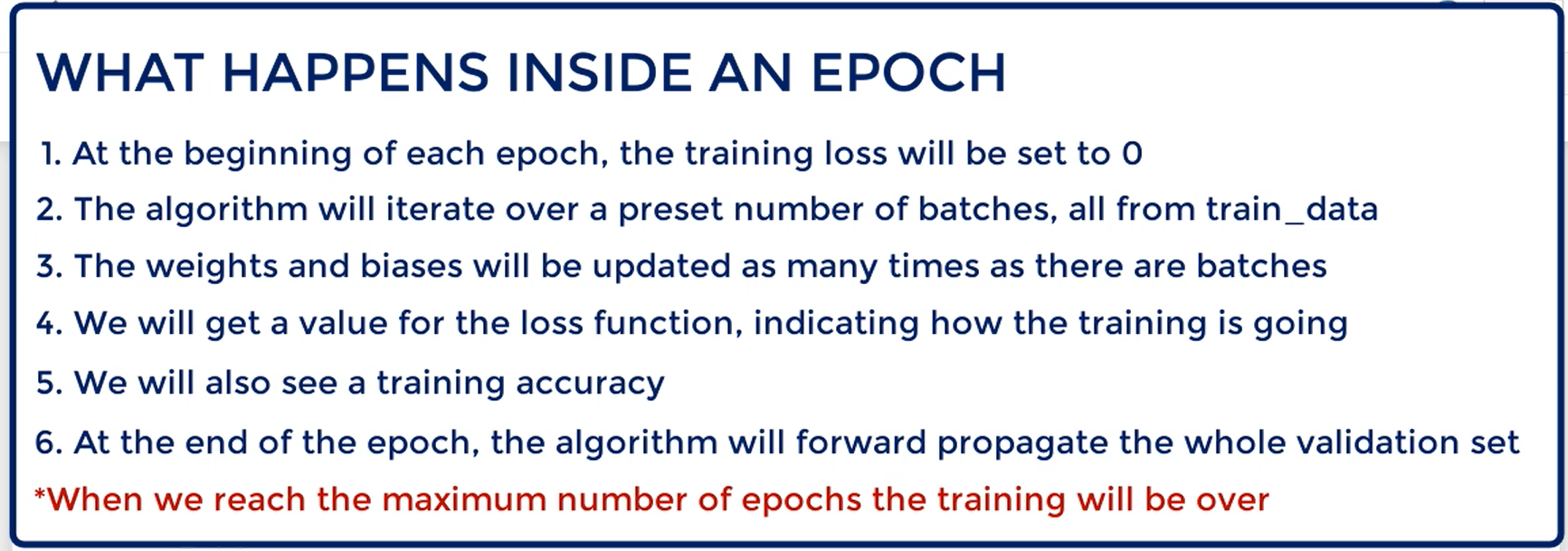

Let's start from the other side of the coin forward propagation

Forward propagation is the process of pushing inputs through the net.

At the end of each epoch the obtained outputs are compared to the targets to form the errors.

Then we back propagate through partial derivatives and change each parameter so errors at the next epoch are minimized.

For the minimal example the back propagation consisted of a single step, aligning the weights, given the errors we obtained.

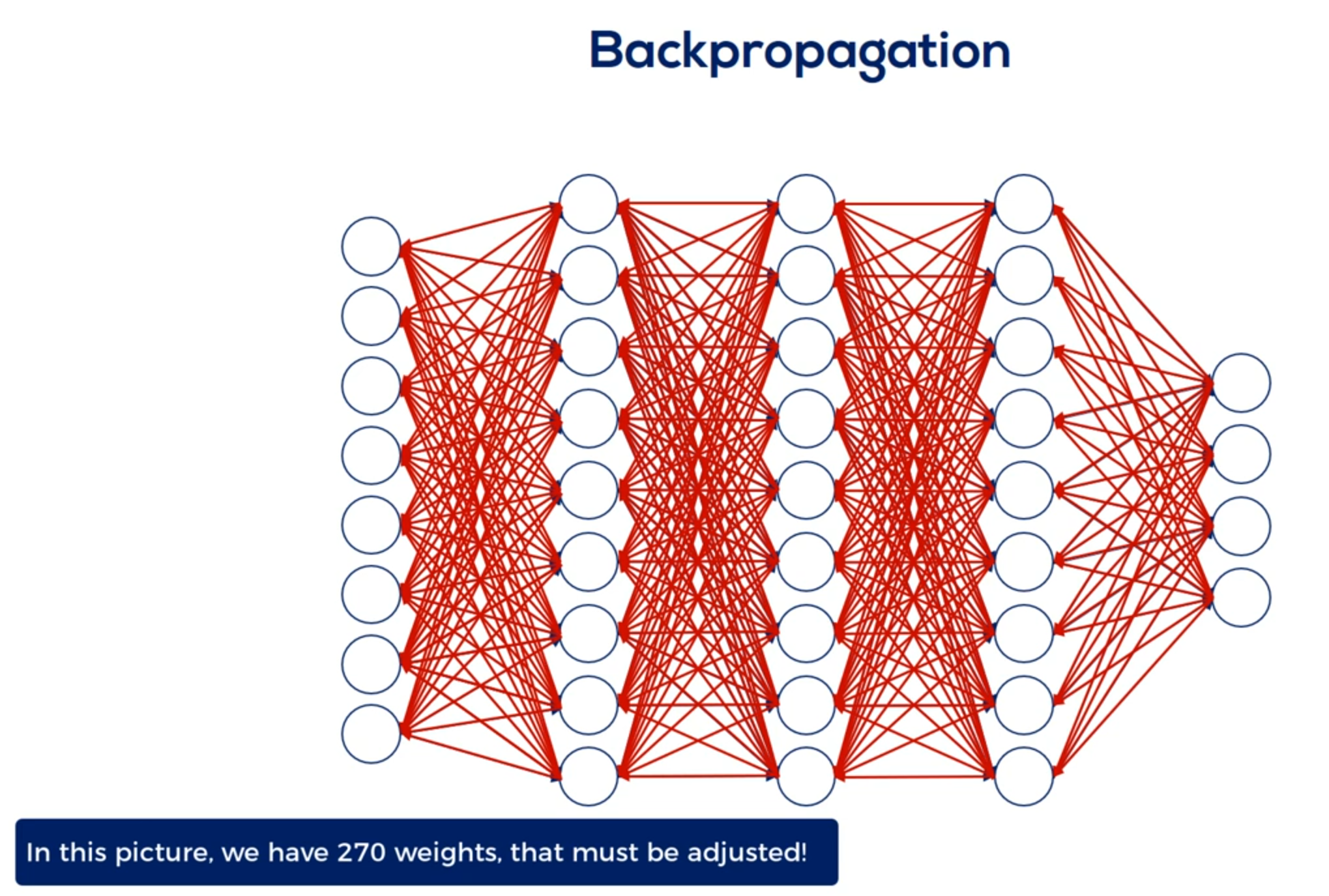

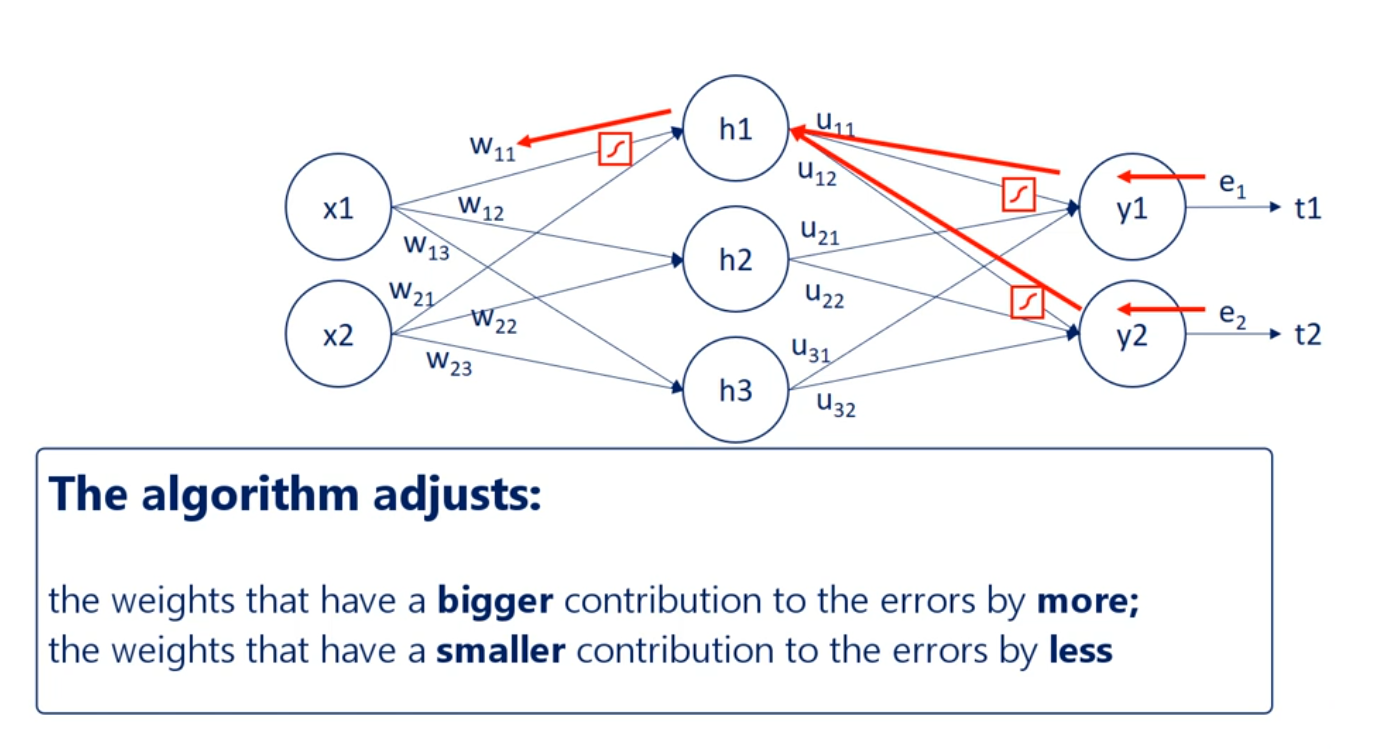

Here's where it gets a little tricky when we have a deep net. We must update all the weights related to the input layer and the hidden layers. For example in this famous picture we have 270 weights and yes this means we had to manually draw all 270 arrows you see here.

So updating all 270 weights is a big deal.

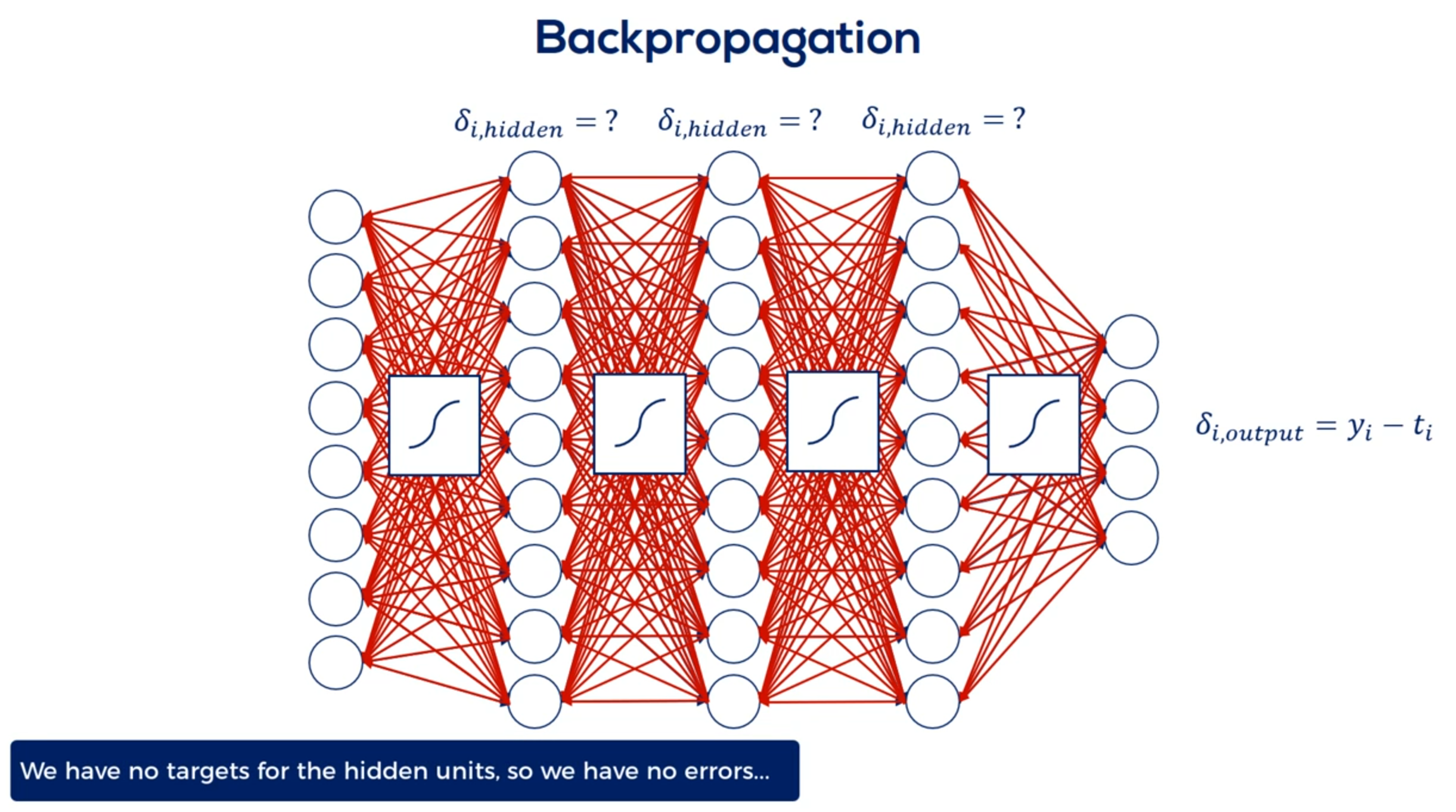

But wait. We also introduced activation functions. This means we have to update the weights accordingly. Considering the use nonlinearities and their derivatives.

Finally to update the weights we must compare the outputs to the targets. This is done for each layer but we have no targets for the hidden units. We don't know the errors So how do we update the weights. That's what back propagation is all about. We must arrive the appropriate updates as if we had targets.

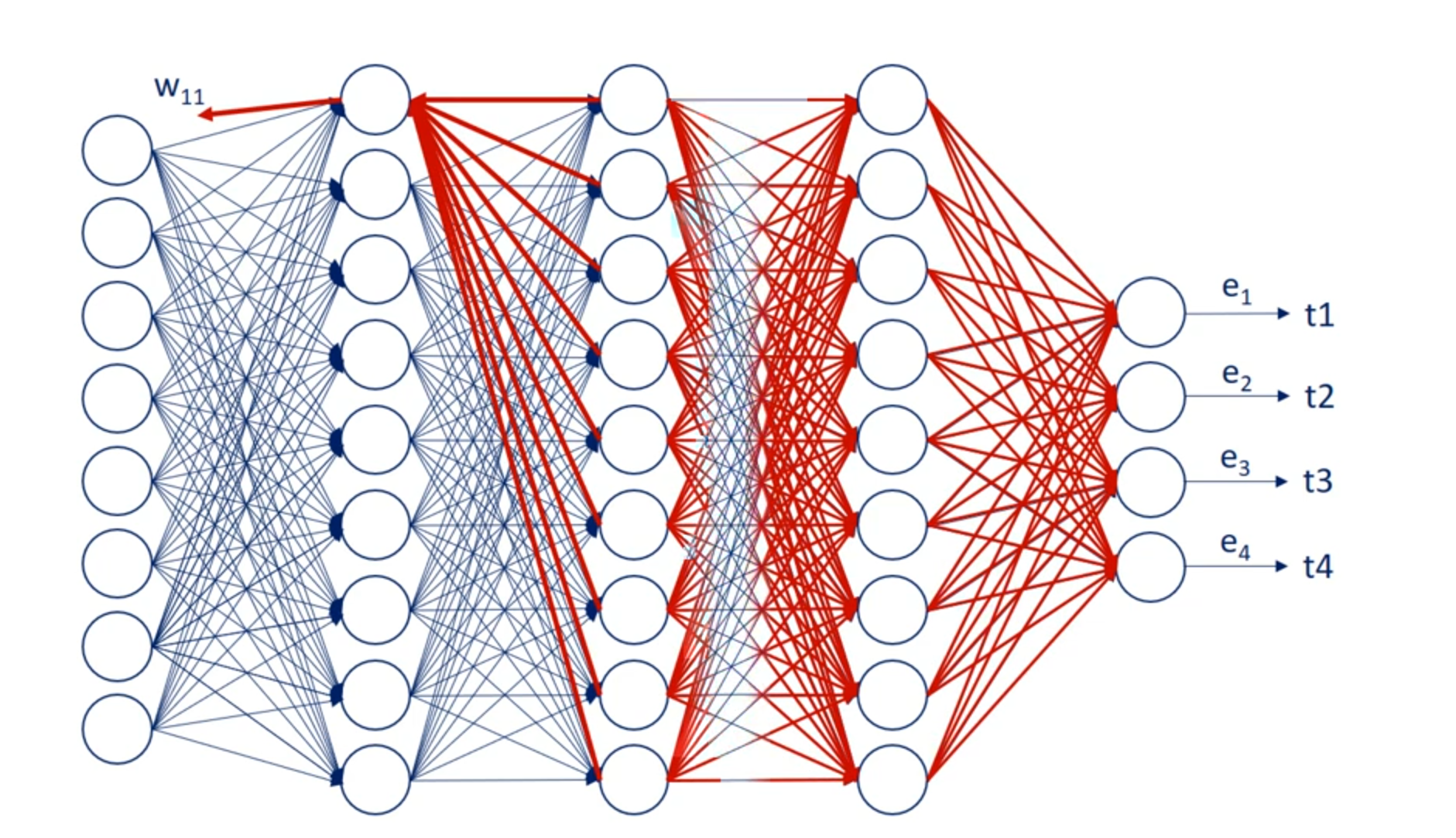

Now the way academics solve this issue is through errors. The main point is that we can trace the contribution of each unit hit or not to the error of the output.

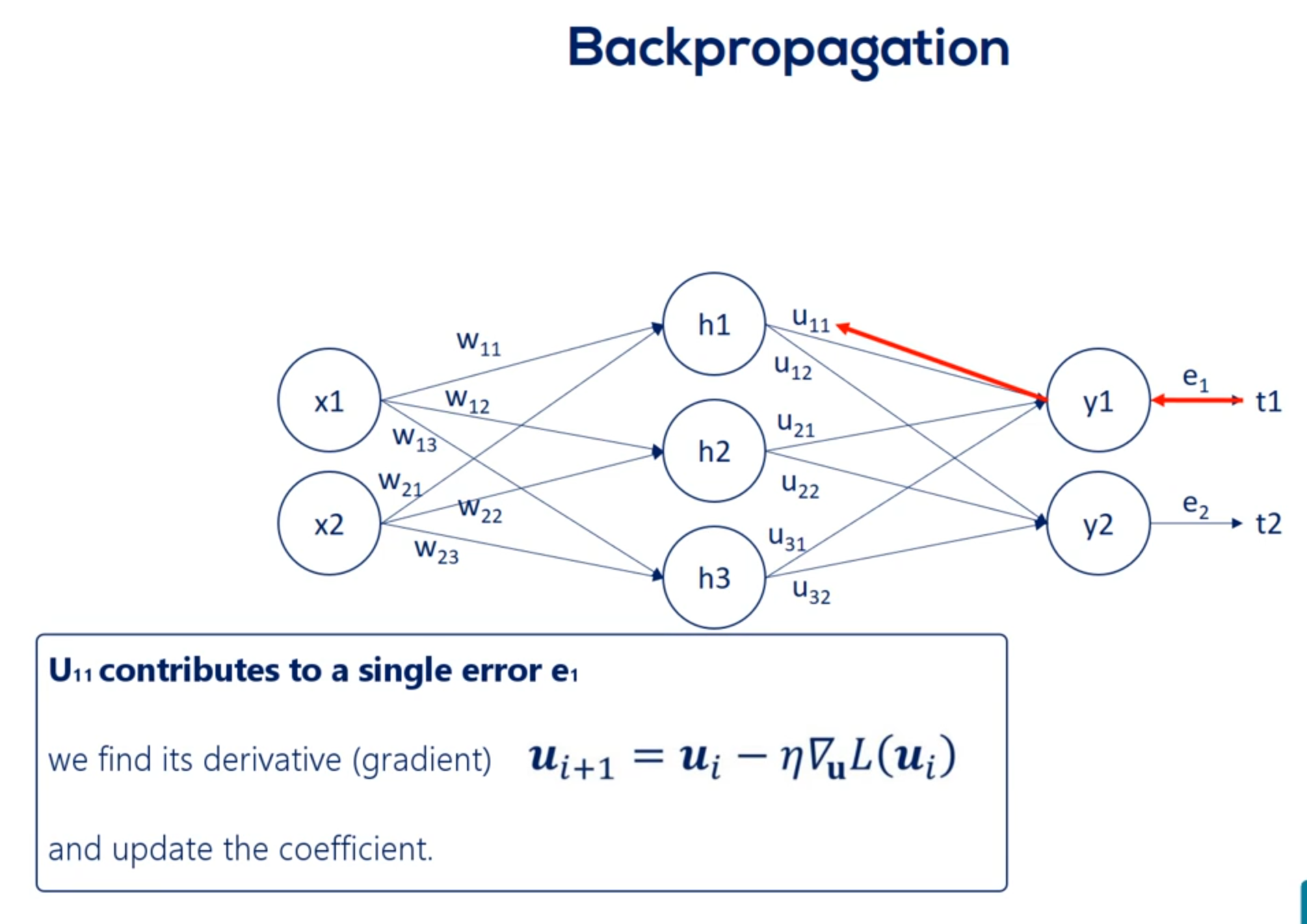

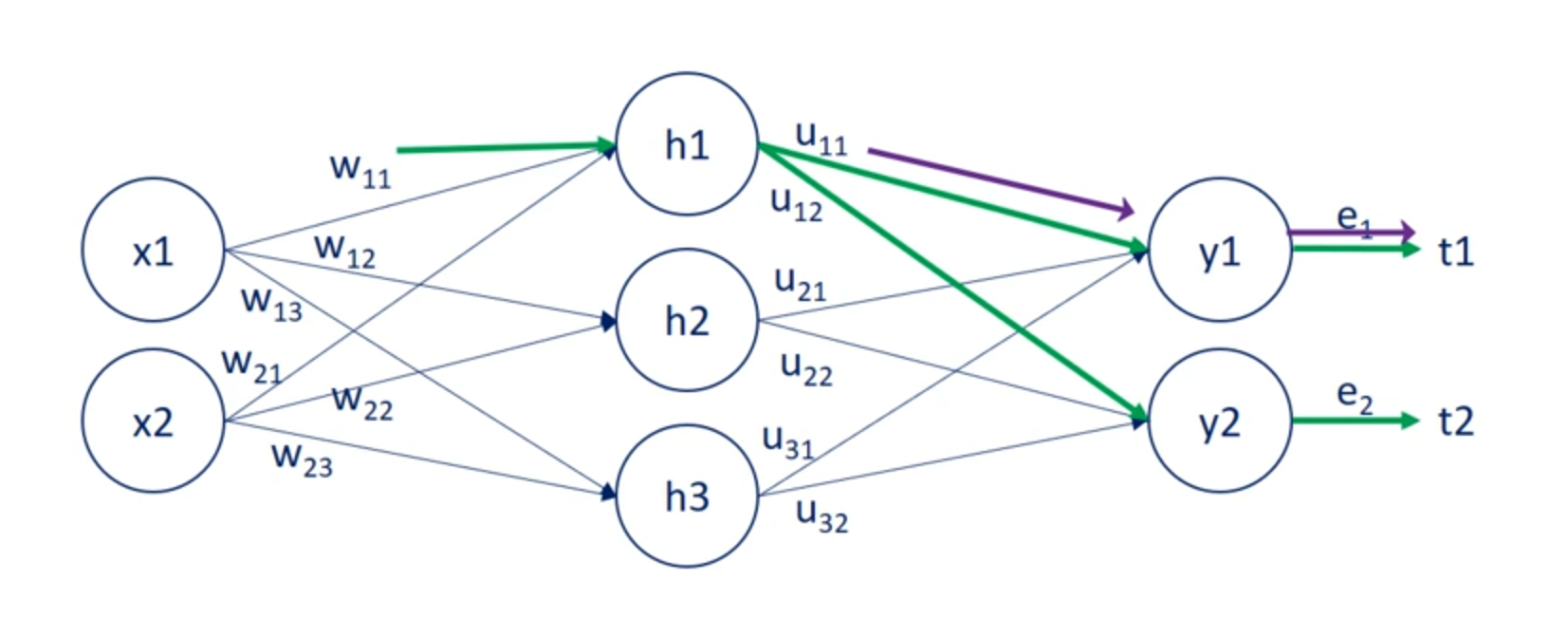

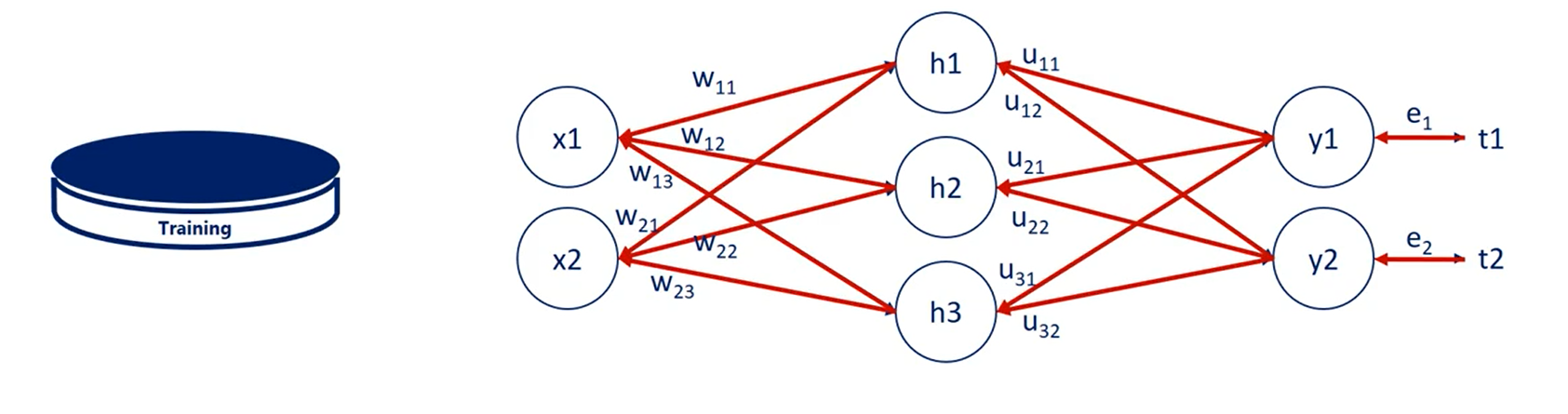

OK great let's look at the schematic illustration of back propagation shown here our net is quite simple.

It has a single hidden layer.

Each node is labeled.

So we have inputs x 1 and x 2 hidden layer units output layer units. Why one in y2 two. And finally the targets T1 and T2.

The weights are w 1 1 w 1 2 w 1 3 w 2 1 w 2 2 and W 2 3 for the first part of the net. For the second part we named them you 1 1 you 1 2 you 2 1 you 2 2 3 1 and you 3 2. So we can differentiate between the two types of weights.

That's very important.

We know the error associated with Y 1 and y to as it depends on known targets. So let's call the two errors. E 1 and 2.

Based on them we can adjust the weights labeled with you. Each U contributes to a single error.

For example you 1 1 contributes to e 1.

Then we find its derivative and update the coefficient. Nothing new here.

Now let's examine w 1 1 . Helped us predict h1 But then we needed h1 to calculate y1 in y2. Thus it played a role in determining both errors. e1 and e2.

So while u11 contributes to a single error w11 contributes to both errors. Therefore it's adjustment rule must be different.

The solution to this problem is to take the errors and back propagate them through the net using the weights.

Knowing the u weights we can measure the contribution of each hit in unit to the respective errors.

Then once we found out the contribution of each hit in unit to the respective errors we can update the W weights.

So essentially through back propagation the algorithm identifies which weights lead to which errors then it adjusts the weights that have a bigger contribution to the errors by more than the weights with a smaller contribution.

A big problem arises when we must also consider the activation functions. They introduce additional complexity to this process.

Linear your contributions are easy but non-linear ones are tougher. Emergent back propagating in our introductory net. Once you understand it, it seems very simple.

While pictorially straightforward mathematically it is rough to say the least.

That is why back propagation is one of the biggest challenges for the speed of an algorithm.

WARNING

Continue on Chapter 8 of the desktop course

Practical lessons on chapters 12 and 13

# Overfitting

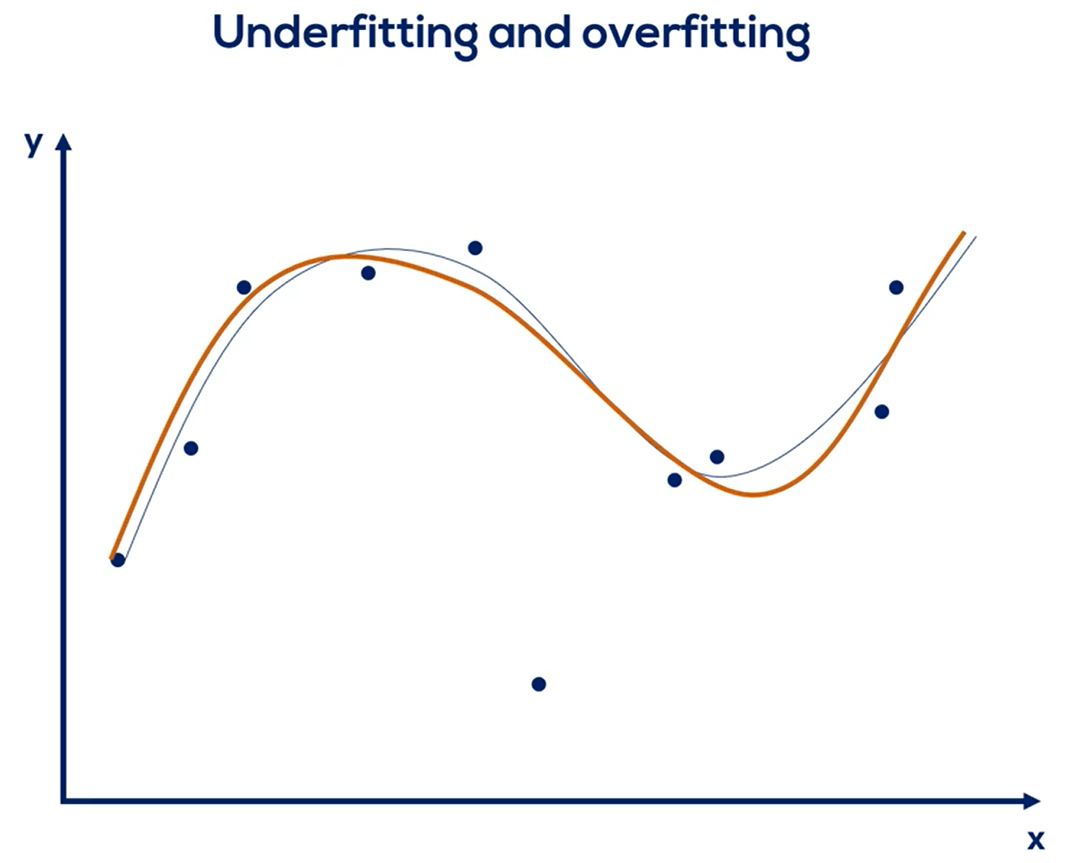

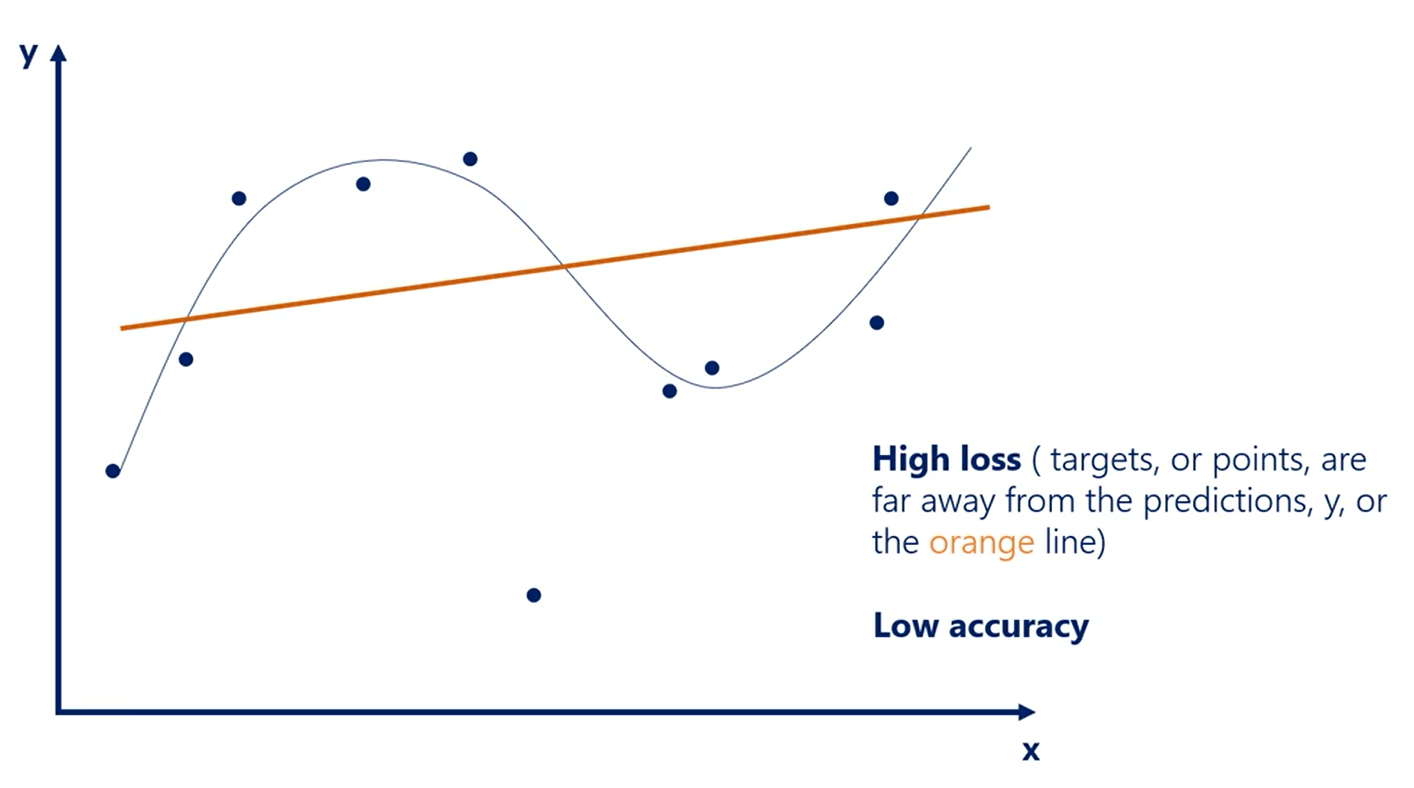

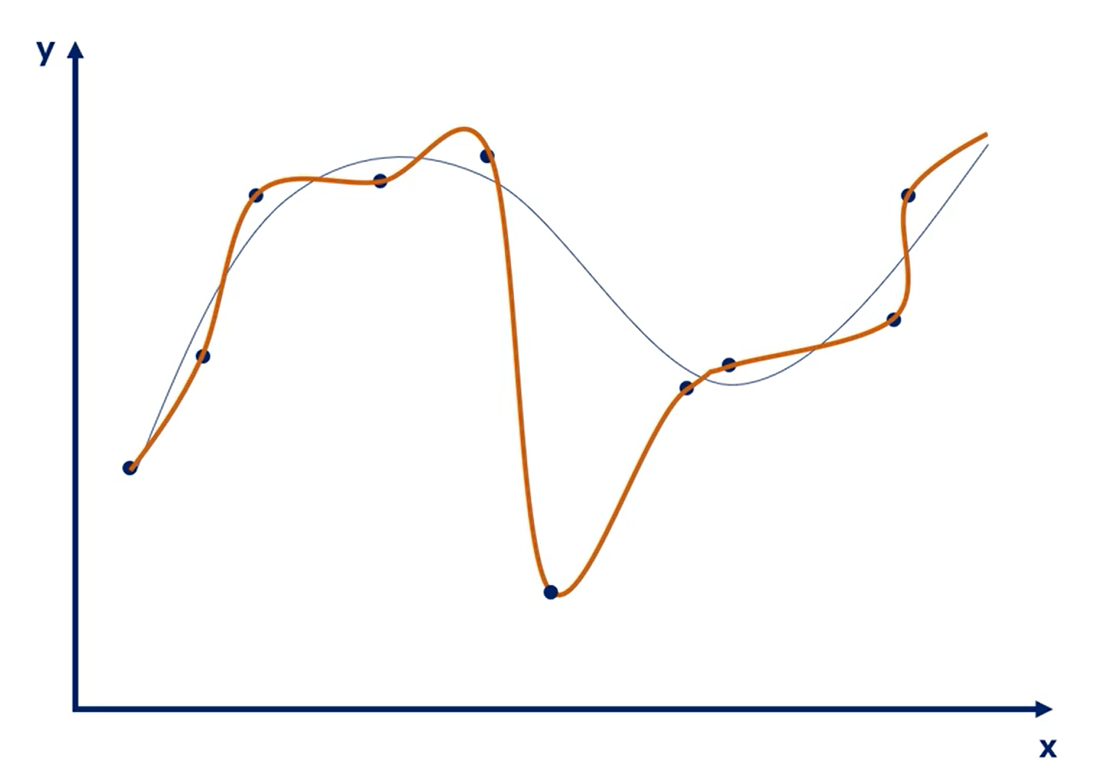

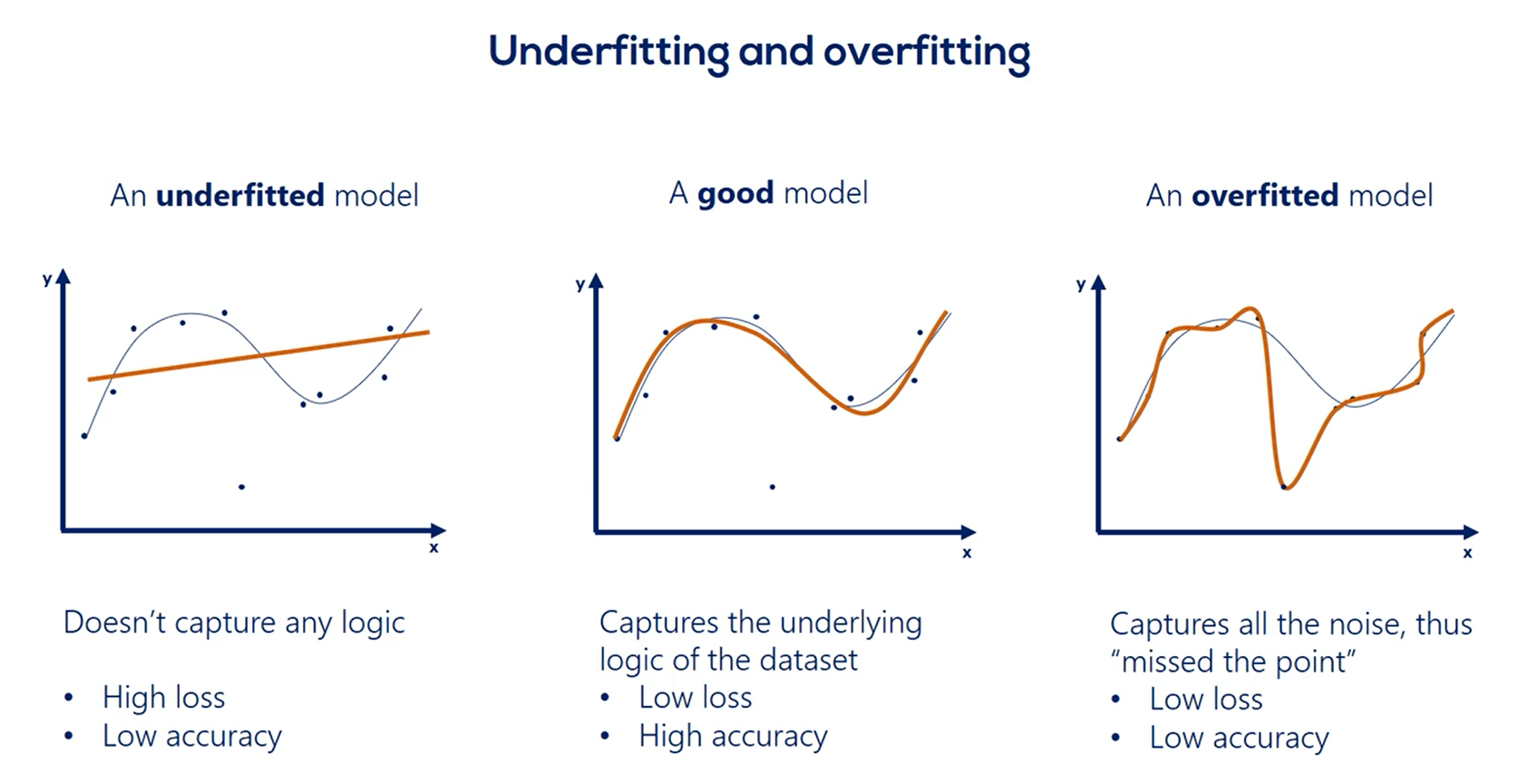

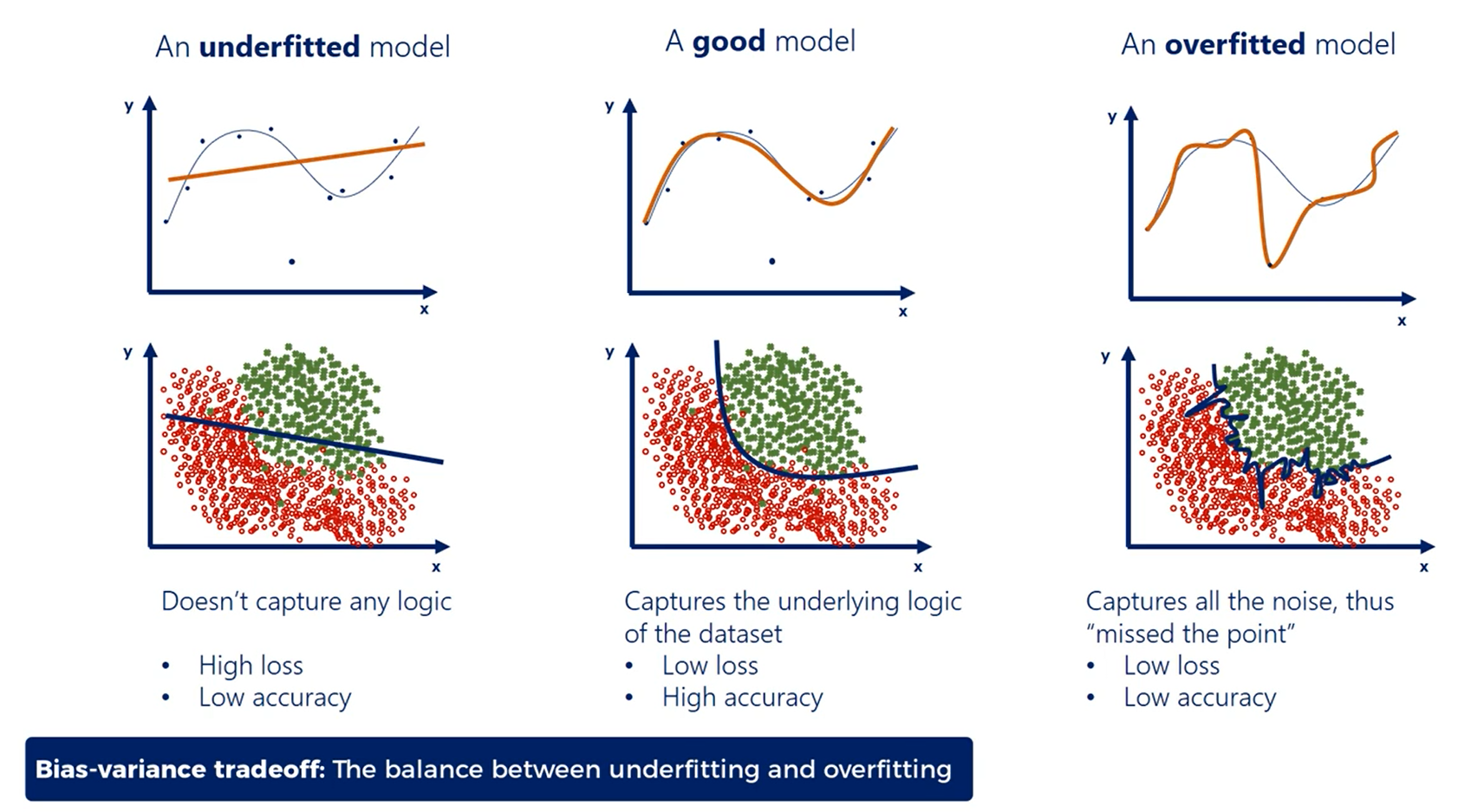

| Underfitting | Overfitting |

|---|---|

| The model has not capture the underlying logic of the data | Our training has focused on the particular training set so much, it has "missed the point" |

A good model:

Underfitted Model:

Overfitted Model:

Comparison:

# Overfitting - A classification example

# Preventing Overfitting

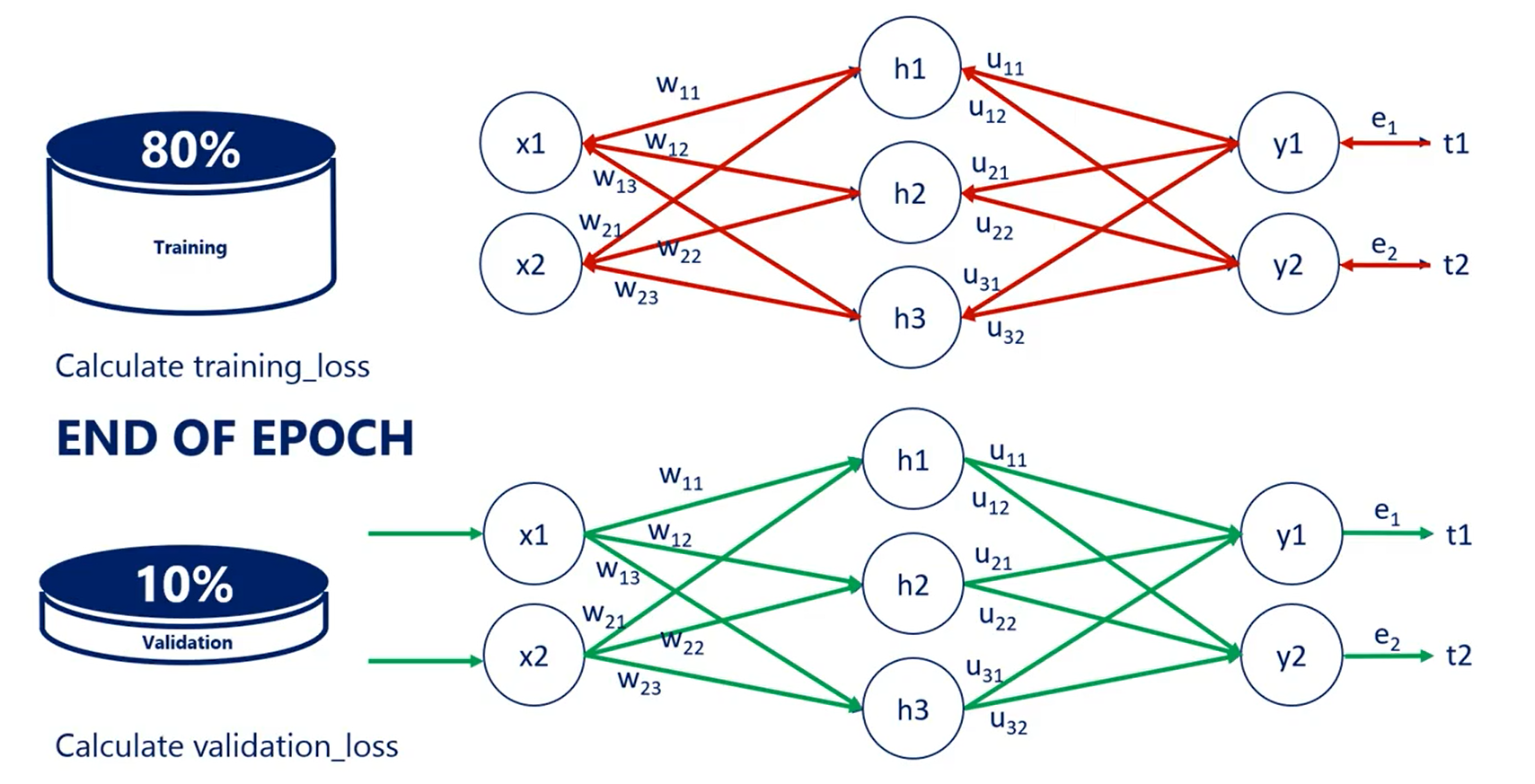

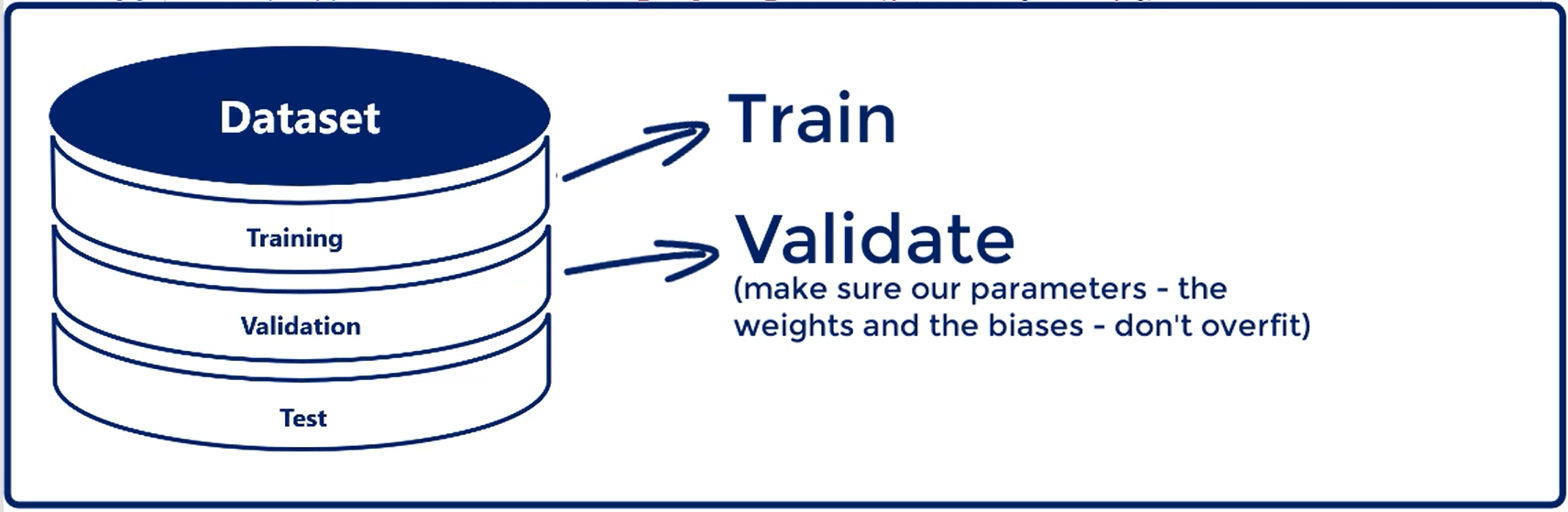

# Training and Validation

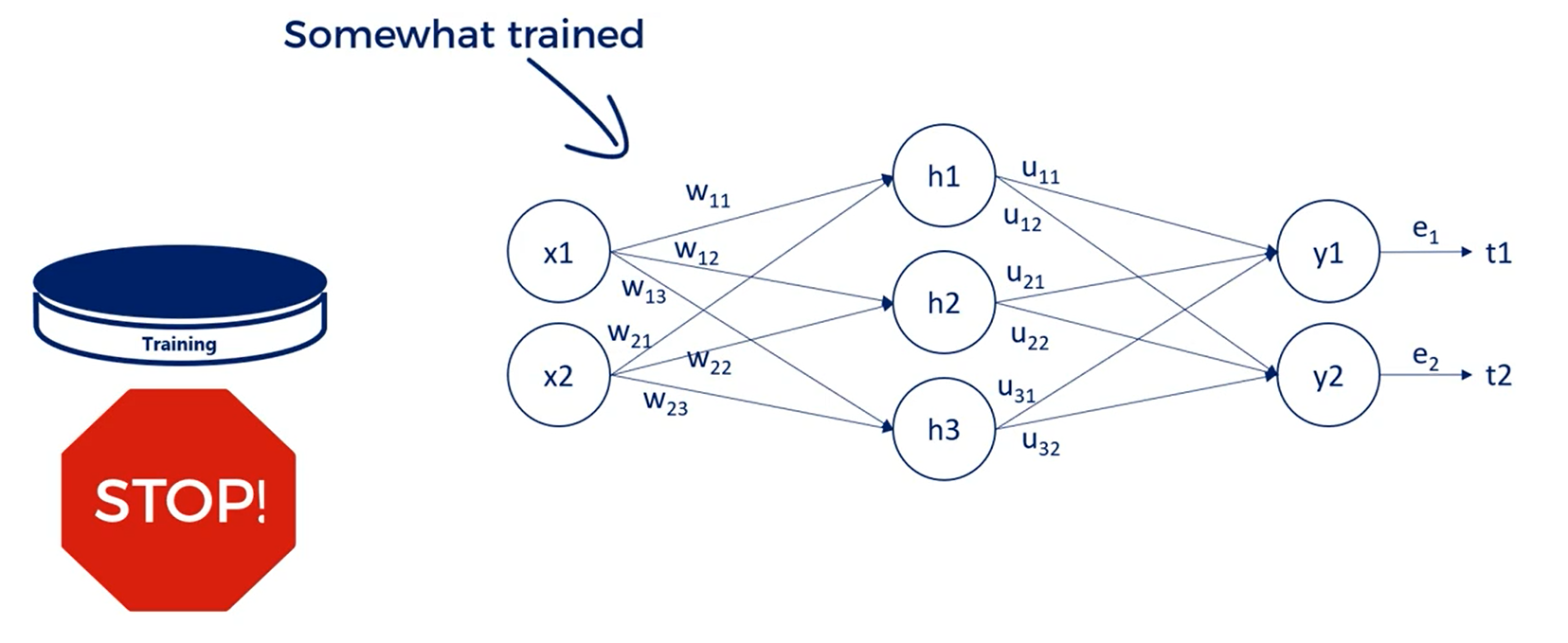

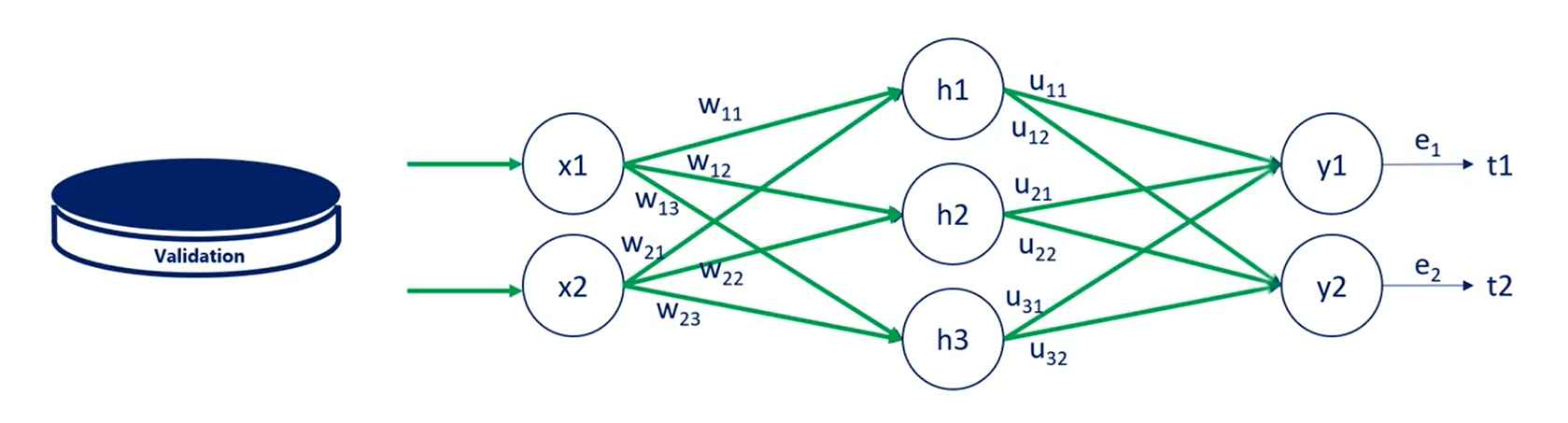

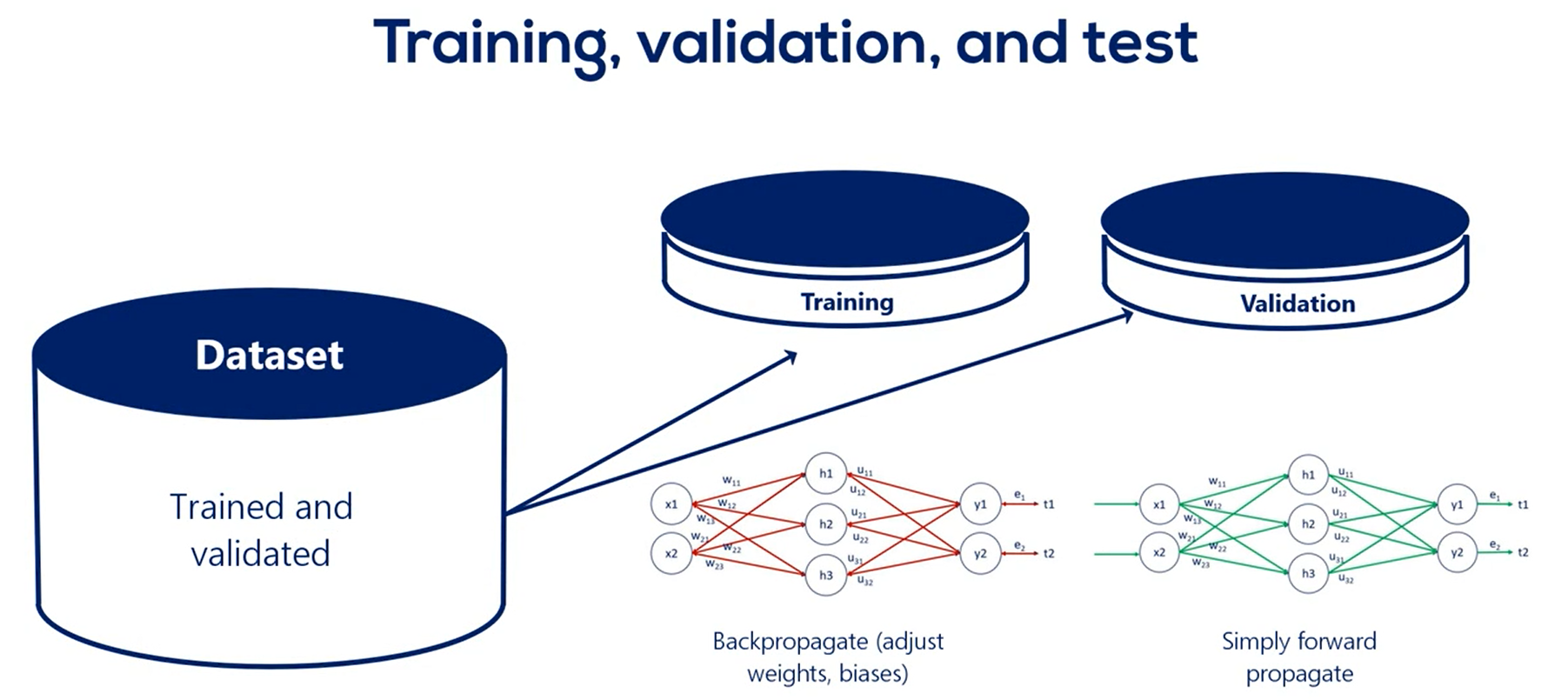

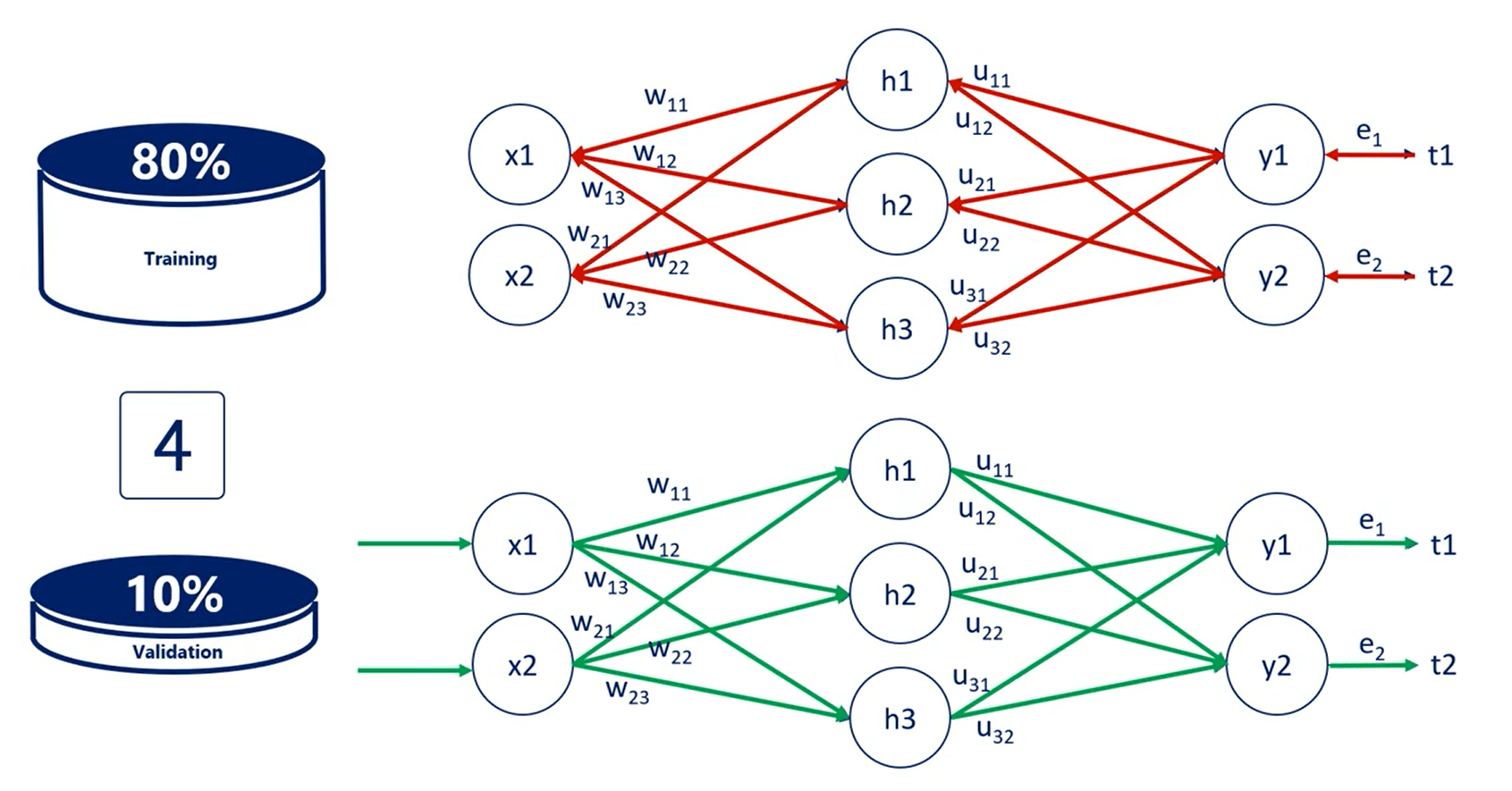

All the training is done on the training set.

In other words we update the weights for the training so only every once in a while we stop training for a bit. At this point the model is somewhat trained.

What we do next is take the model and apply it to the validation data set. This time we just run it without updating the weights so we only propagate forward not backward.

In other words we just calculate its loss function on average the last function calculated for the validation set should be the same as the one of the training set.

This is logical as the training and validation sets were extracted from the same initial dataset containing the same perceived dependencies.

Normally, we would perform this operation many times in the process of creating a good machine learning algorithm.

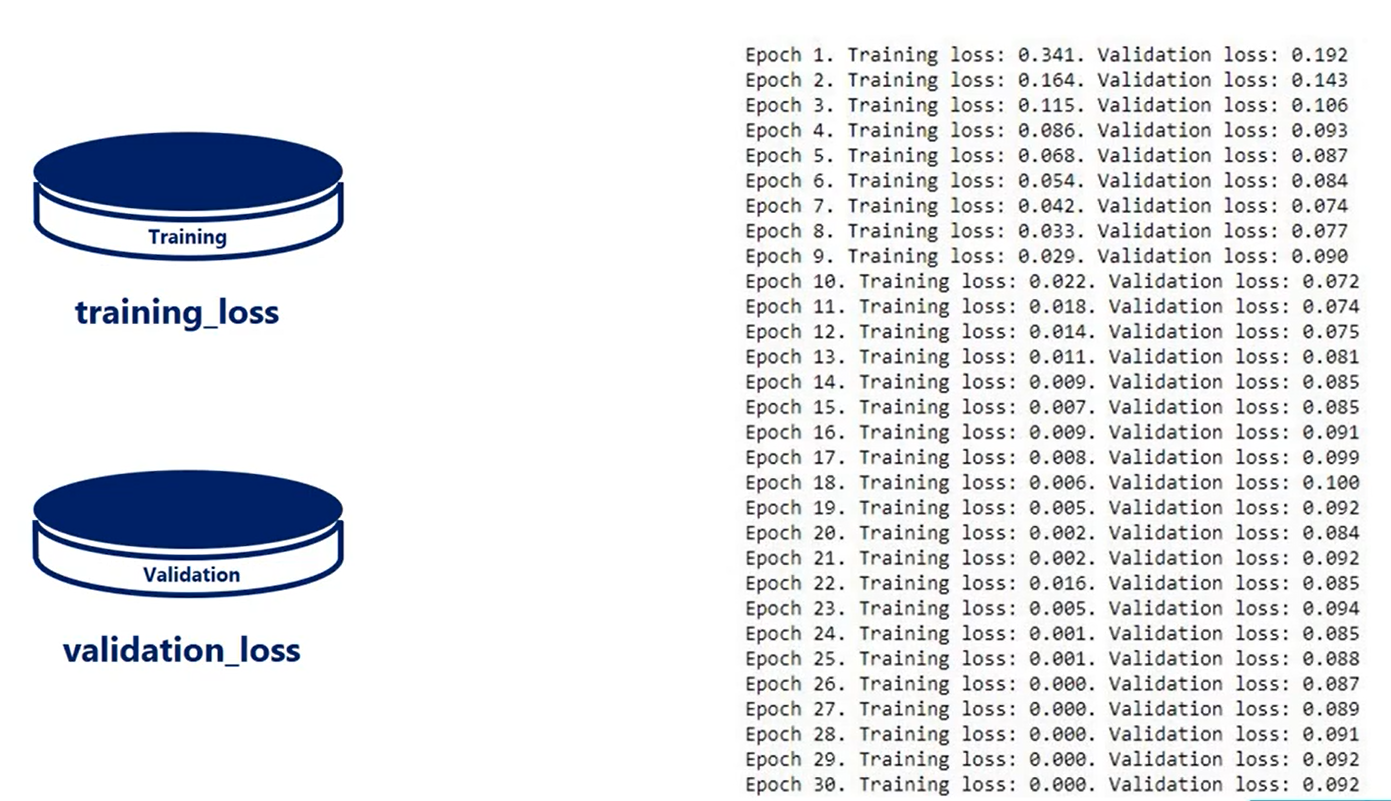

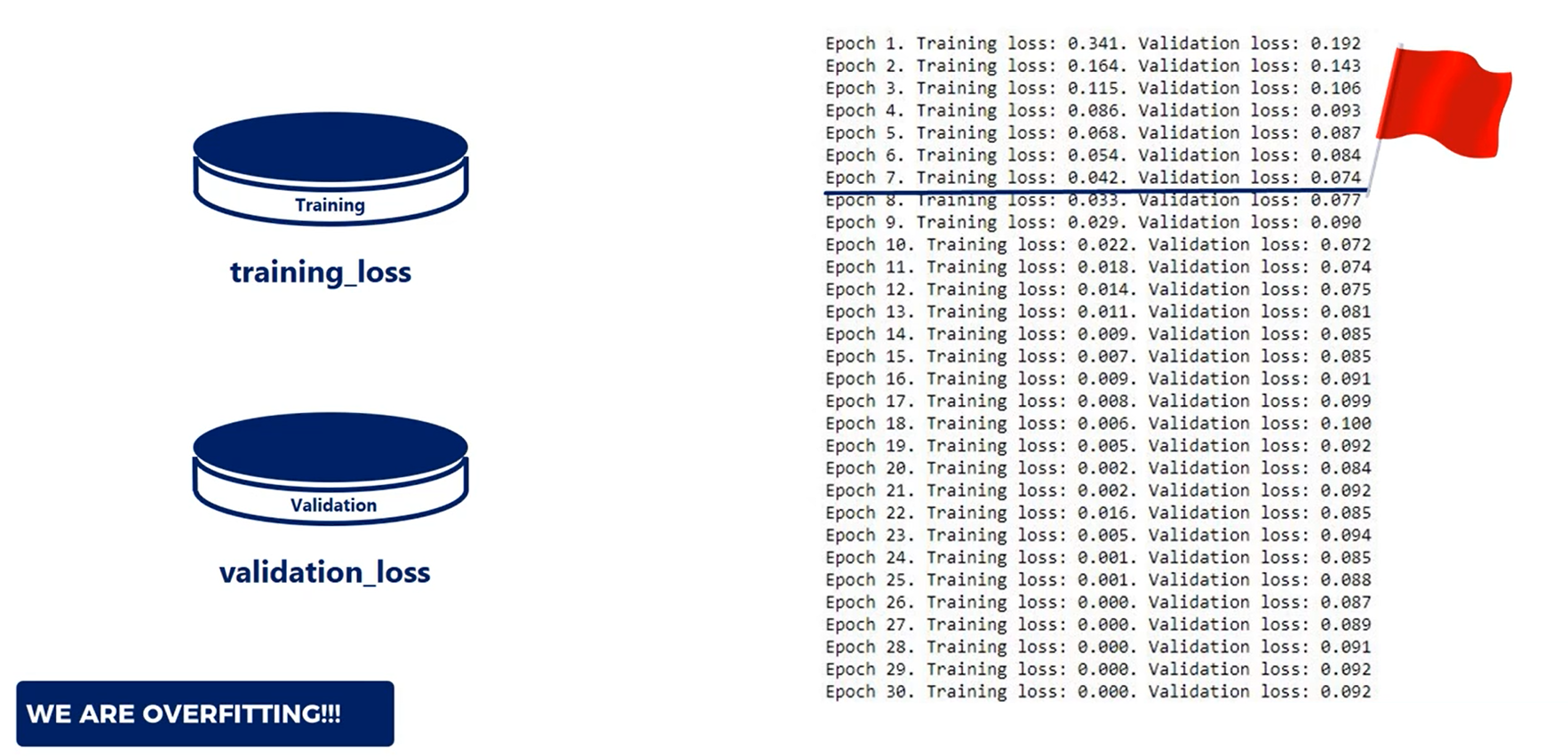

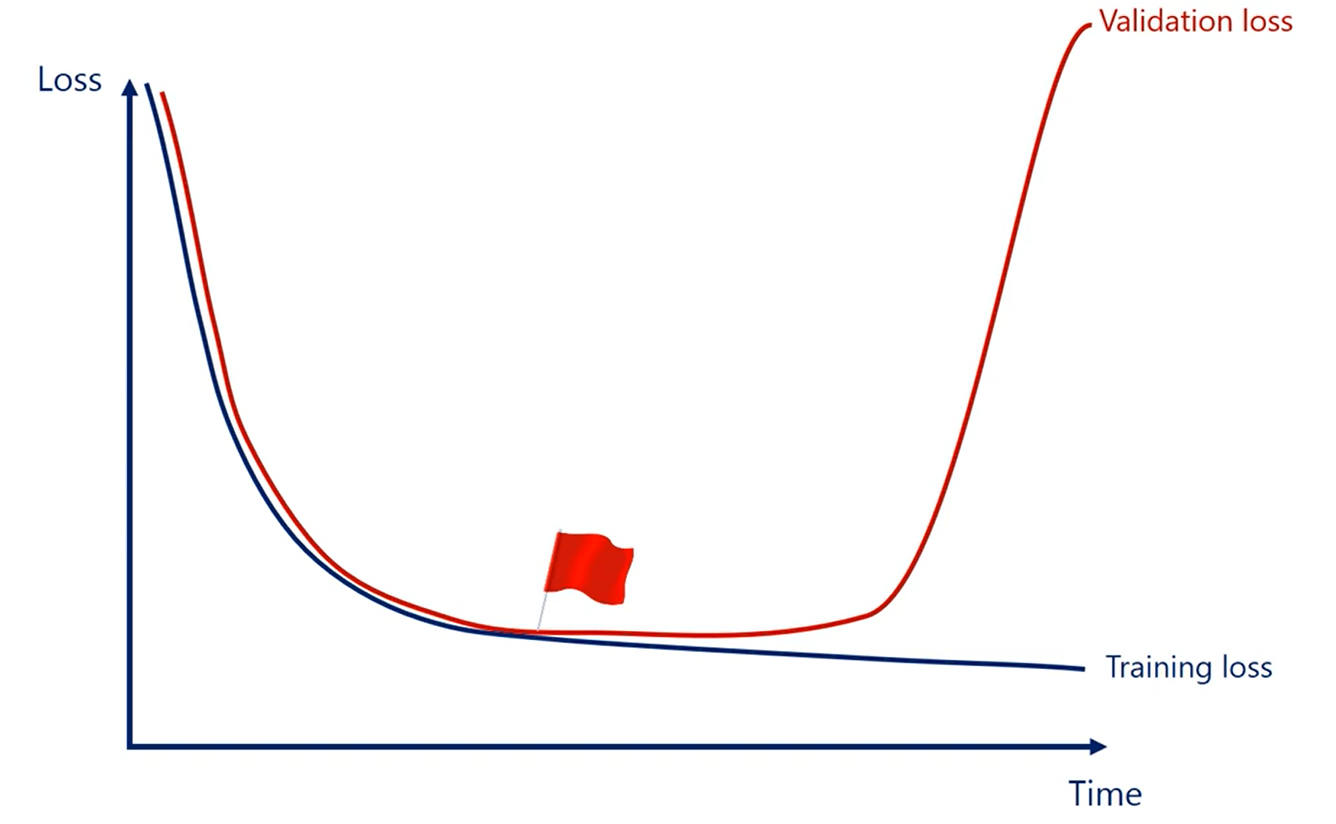

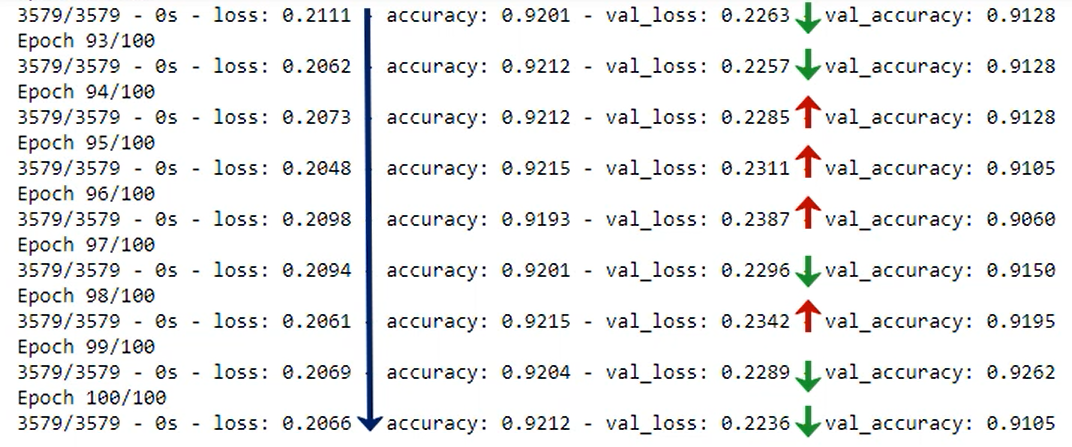

The two last functions we calculate are referred to as training loss and validation loss and because the data in the training is trained using the gradient descent, each subsequent loss will be lower or equal to the previous one. That's how gradient descent works by definition, so we are sure that treating loss is being minimized.

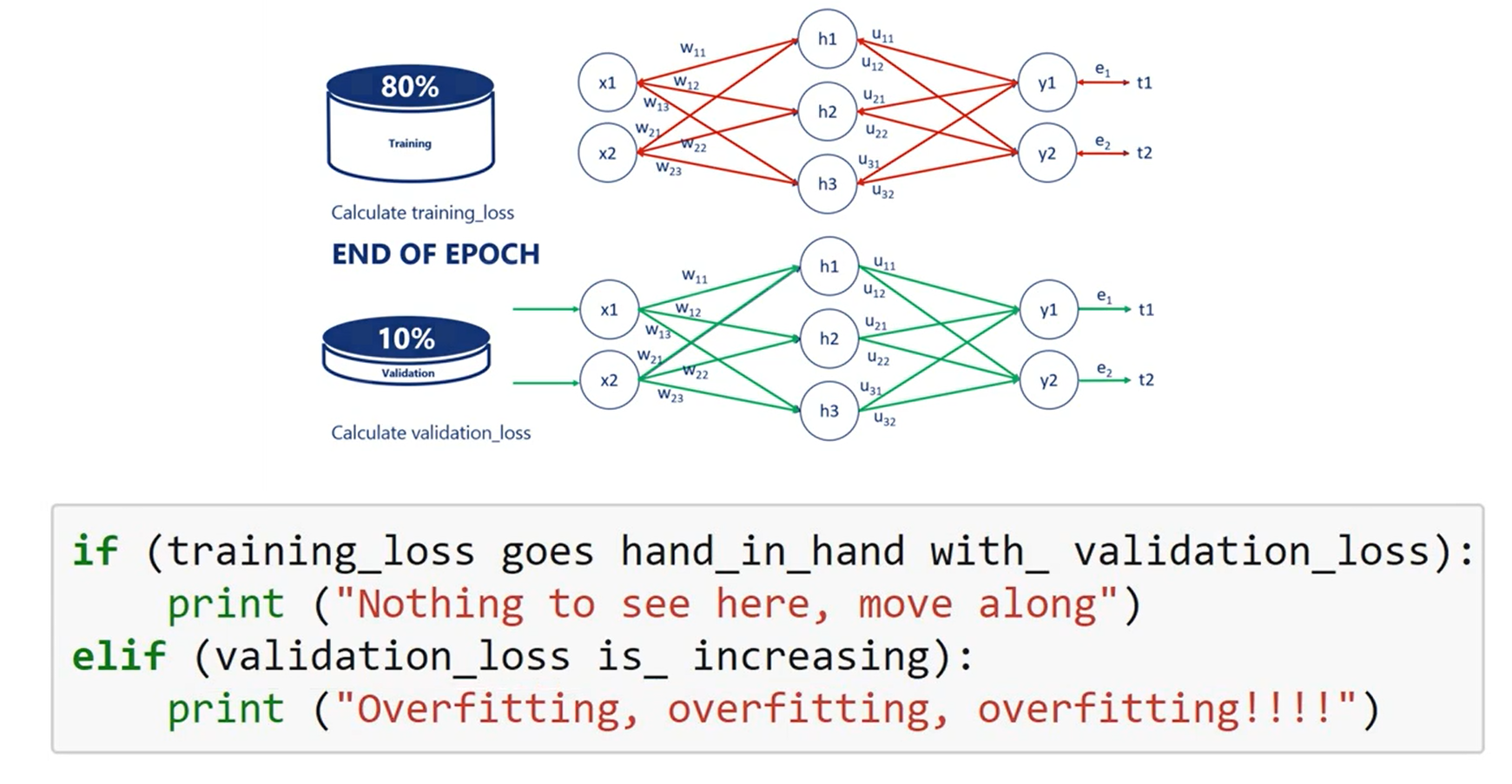

That's where the validation loss comes in play at some point the validation loss could start increasing. That's a red flag. We are overfitting we are getting better at predicting the training set but we are moving away from the overall logic data. At this point we should stop training the model.

WARNING

It is extremely important that the model is not trained on validation samples.

This will eliminate the whole purpose of the above mentioned process.

The training set and the validation set should be separate without overlapping each other.

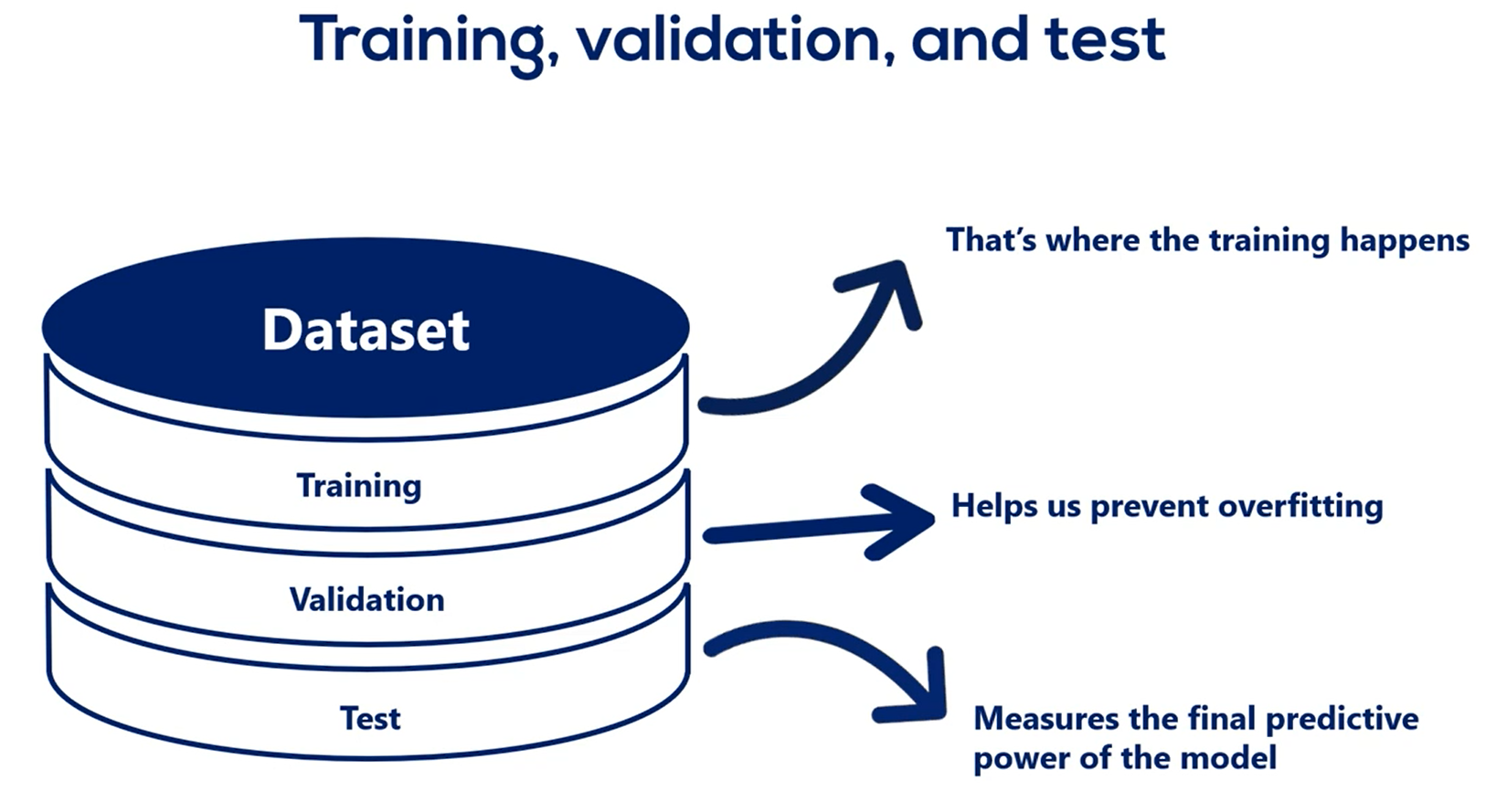

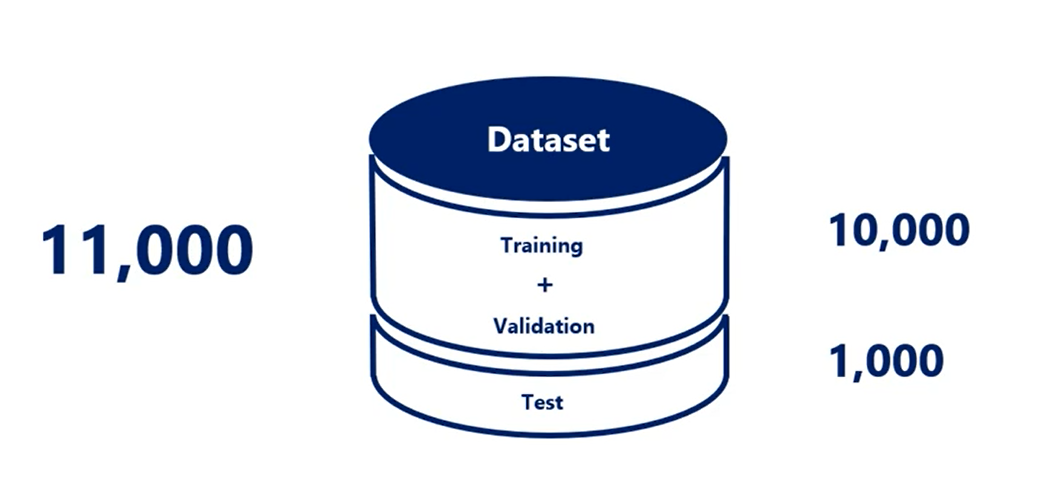

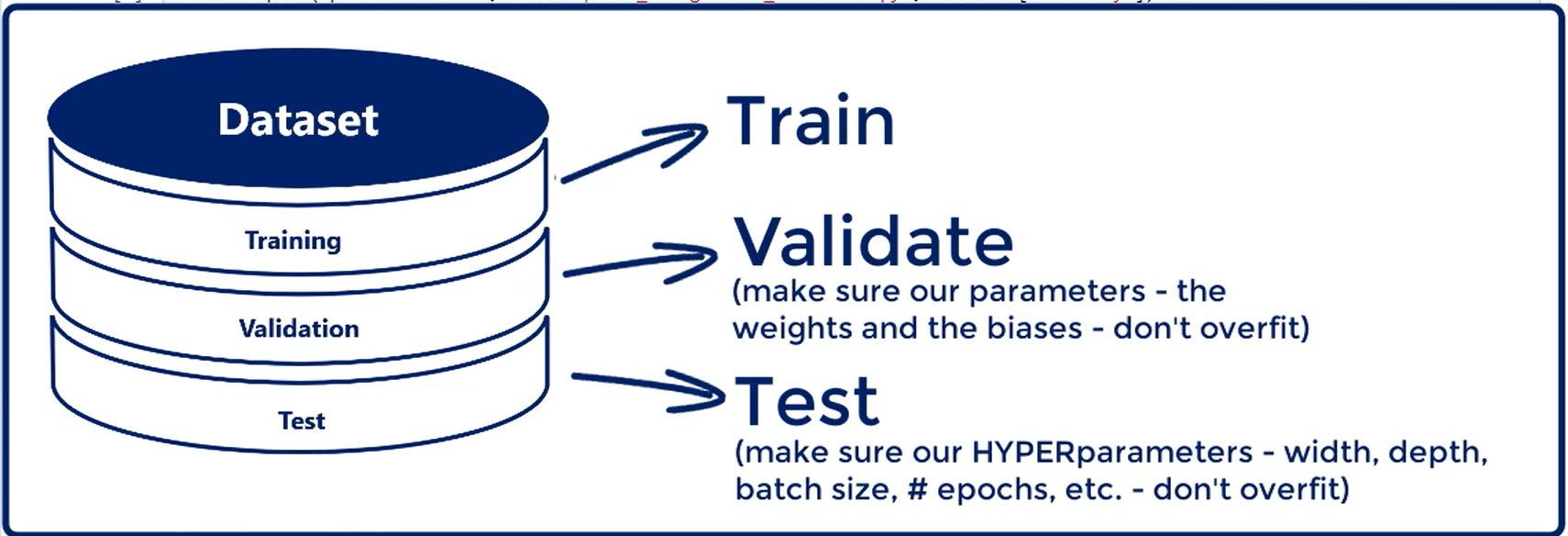

# Training, Validation and Test

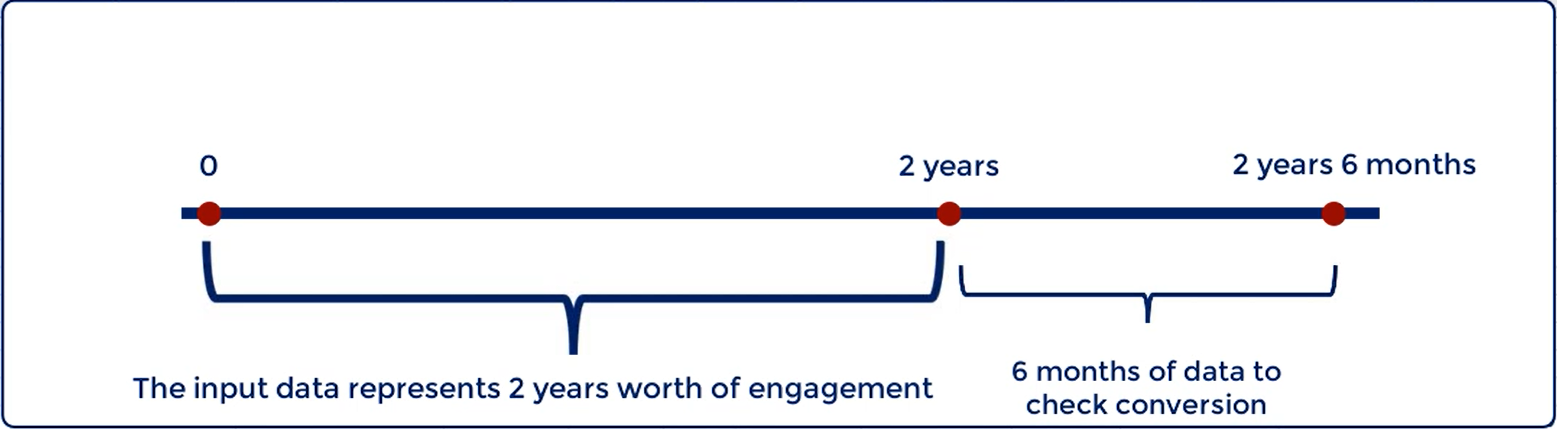

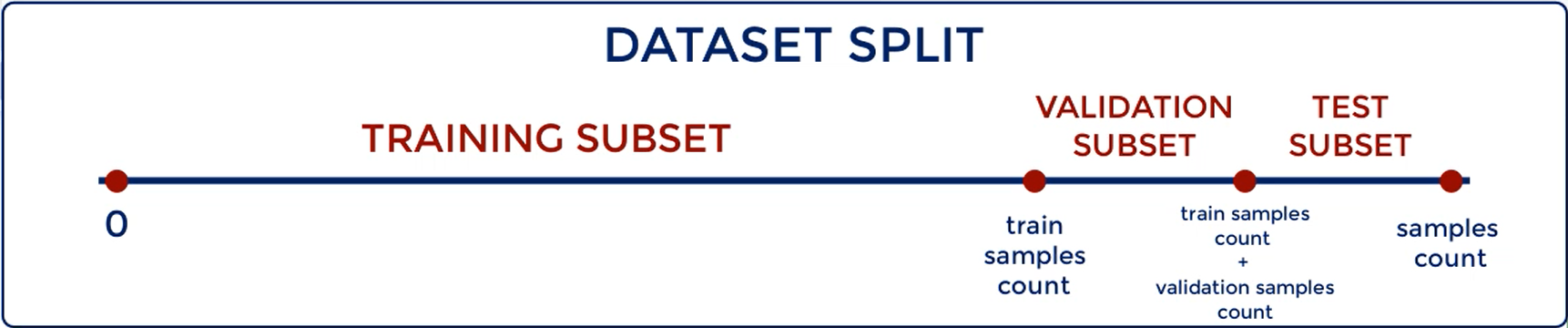

We introduce the validation data set. In addition, we said we want to divide the initial dataset into three parts.

Training, validation and Test.

After we have trimmed the model and validated it, it is time to measure its predictive power. Actually this is done by running the model on a new data It hasn't seen before.

That's equivalent to applying the model in real life.

The accuracy of the prediction we get from this test is the accuracy we would expect the model to have if we deploy in real life, so the test data set is the last step we take.

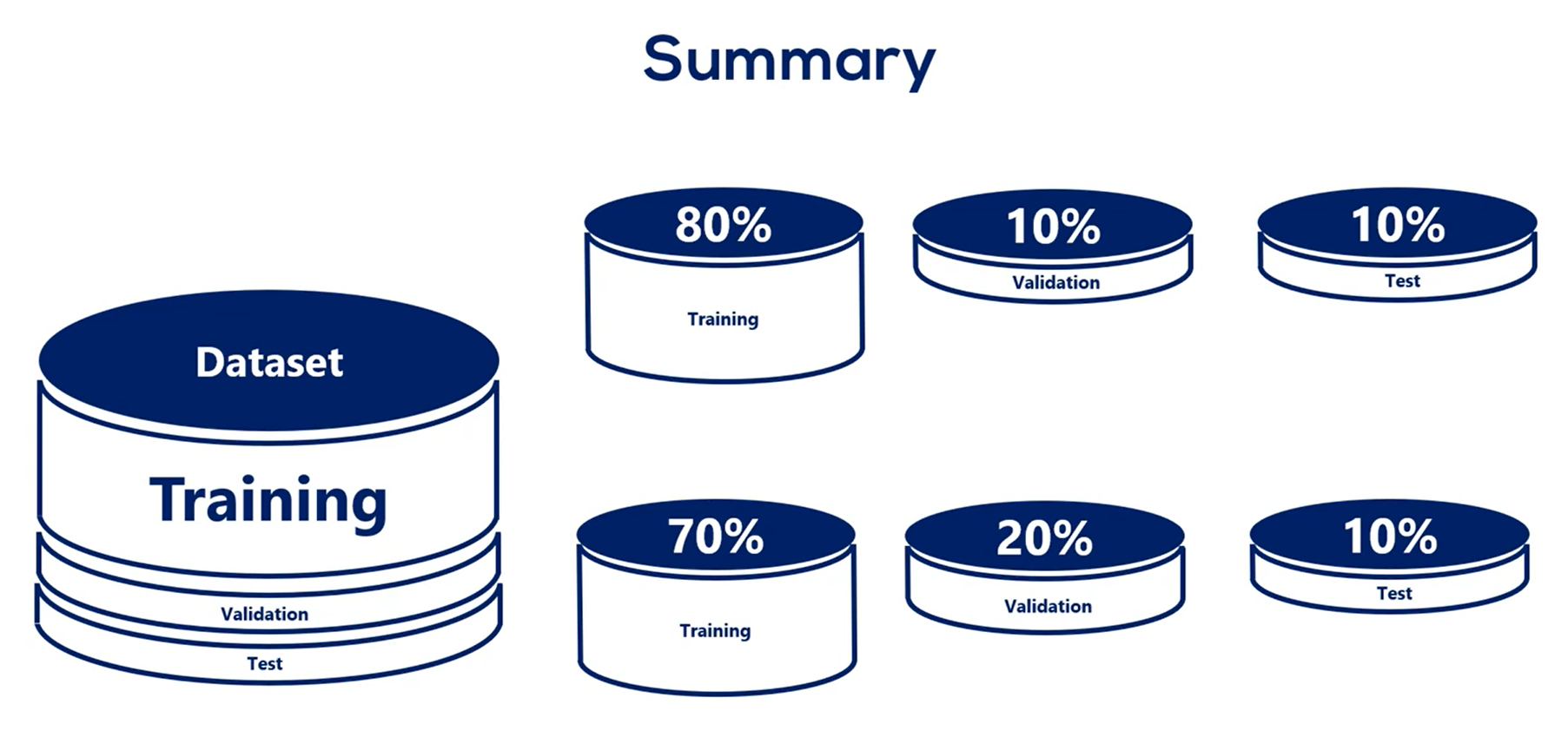

Let's summarize:

First, You get a data set.

Second, you split it into three parts.

There is no set rule but splits like 80 percent training 10 percent validation and 10 percent test or 70 2010 are commonly used.

Obviously the data set where we treat the model should be considerably larger than the other two. You want to devote as much data as possible to the training of the model while having enough samples to validate and test on.

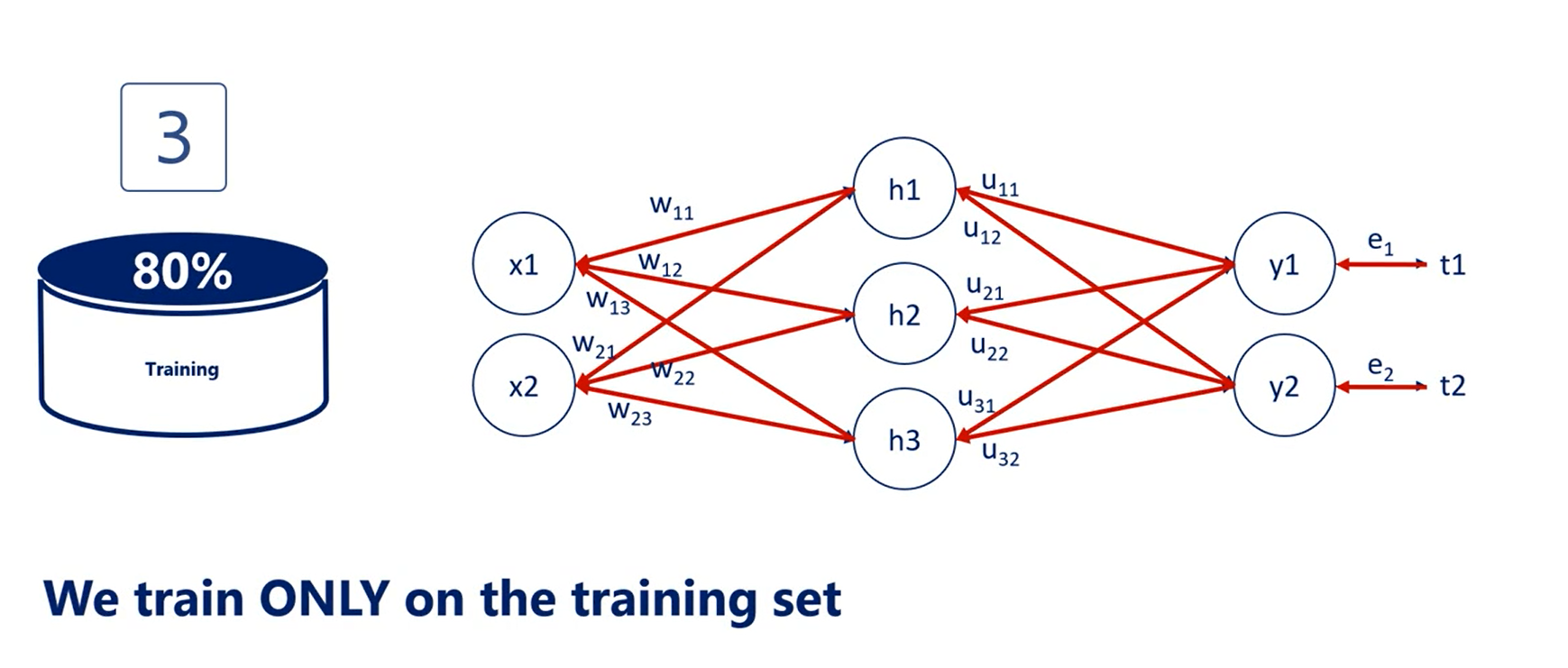

Third, we train the model using the training data set and the training data set only.

Forth, every now and then we validate the model by running it for the validation data set.

Usually we validate the data set for every epoch, every time we adjust all weights and calculate the training loss, we validate.

if the training loss and the validation loss go hand-in-hand we carry on training the model. If the validation loss is increasing we are overfitting, so we should stop.

Fifth and final step.

You test the model with the test data set the accuracy you obtain at this stage is the accuracy of your machine learning algorithm.

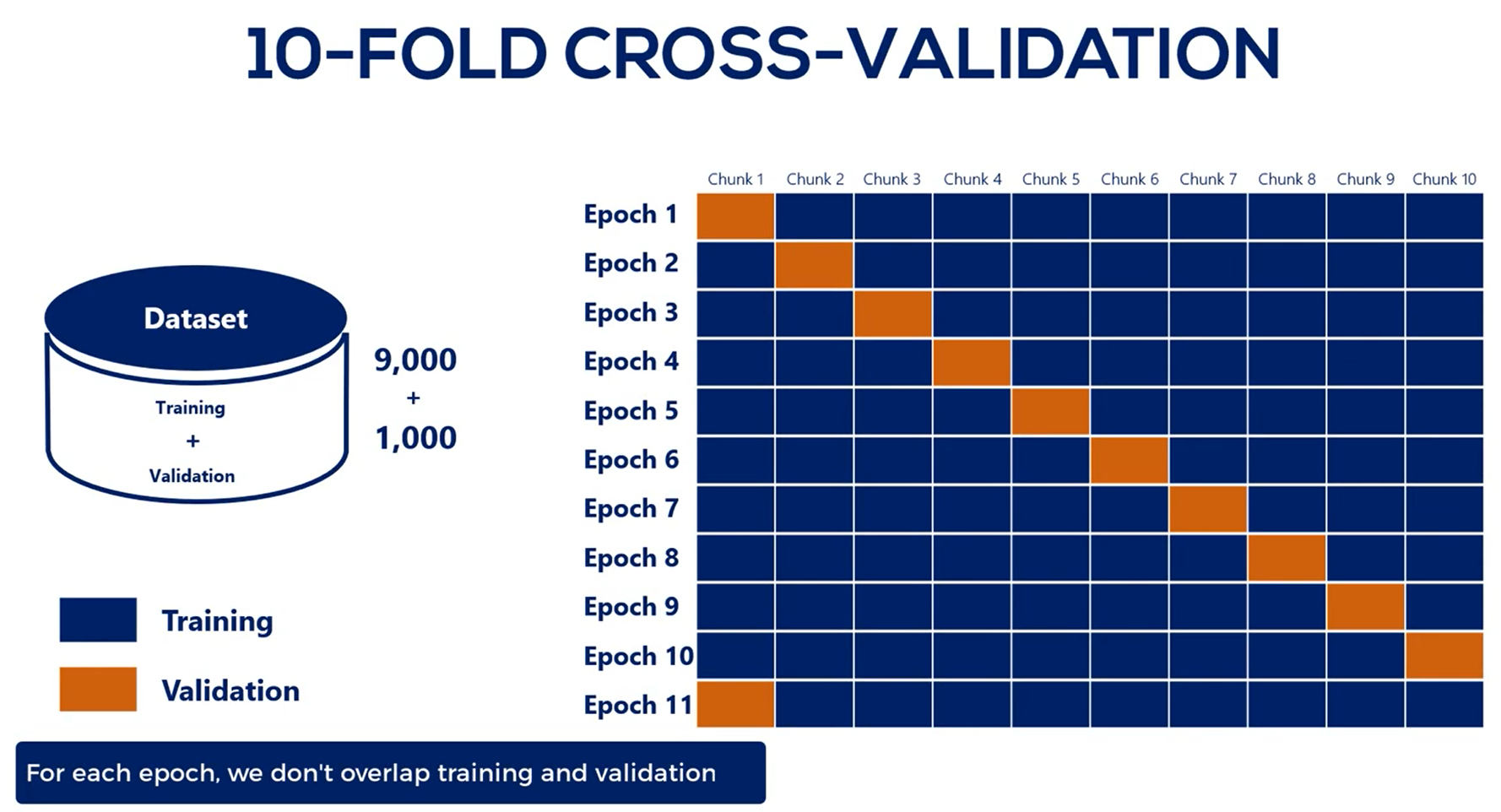

# N-fold cross validation

This is a strategy that resembles the general one but combines the train and validation data sets in a clever way. However, it still requires a test subset.

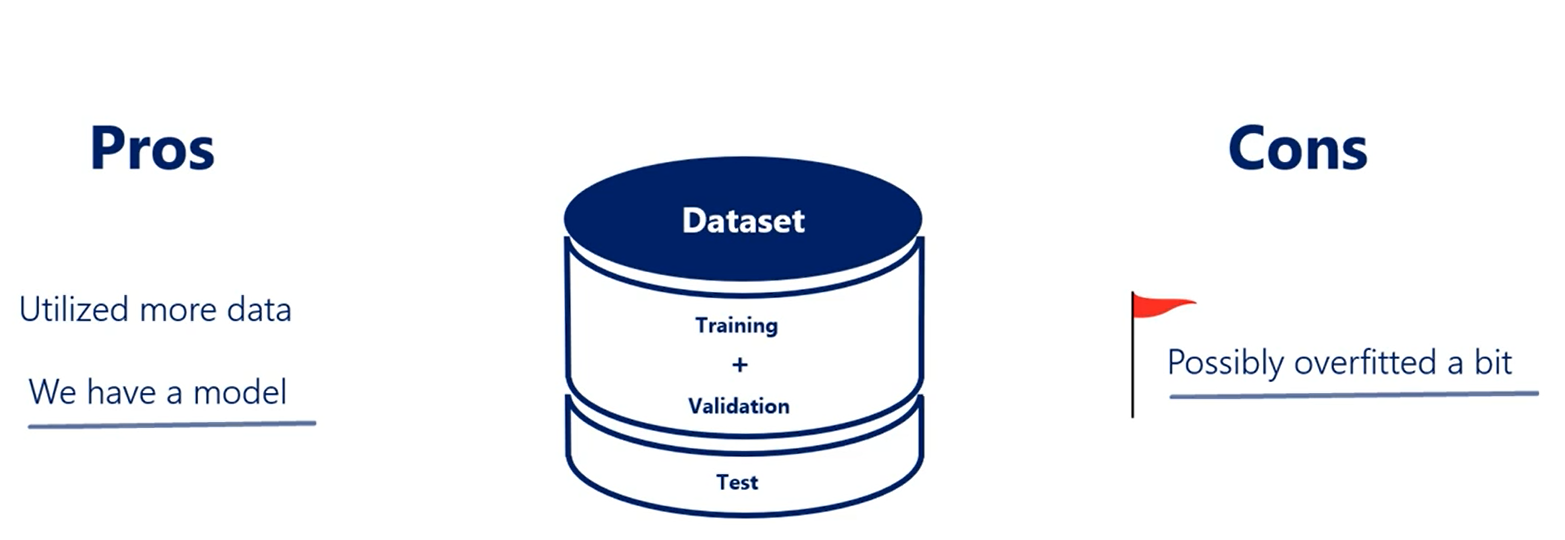

As with all good things this comes at a price we have still trained on the validation set which was not a good idea. It is less likely that the overfitting flag is raised and it is possible that we all were fitted a bit.

The tradeoff is between not having a model or having a model that's a bit over fitted and fold cross-validation solves the scarce data issue but should by no means be used as the norm.

Whenever you can, divide your data into three parts: training, validation and test.

Only if it doesn't manage to learn much because of data scarce it you should try the old cross-validation.

# Early stopping

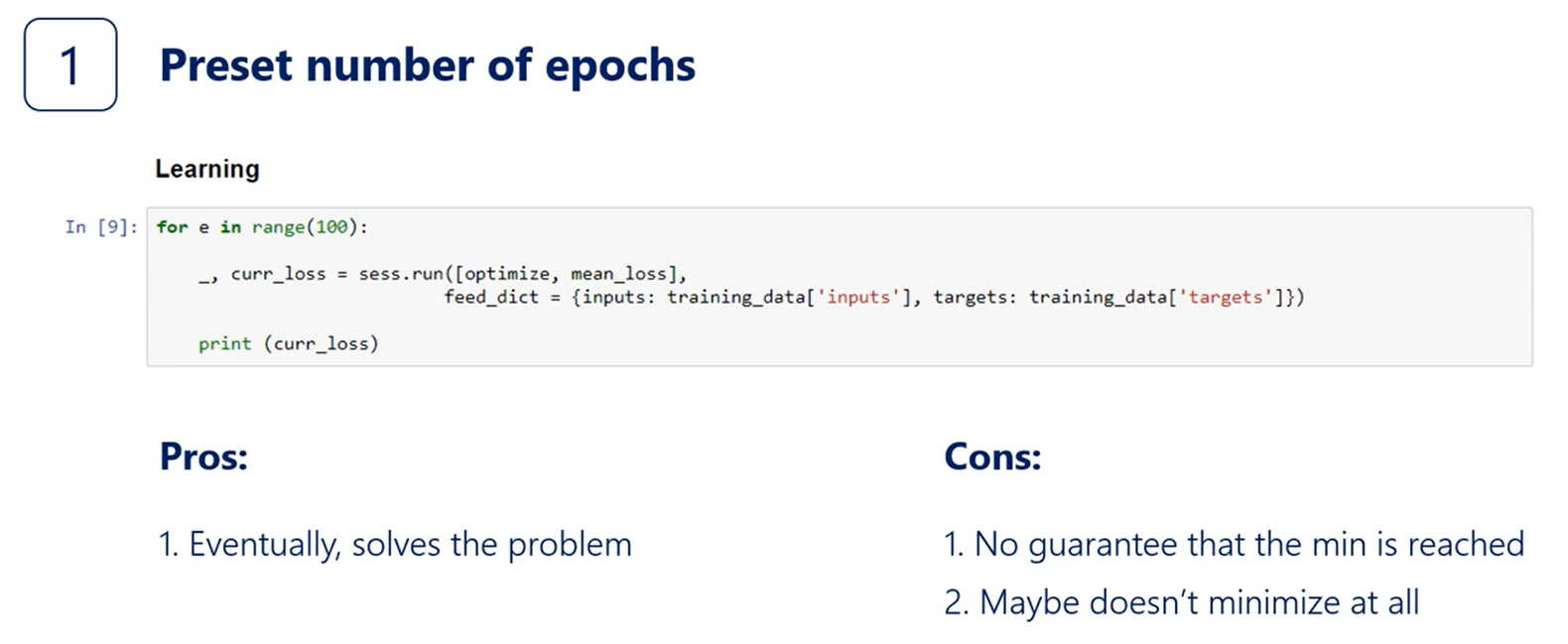

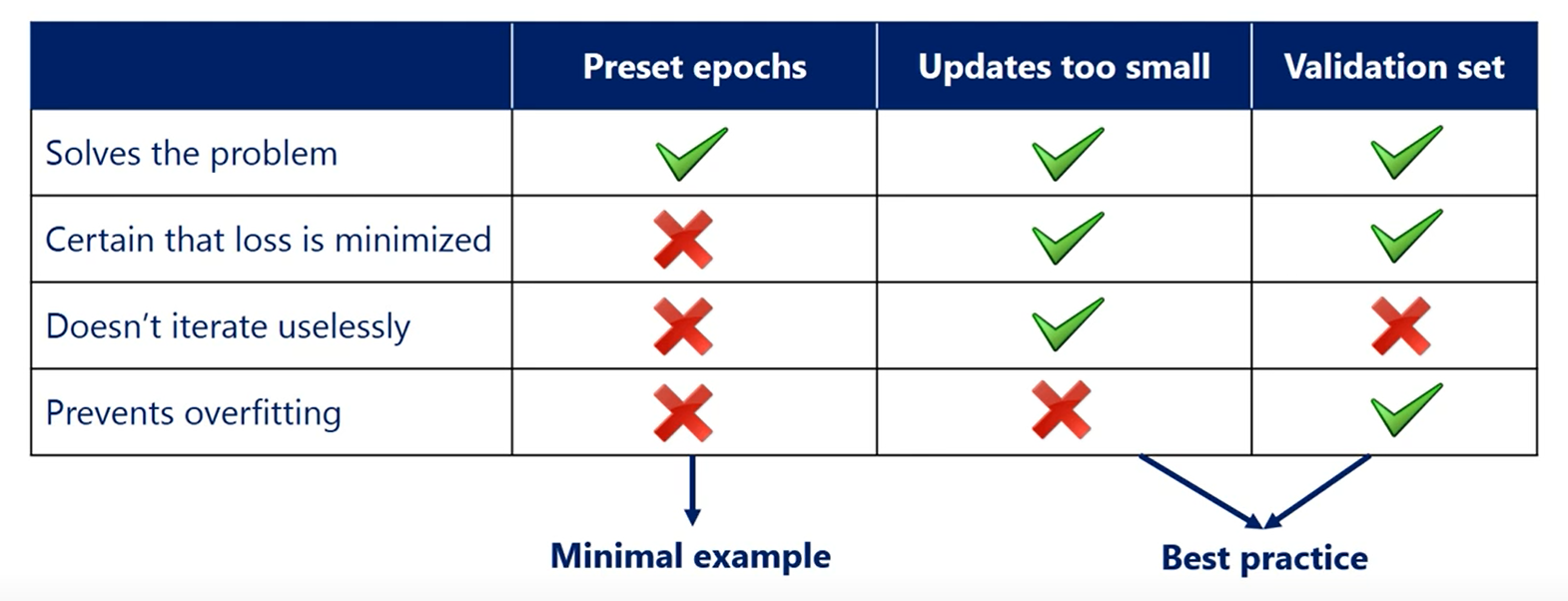

The simplest one is to train for a pre-set number of epochs in the minimal example after the first section.

This gave us no guarantee that the minimum has been reached or passed a high enough learning rate would even cause the loss to divert to infinity.

Still, the problem was so simple that rookie mistakes aside very few epochs would cause a satisfactory result.

However, our machine learning skills have improved so much, and we shouldn't even consider using this naive method.

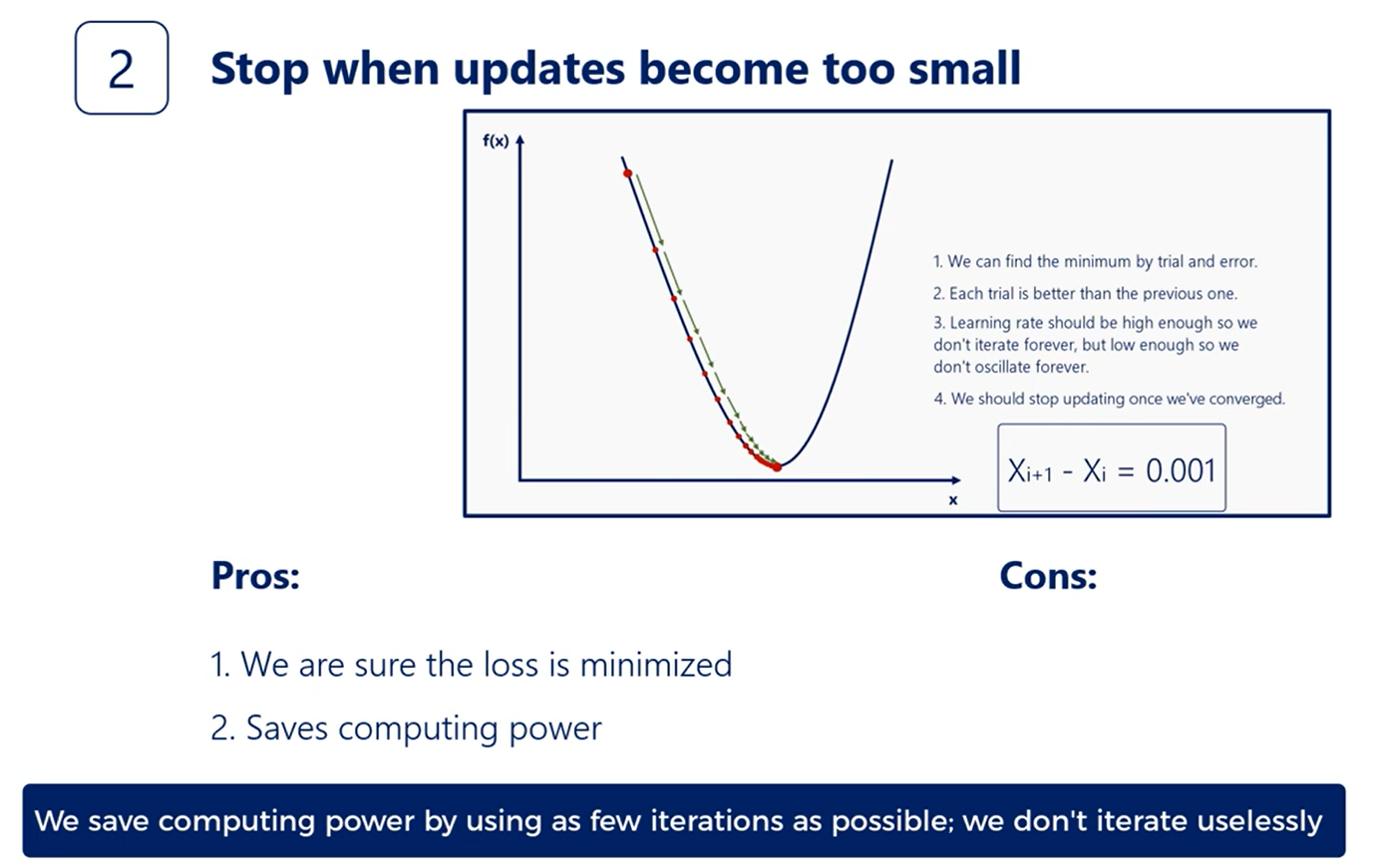

A bit more sophisticated technique is to stop when the last function updates become sufficiently small.

We even had a note on that when we introduce the gradient descent a common rule of thumb is to stop when the relative decrease in the loss function becomes less than 0.001 or 0.1 percent.

This simple rule has two underlying ideas.

First, we are sure we won't stop before we have reached a minimum. That's because of the way gradient descent works. It will descend until a minimum is reached.

The last function will stop changing making the update rule yielding in the same weights. In this way we'll be stuck in the minimum.

The second idea is that we want to save computing power by using as few iterations as possible.

As we said once we have reached the minimum or diverged to infinity we will be stuck there knowing that a gazillion more epochs won't change a thing.

We can just stop there.

This saves us the trouble of iterating uselessly without updating anything.

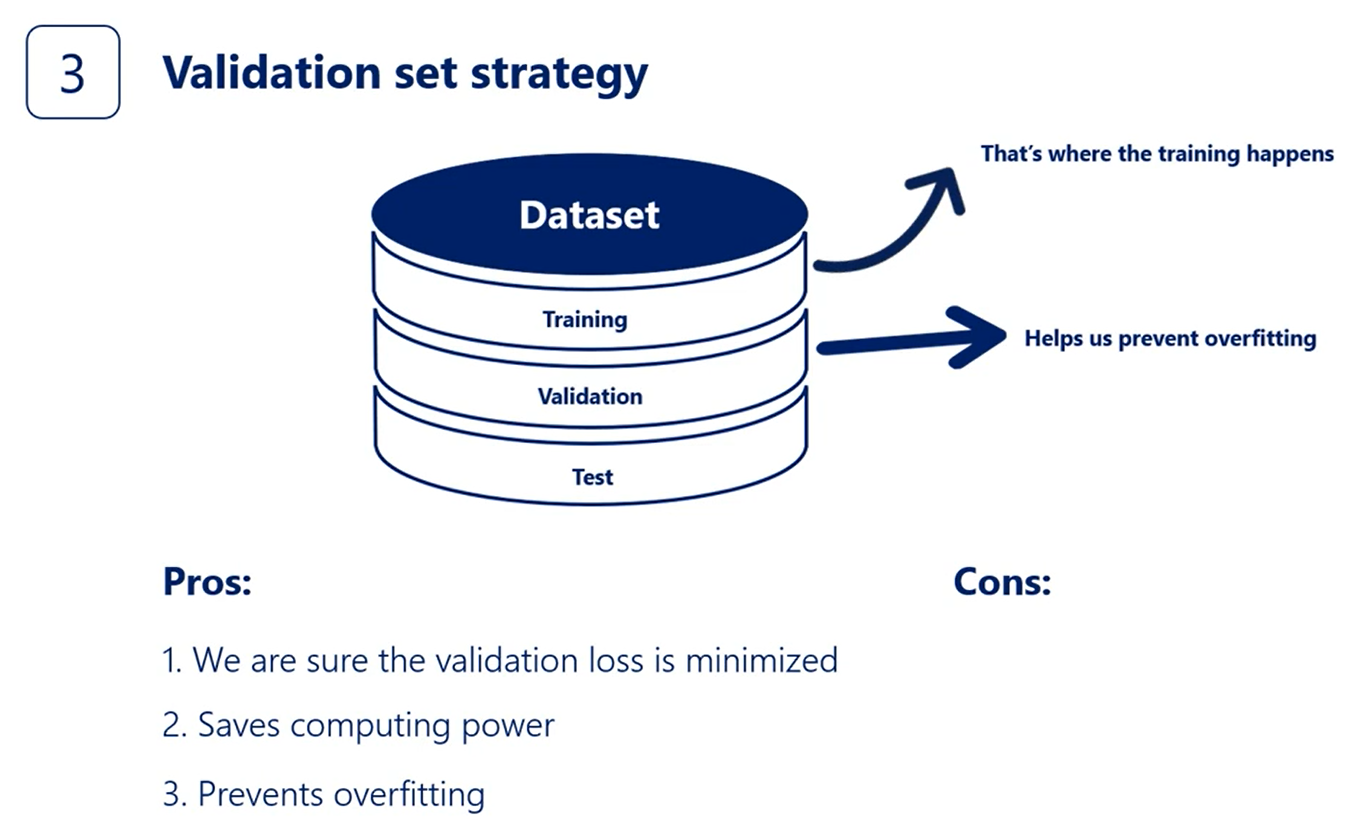

The third is the best way.

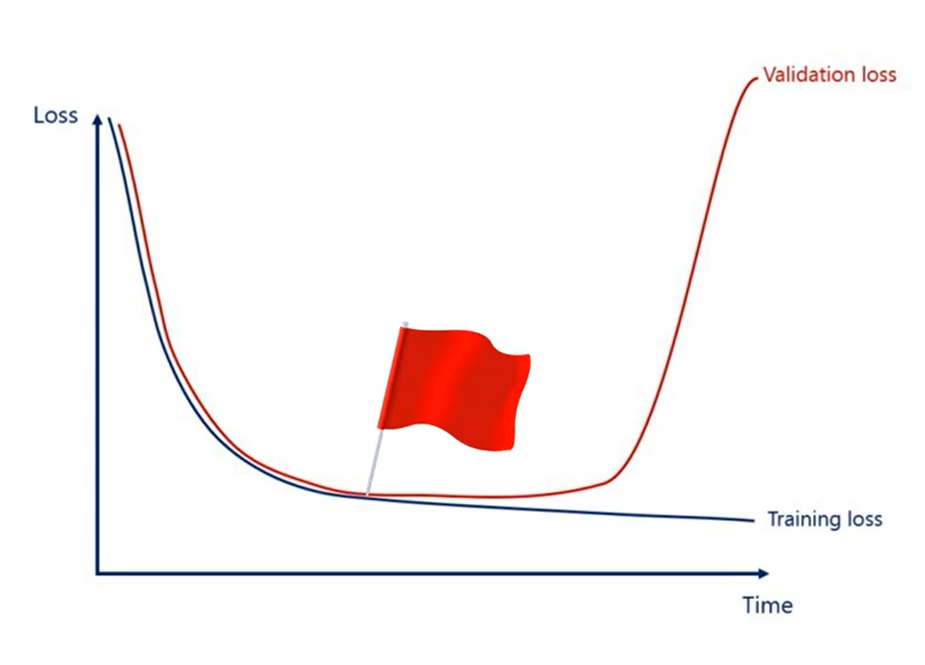

Let me state the rule once again using the proper figure a typical training occurs this way as time goes by the error become smaller.

The distribution is exponential as initially we are finding better weights quickly.

The more we train the model the harder it gets to achieve an improvement. At some point it becomes almost flat.

Now if we put the validation curve on the same graph it would start with the training cost at the point when we started overfitting the validation cost will start increasing.

Here's the point I wish the two functions begin diverging.

That's our red flag. We should stop the algorithm before we do more damage to the model.

Now depending on the case. Different types of early stopping can be used the preset number of iterations method was used in the minimal example.

That wasn't by chance. The problem was linear and super simple. A more complicated method for early stopping would be a stretch.

The second method that monitors the relative change is simple and clever but doesn't address overfitting.

The validation set strategy is simple and clever and it prevents overfitting.

However, it may take our algorithm a really long time to overfit it is possible that the weights are barely moving, and we still haven't started overfitting.

That's why I like to use a combination of both methods.

So my rule would be stop when the validation loss starts increasing or when the training last becomes very small.

# Initialization

Initialization is the process in which we set the initial values of weights. It was important to add to this section to the Course as an inappropriate initialization would cause in an optimizer role model let's revise what we have seen so far.

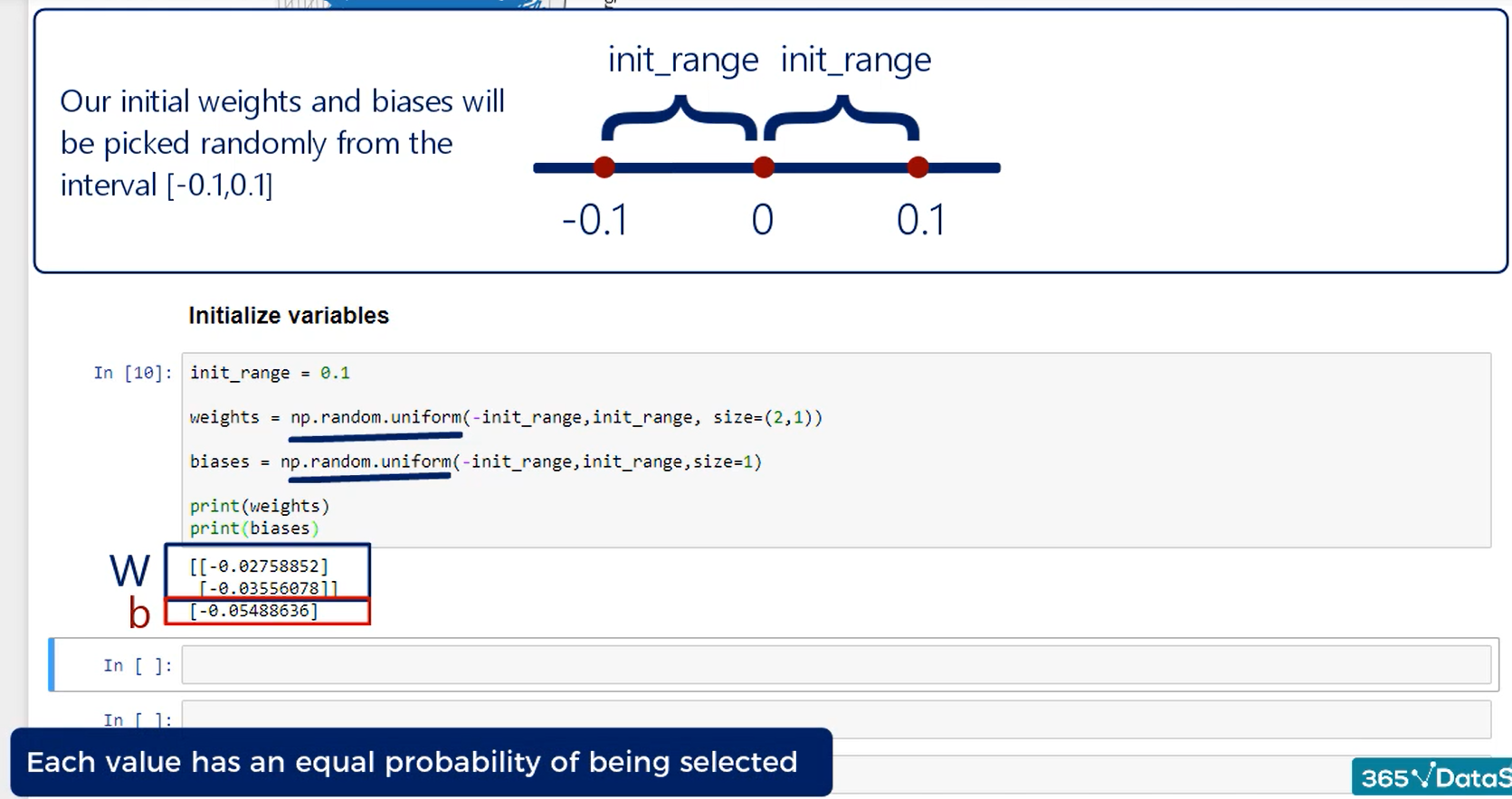

A simple approach would be to initialize weights randomly within a small range.

We did that in the minimal example we use the PI method random uniform and our range was between minus 0.1 and 0.1 this approach chooses the values randomly.

But in a uniform manner each one has the exact same probability of being chosen equal probability of being selected.

Sounds intuitive, but it is important to stress it.

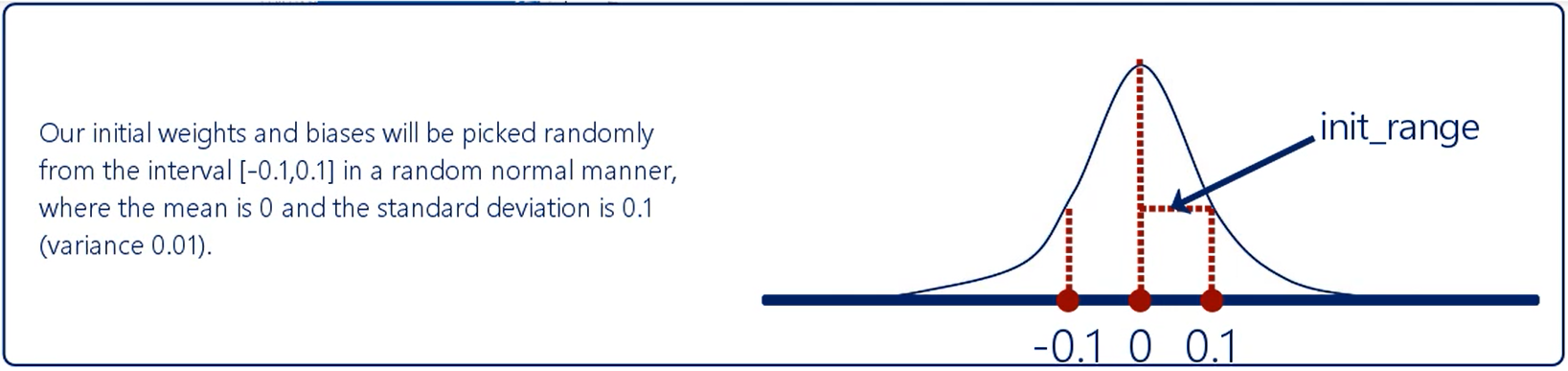

This time though we pick the numbers from a zero mean normal distribution. The chosen variance is arbitrary but should be small.

As you can guess since it follows the normal distribution values closer to 0 are much more likely to be chosen than other values.

An example of such initialization is to draw from a normal distribution with a mean zero, and a standard deviation 0.1.

Both methods are somewhat problematic although they were the norm until 2010. It was just recently that academics came up with a solution.

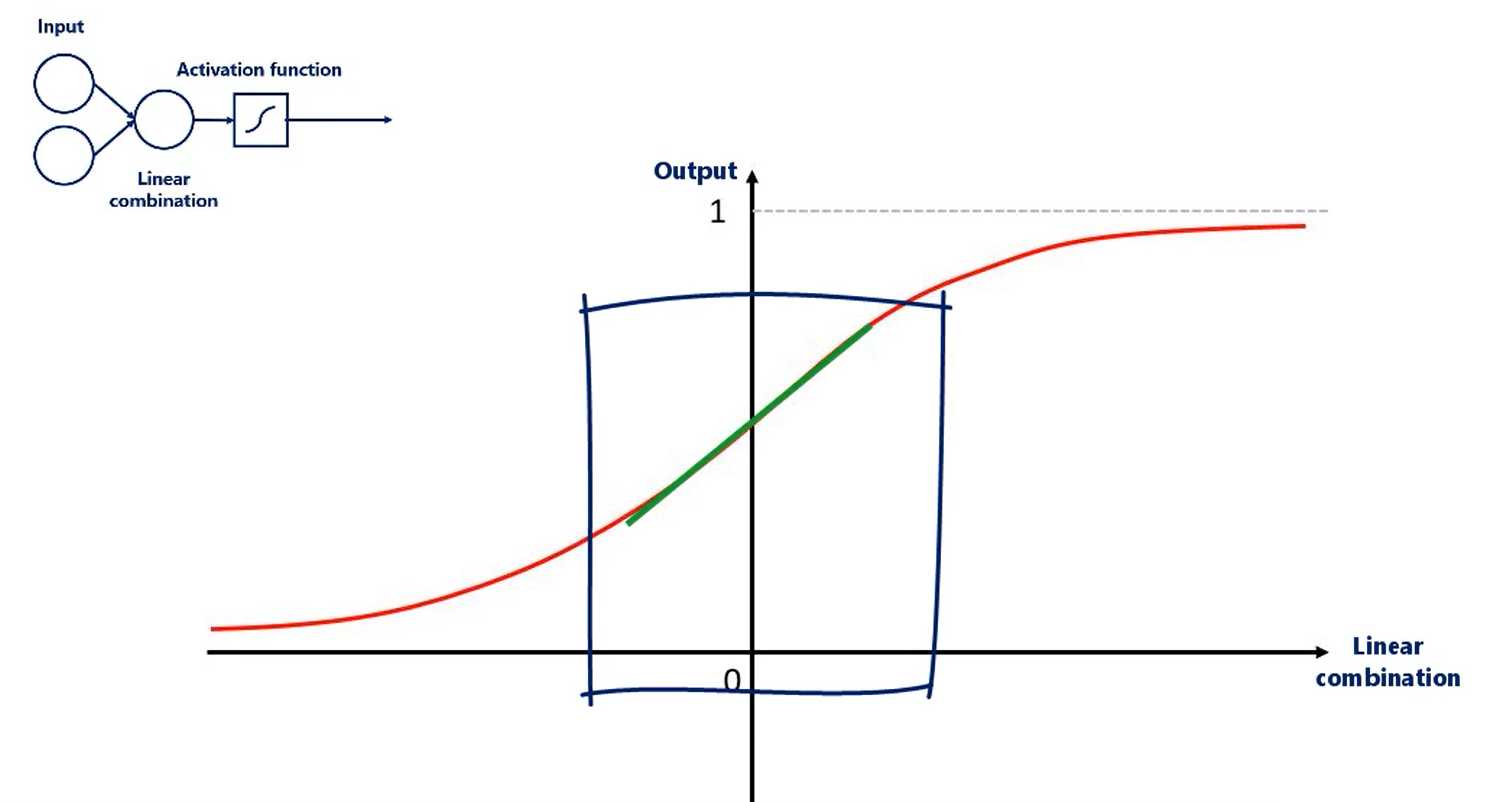

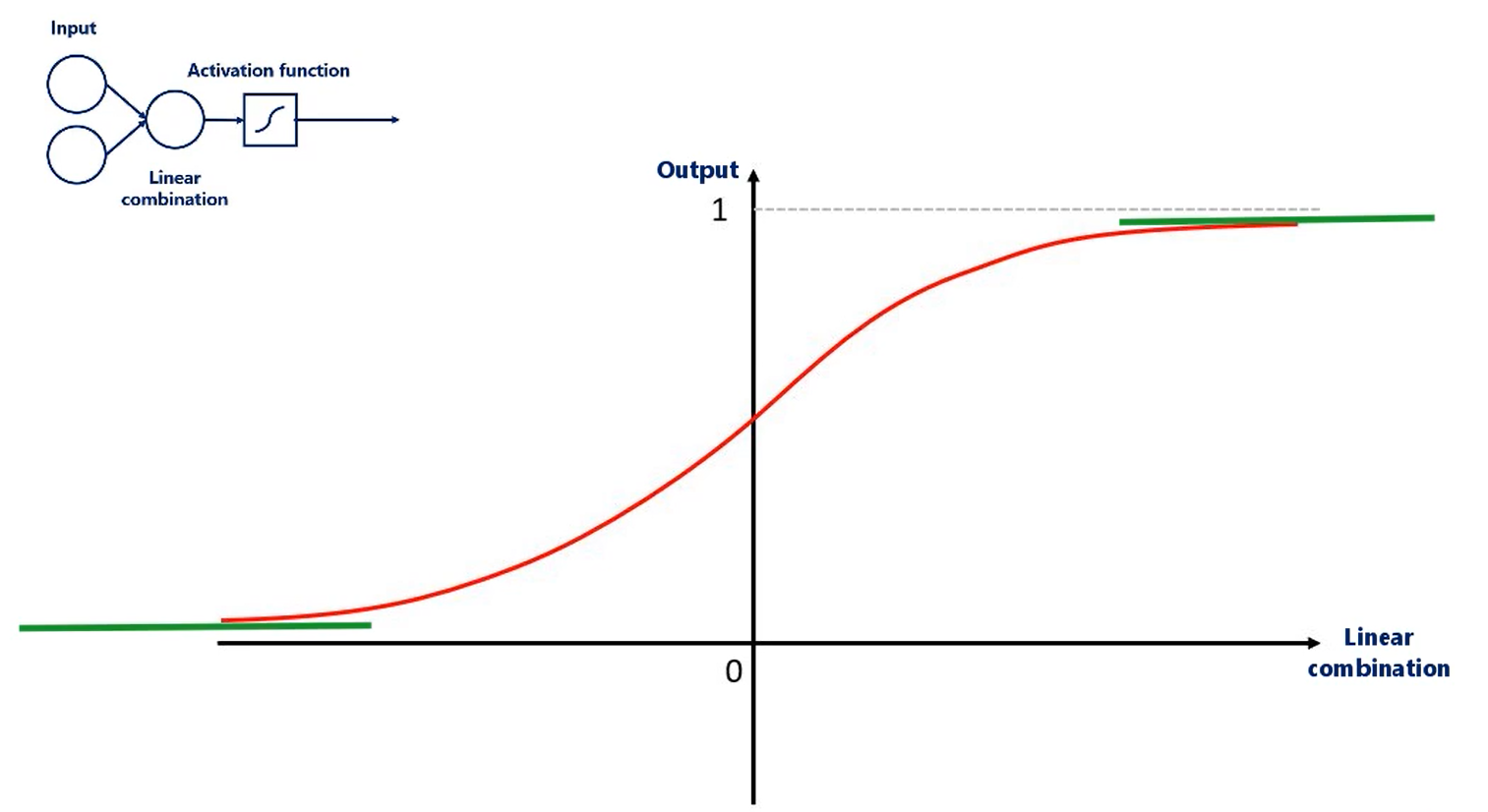

Let's explore the problem. Weights are used in linear combinations then the linear combinations are activated once more we will use the sigmoid activator the sigmoid as other commonly used non-linearities is peculiar around its mean and its Extreme's activation functions take as inputs the linear combination of the units from the previous layer right.

Well if the weights are too small this will cause values that fall around this range in this range.

Unfortunately the sigmoid is almost linear.

If all our inputs are in this range which will happen if we use small weights the sigmoid would not apply nonlinearity but a linearity to the linear combination, and non-linearities are essential for deep nets.

Conversely, if the values are too large or too small the sigmoid is almost flat.

Which cause is the output of the sigmoid to be only once or only zeros respectively a static output of the activations minimises the gradient.

Well, the algorithm is not really trained.

So what we want is a wide range of inputs for this sigmoid. These inputs depend on the weights so the weights will have to be initialized in a reasonable range so we have a nice variance along the linear combinations in the next lesson.

# Xavier Initialization

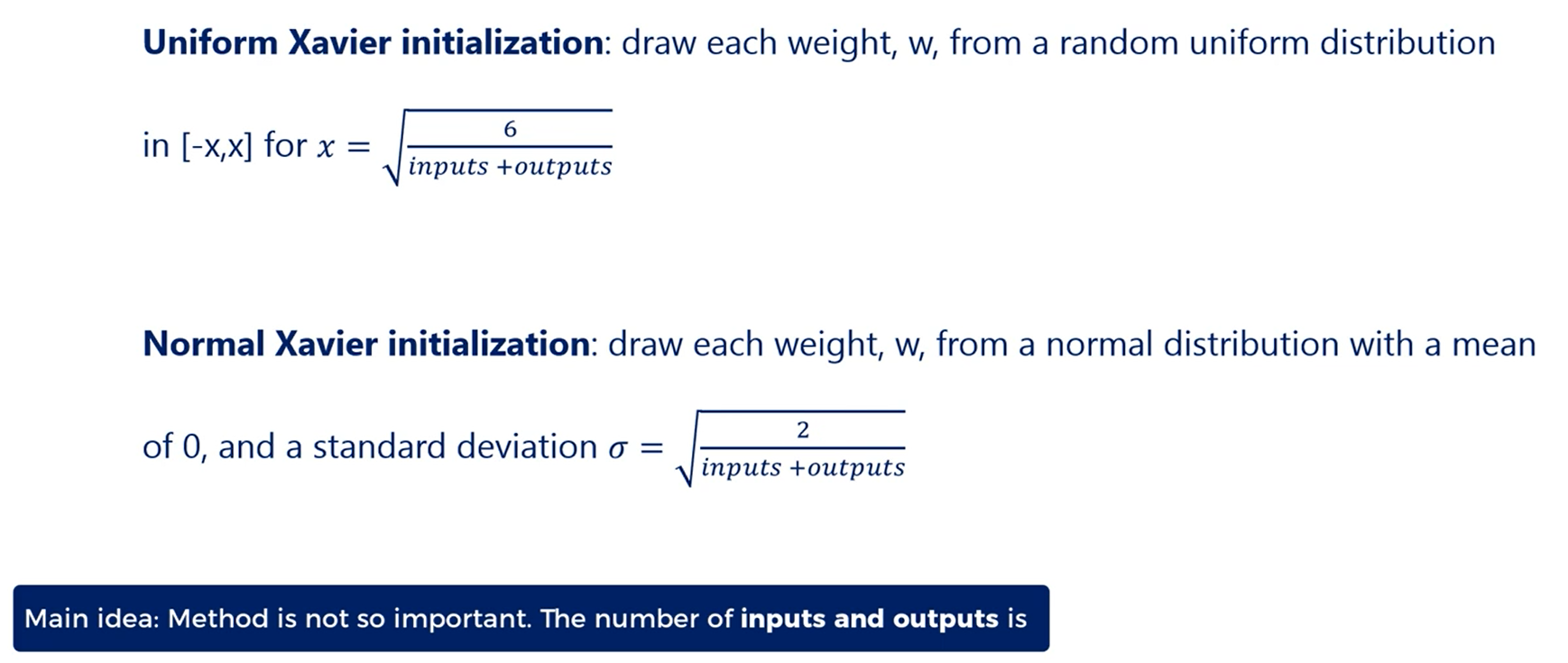

There are both a uniform Xavier initialization and a normal Xavier initialization.

The main idea is that the method used for randomization isn't so important is the number of outputs in the following where that does with the passing of each layer.

The Zeev your initialization is maintaining the variance in some bounds. So we can take full advantage of activation functions. There are two formulas.

The uniform is your initialization States.

We should draw each weight W from a random uniform distribution in the range from minus x to x where x is equal to the square root of 6 divided by the number of inputs plus the number of outputs for the transformation.

For the normal exeat your initialization we have draw each weight W from a normal distribution with a mean of zero and a standard deviation equal to two divided by the number of inputs plus the number of outputs for the transformation the numerator values 2 and 6 vary across sources. But the idea is the same.

Another detail you should notice is that the number of inputs and outputs matters outputs are clear. That's where the activation function is going.

So the higher number of outputs the higher need to spread weights.

What about inputs?

Well optimization is done through back propagation. So when we back propagate we would obviously have the same problem but in the opposite direction.

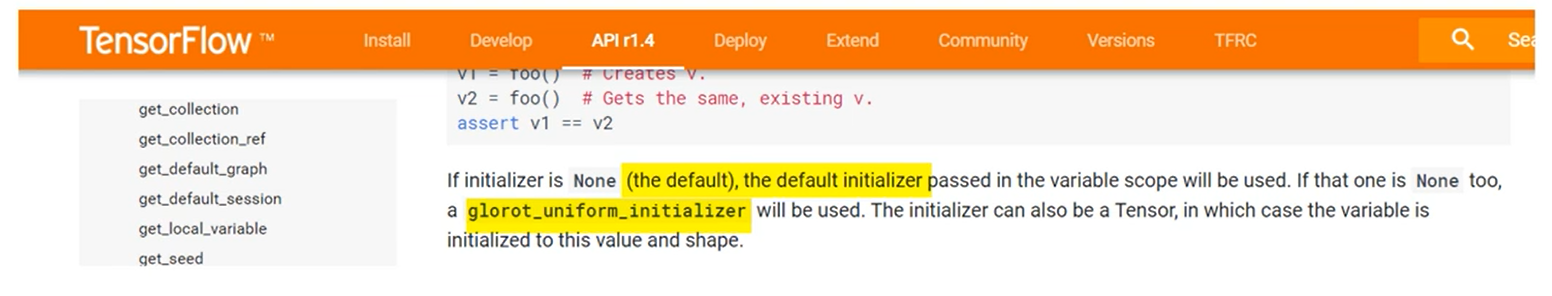

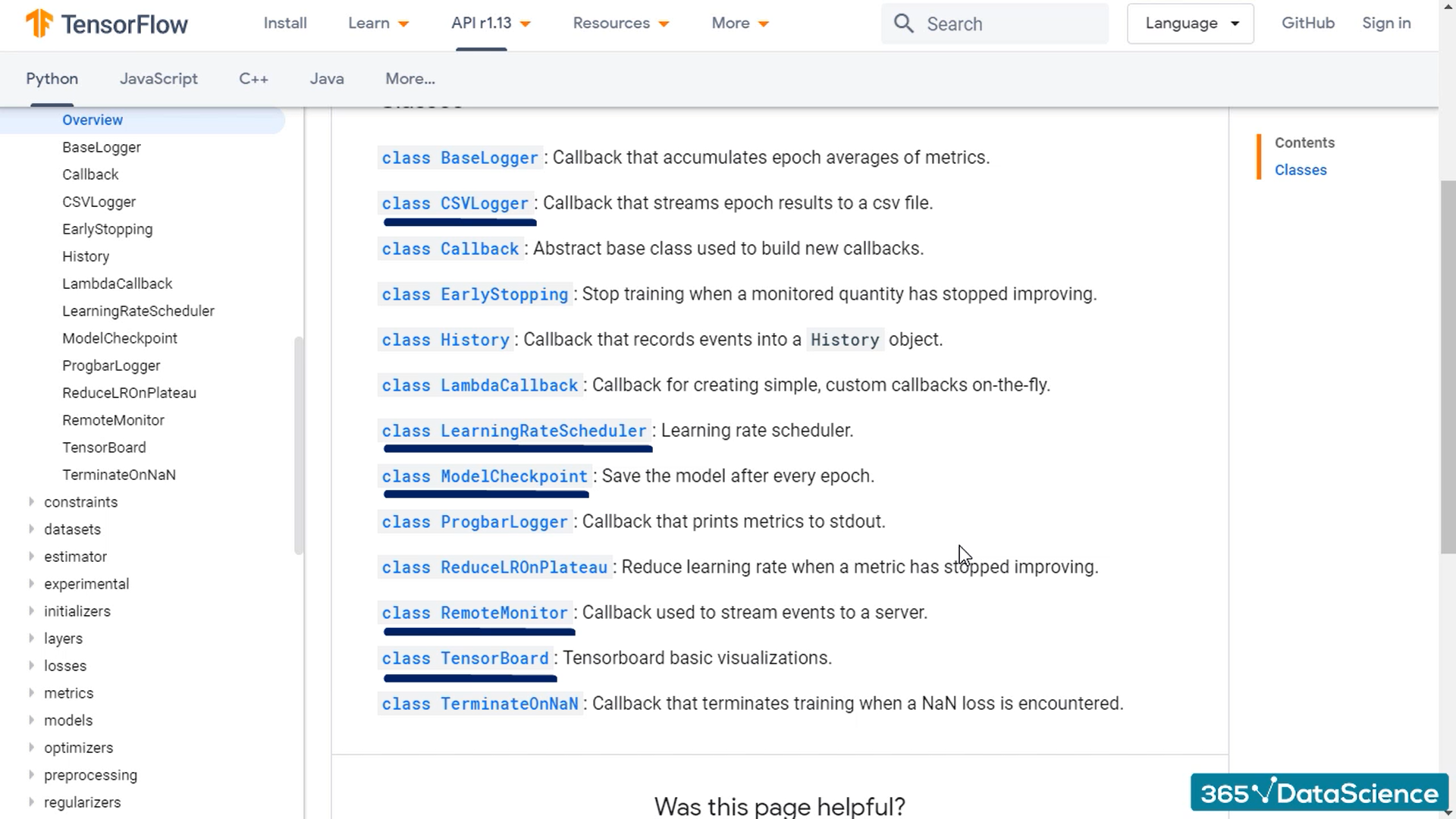

Finally, in TensorFlow this is the default initializer.

So if you initialize the variables without specifying how it will automatically adopt the exit of your initializer unlike what we did in the minimal example.

# Gradient descent and learning rates

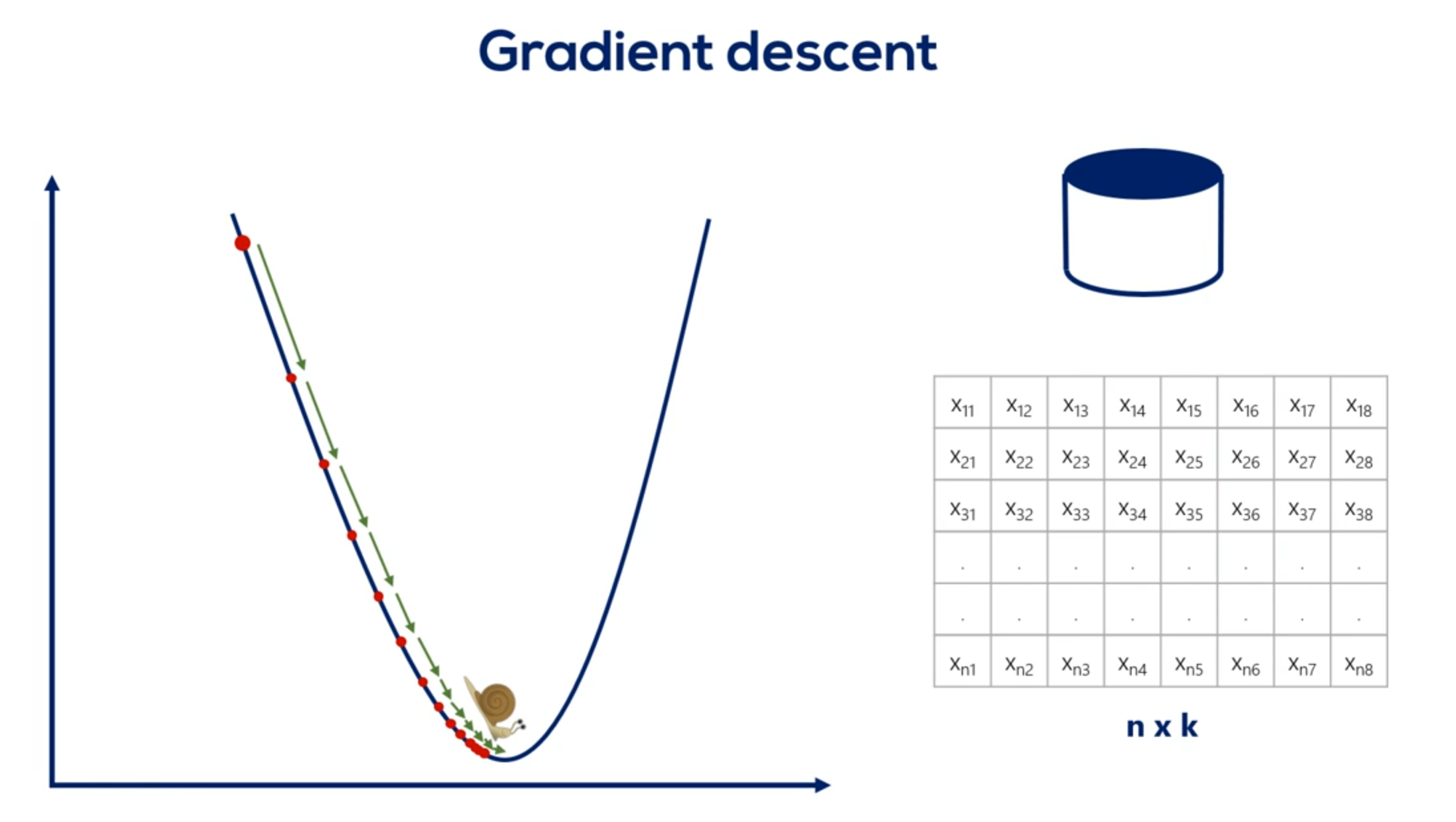

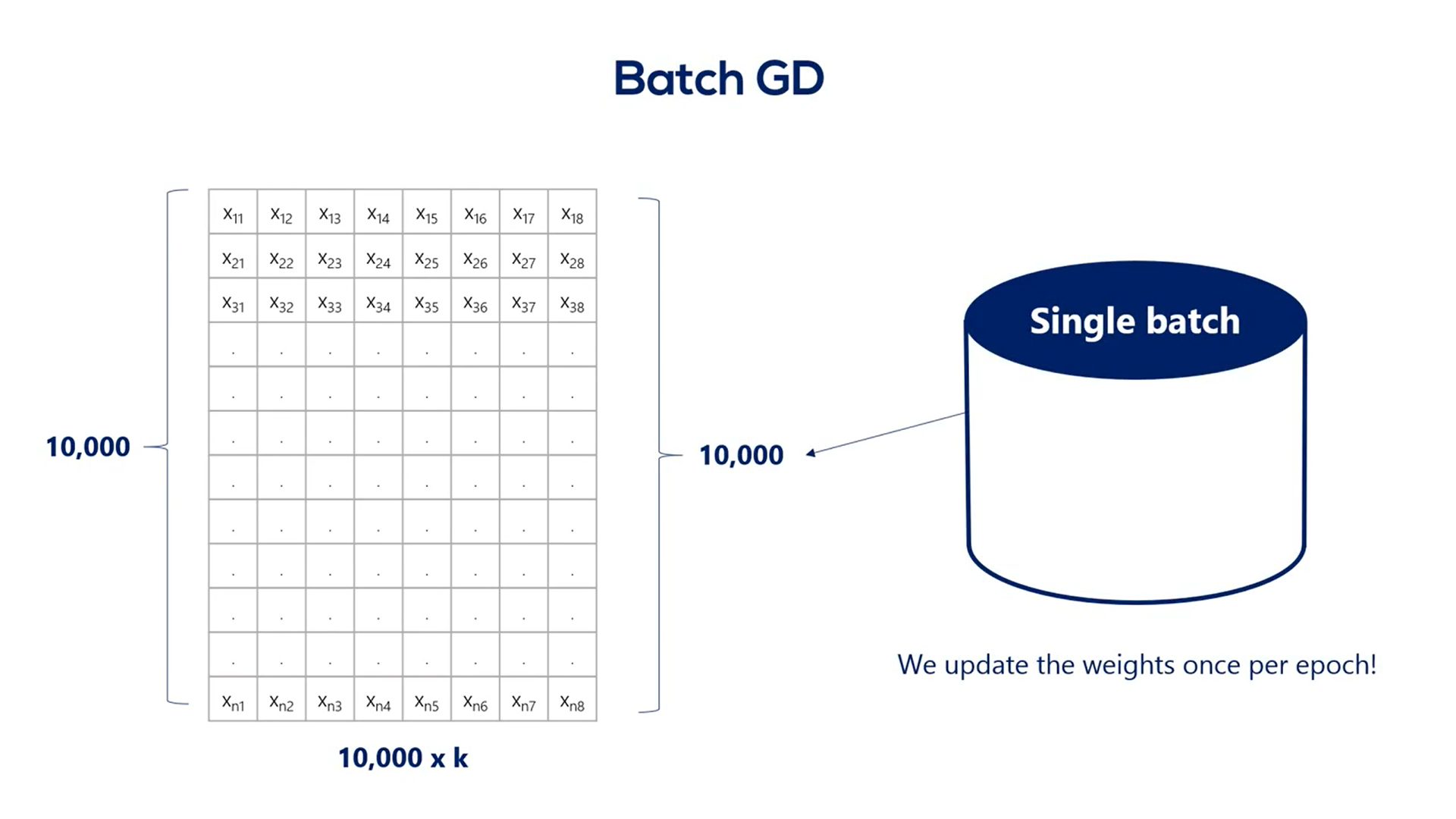

The gradient descent, GD, short for gradient descent, iterates over the whole training set before updating the weights.

Each update is very small.

That's due to the whole concept of the gradient descent, driven by the small value of the learning rate.

As you remember, we couldn't use a value too high, as this jeopardises the algorithm. Therefore, we have many epochs over many points using a very small learning rate.

This is slow.

It's not descending.

It's basically snailing down the gradient.

Fortunately for us, there is a simple solution to the problem.

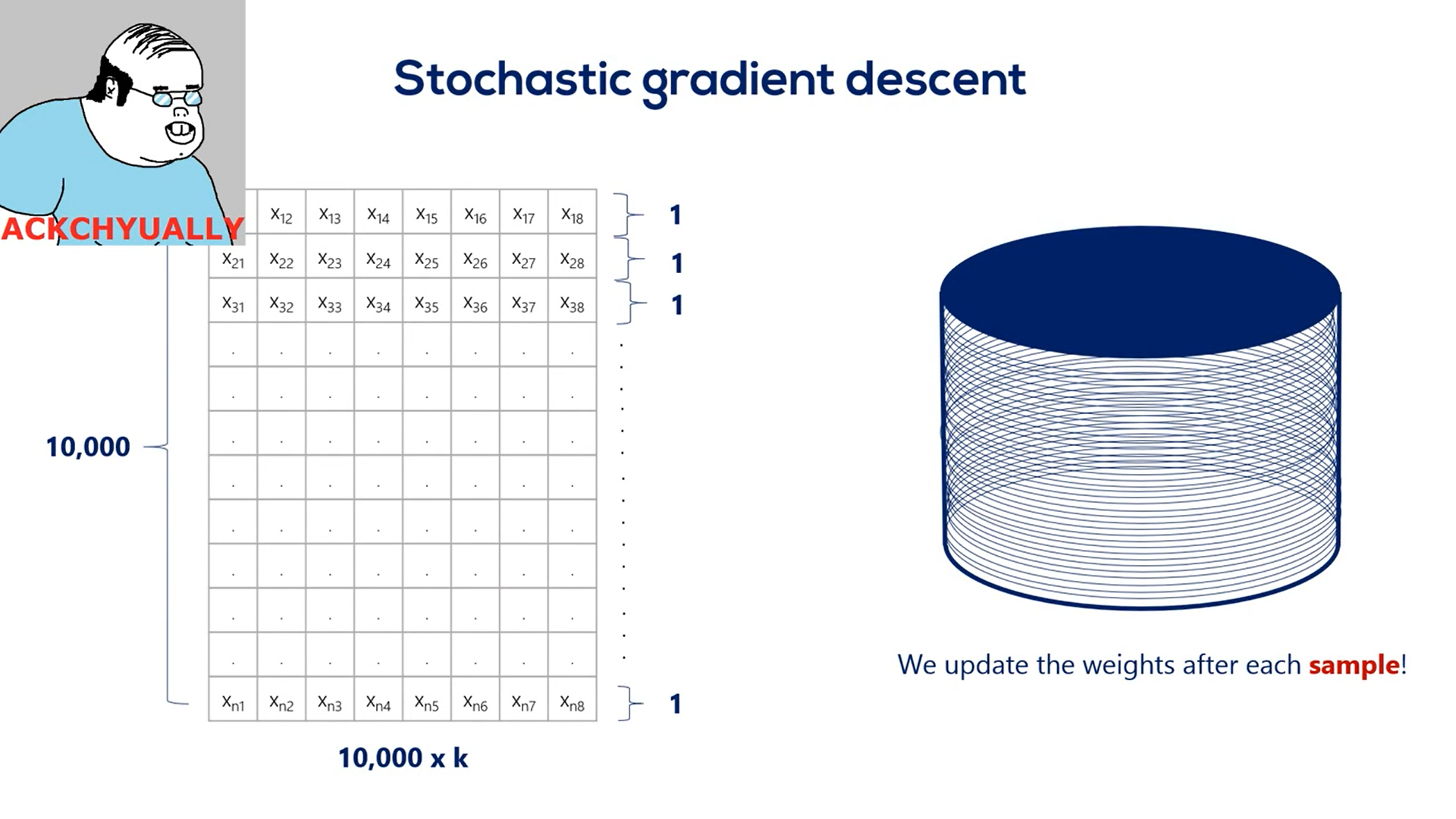

It's a similar algorithm called the SGD or the stochastic gradient descent.

It works in the exact same way, but instead of updating the weights once per epoch it updates them in real time inside a single epoch.

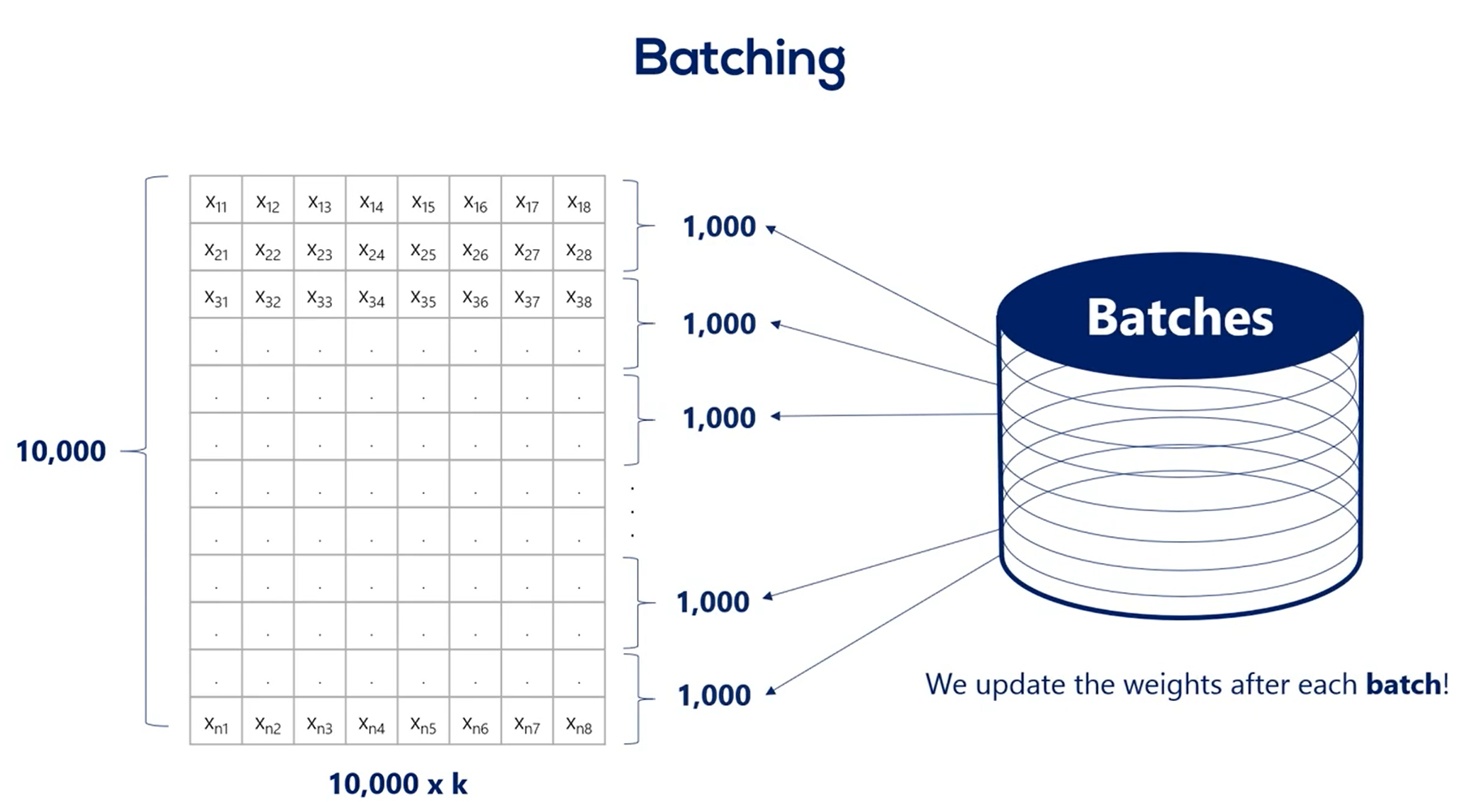

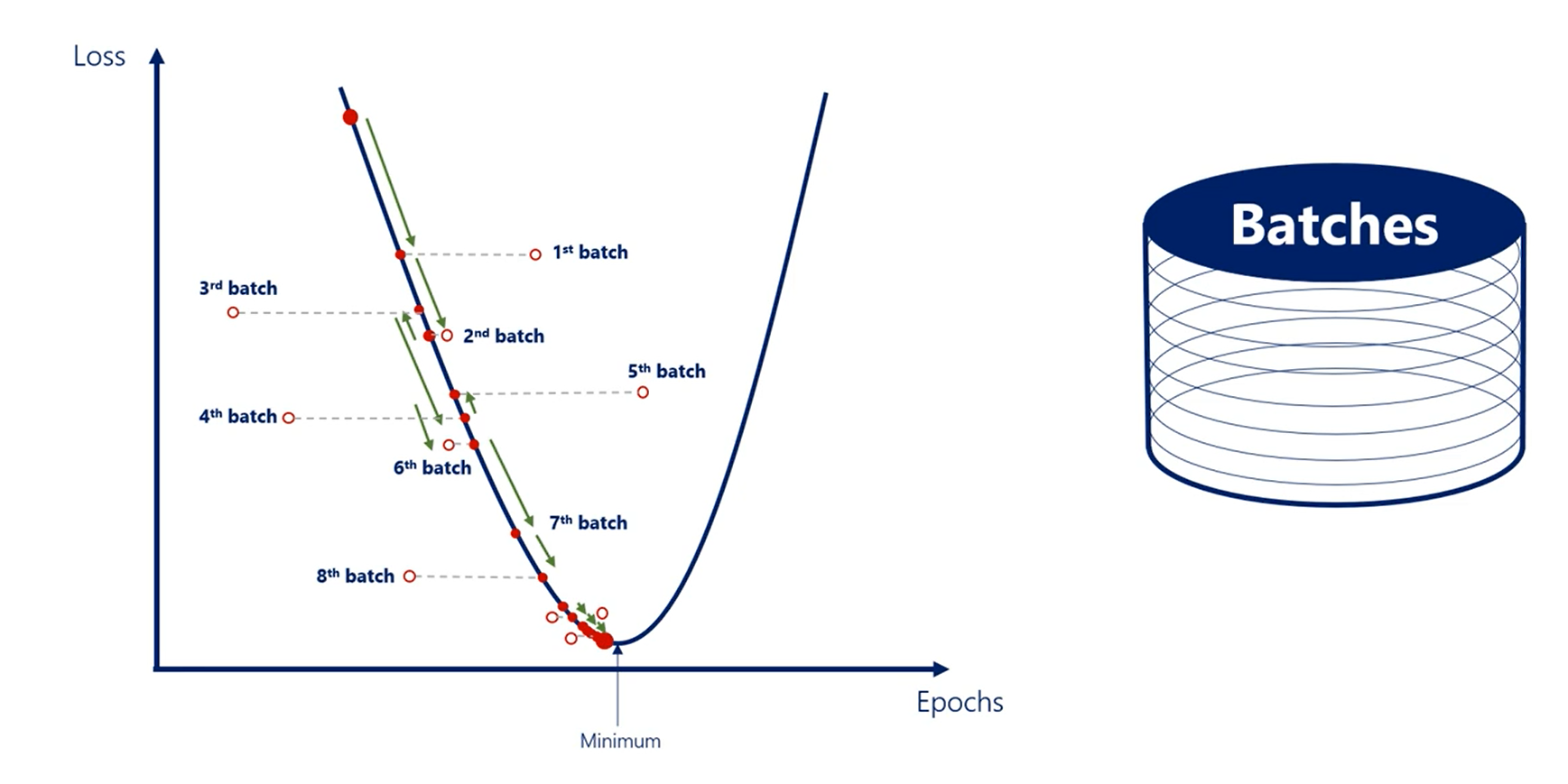

The stochastic gradient descent is closely related to the concept of batching.

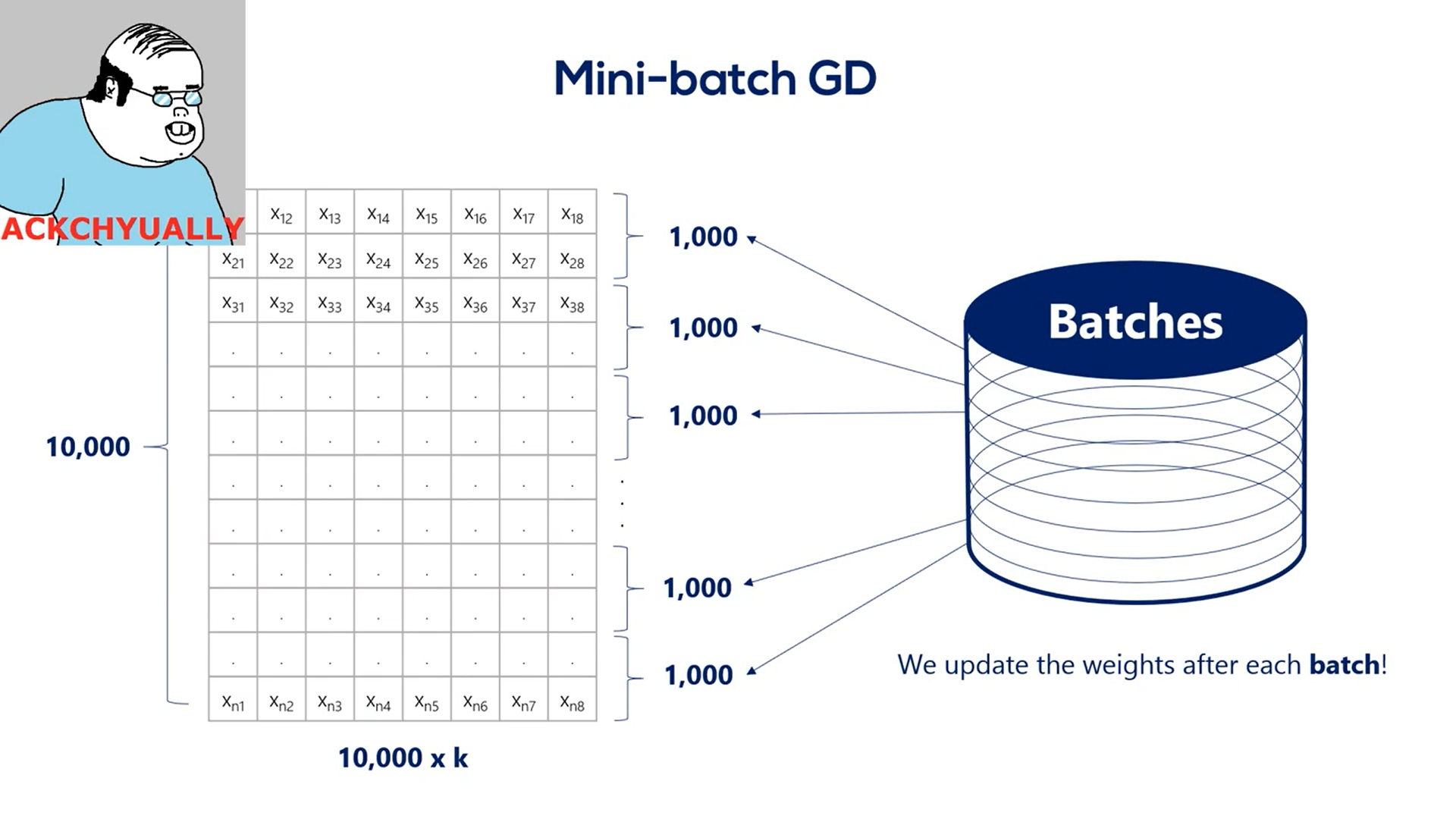

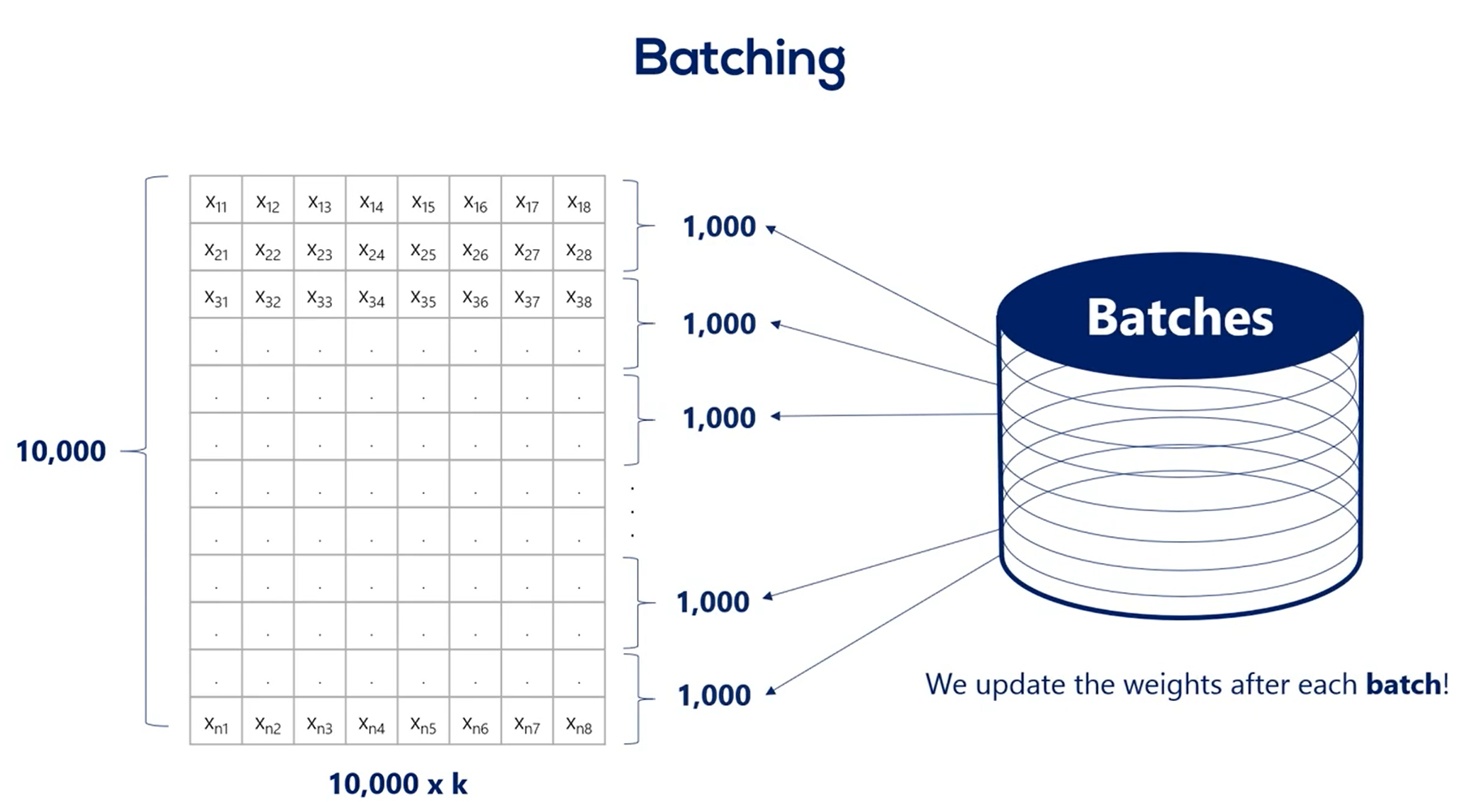

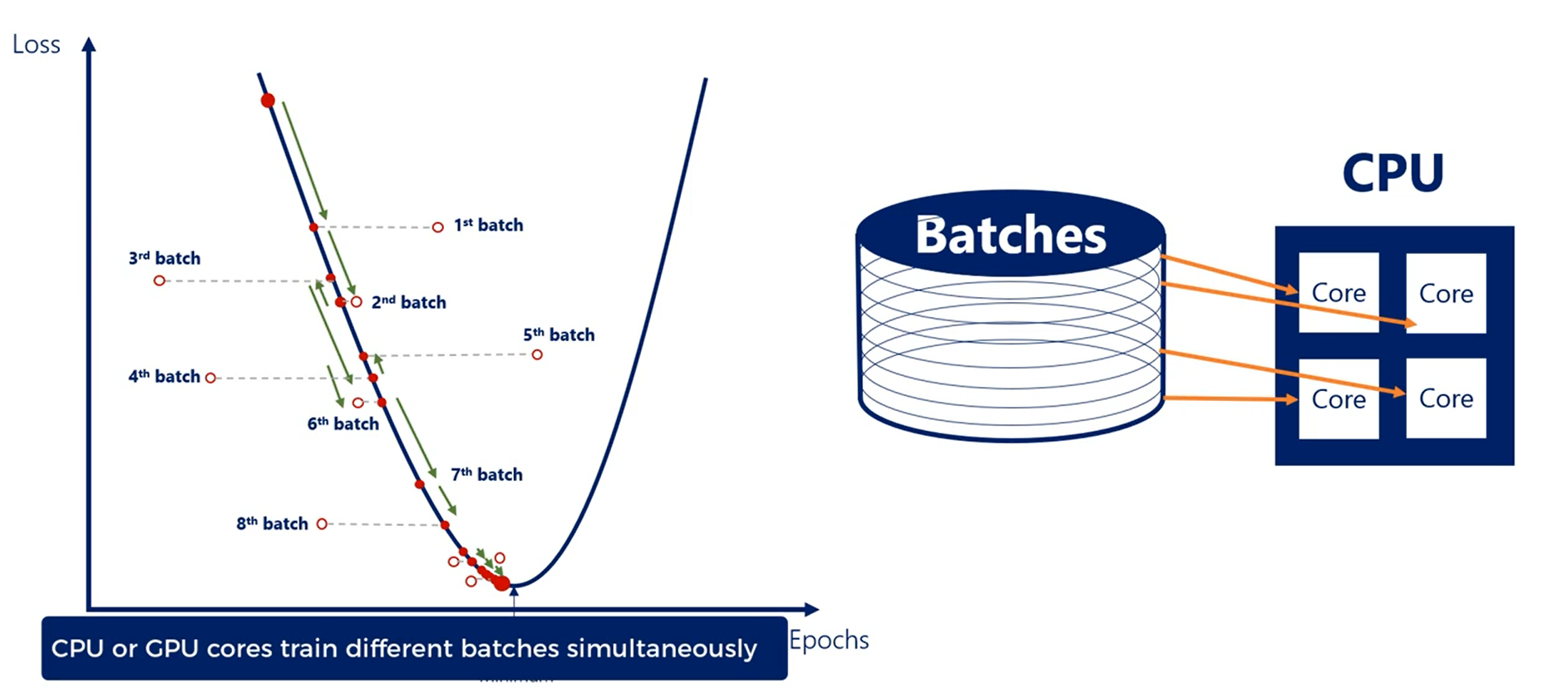

Batching is the process of splitting data into and batches often called many batches.

We update the weights after every batch instead of every epoch.

Let's say we have 10000 training points. If we choose a batch size of 1000 then we have 10 batches per epoch. So for every full iteration over the training data set we would update the weights 10 times instead of one.

This is by no means a new method. It is the same as the gradient descent but much faster.

The SGD comes at a cost.

It approximates things a bit.

So, we lose a bit of accuracy, but the tradeoff is worth it.

That is confirmed by the fact that virtually everyone in the industry uses stochastic gradient descent not gradient descent.

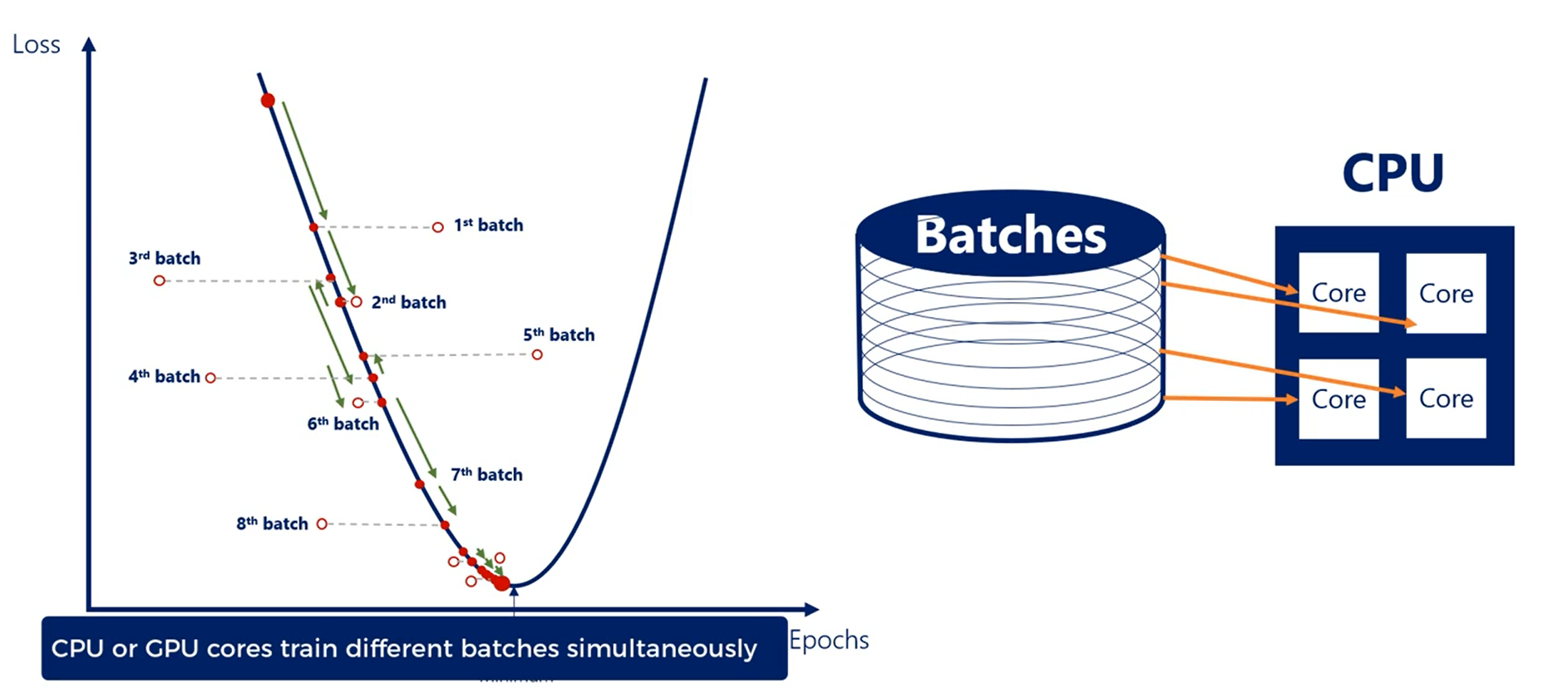

So why does this speed up the algorithm so drastically?

There are a couple of reasons, but one of the finest is related to hardware. Splitting the training set into batches allows the CPU cores or the GPU cores to train on different batches in parallel. This gives an incredible speed boost, which is why practitioners rely on it.

Actually, stochastic gradient descent is when you update after every input. So your batch size is one.

What we have been talking about was technically called mini batch.

Gradient descent However, more often than not, practitioners refer to the mini batch GD as stochastic gradient descent.

If you are wondering, the plane gradient descent we talked about at the beginning of the Course is called batched GD as it has a single batch.

# Gradient descent pitfalls

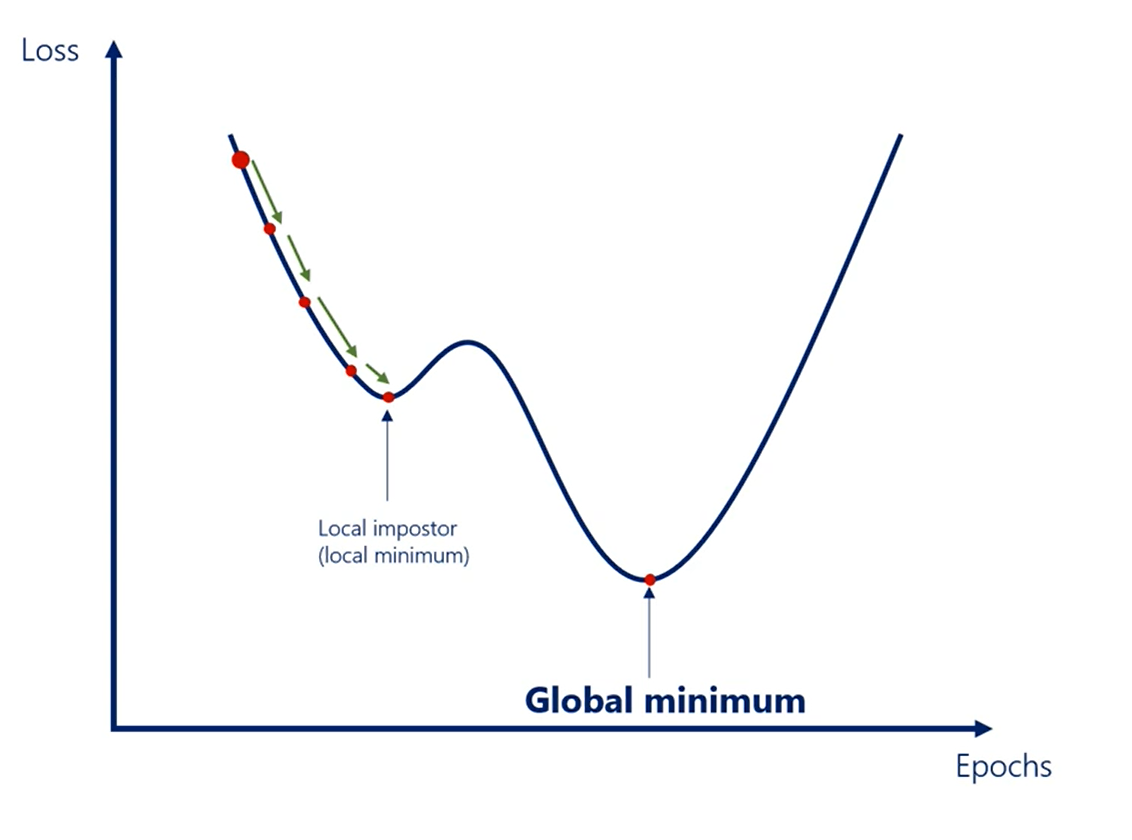

In real life though loss functions are not so regular. What if I told you this was not the whole graph of the last function. It was just one of its minima.

A local imposter rather than the sought extremum zooming out.

We see that actually the global minimum of the loss is this point.

Each local minimum is a suboptimal solution to the machine learning optimization.

Gradient descent is prone to this issue. Often it falls into the closest minimum to the starting point rather than the global minimum.

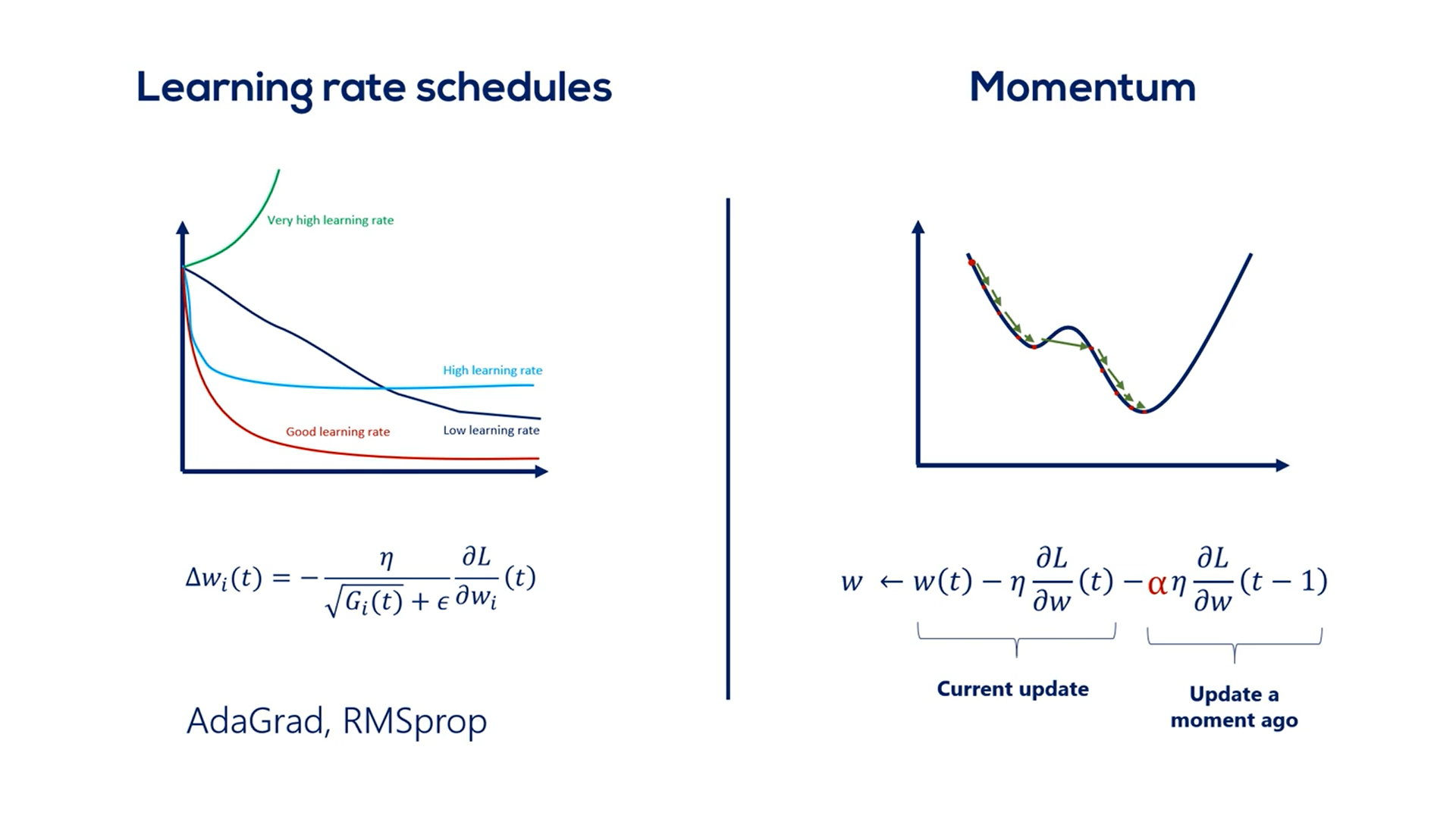

# Momentum

The gradient descent and the stochastic gradient descent are good ways to train our models.

We need not change them. We should simply extend them the simplest extension we should apply.

It is called momentum.

An easy way to explain momentum is through a physics analogy.

Imagine the gradient descent as rolling a ball down a hill. The faster the ball rolls the higher is its momentum.

A small dip in the grass would not stop the ball it would rather continue rolling until it has reached a flat surface out of which it cannot go.

The small dip is the local minimum while the Big Valley is the global minimum.

If there wasn't any momentum the ball would never reach the desired final destination. It would have rolled with some none increasing speed and would have stopped in the dip.

The momentum accounts for the fact that the ball is actually going downhill.

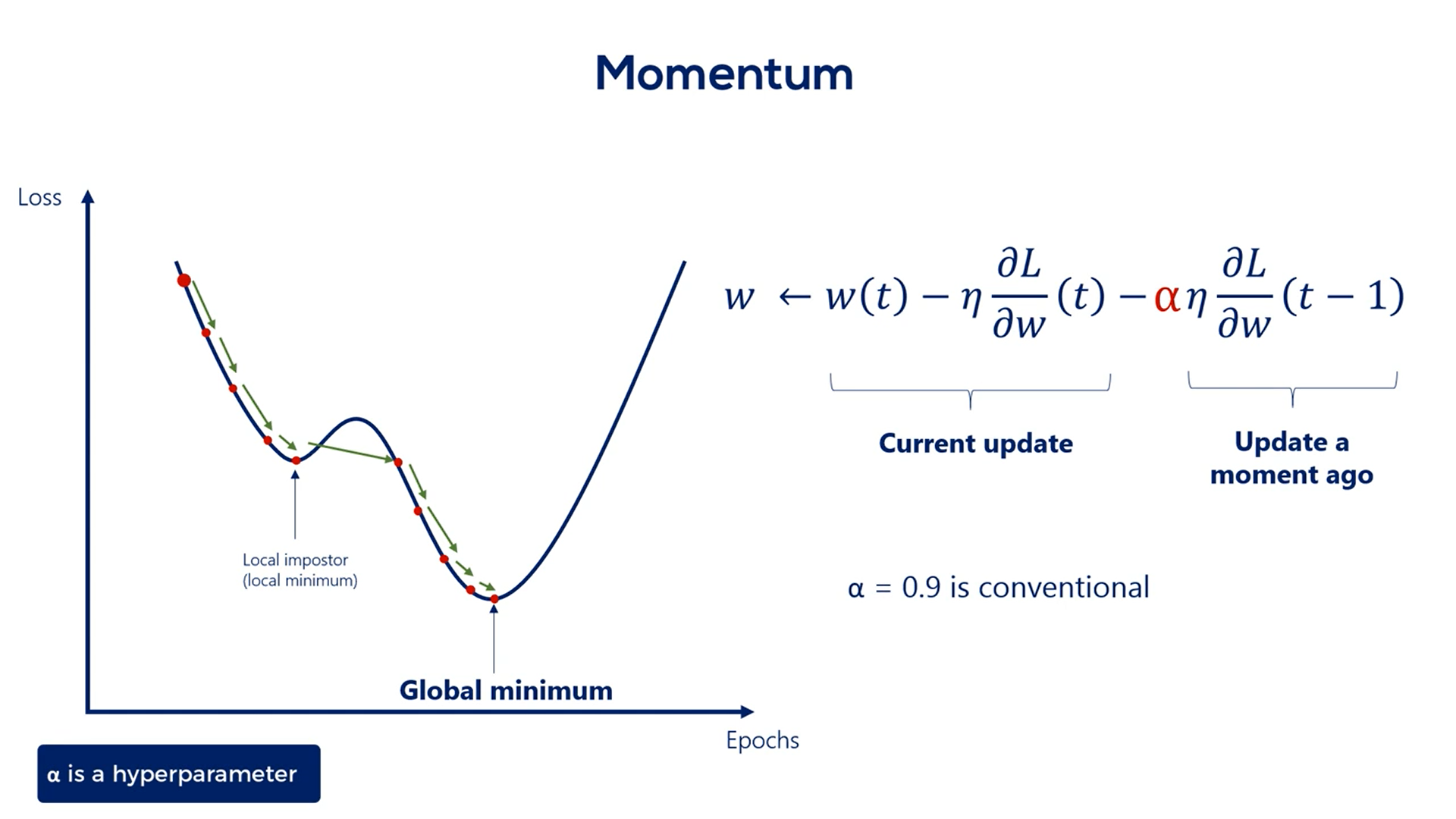

So how do we add momentum to the algorithm?

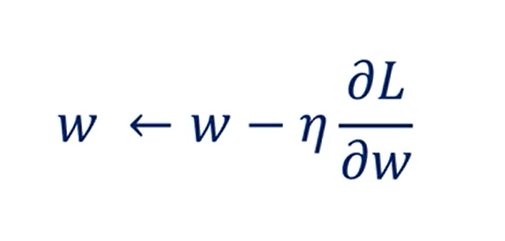

The rule so far was:

Including momentum, we will consider the speed with which we've been descending so far.

For instance, if the ball is rolling fast the momentum is high otherwise the momentum is low.

The best way to find out how fast the ball rolls is to check how fast it rolled a moment ago.

That's also the method adopted in machine learning.

We add the previous update step to the formula. We want to multiplied by some coefficient. Otherwise, we would assign the same importance to the current update and the previous one.

Usually, we use an alpha of 0.9 to adjust the previous update. Alpha is a hyper parameter and we can play around with it for better results.

0.9 is the conventional rule of thumb.

# Learning Rates Schedules

# Learning rate ( )

must be small enough so we gently descend through the last function instead of oscillating wildly around the minimum and never reaching it, or diverging to infinity.

It also had to be big enough, so the optimization takes place in a reasonable amount of time.

A smart way to deal with choosing the proper learning rate is adopting a so-called learning rate schedule.

There are two basic ways to do that:

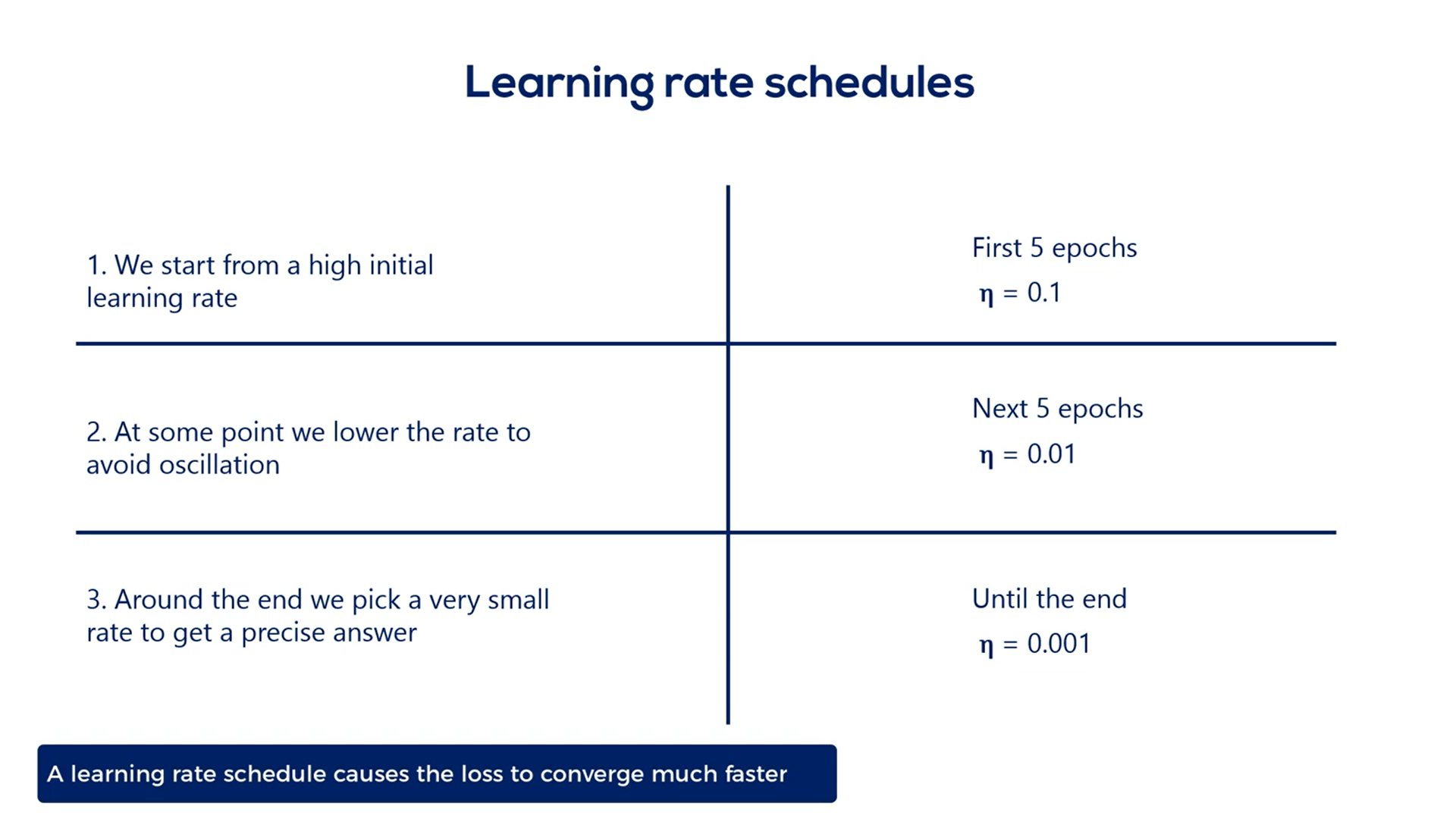

The simplest one is setting a pre-determined piecewise constant learning rate.

For example, we can use a learning rate of 0.1 for the first five epochs then 0.01 for the next five and 0.00 one until the end.

This causes the loss function to converge much faster to the minimum and will give us an accurate result.

Although, it is crude, as it requires us to know approximately how many Epochs it will take the last to converge. Still, beginners may want to use it as it makes a great difference compared to the constant learning rate.

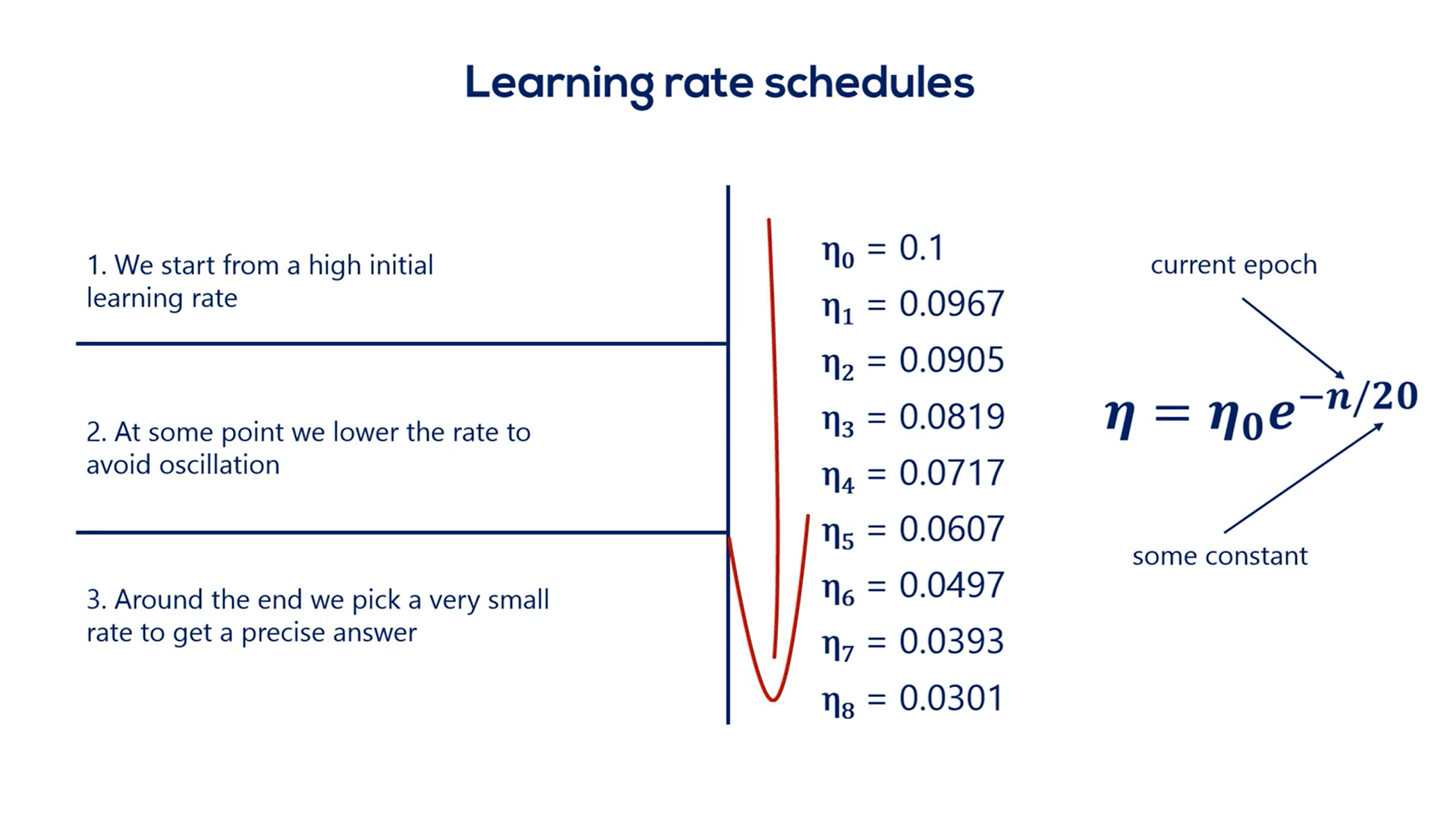

A second much smarter approach is the exponential schedule which is a much better alternative as it smoothly reduces or decays the learning rate.

We usually start from a high value such as . Then we update the learning rate at each epoch using the rule.

In this expression n is the current epoch while c is a constant.

Here's the sequence of learning rates that would follow for a c equal to 20.

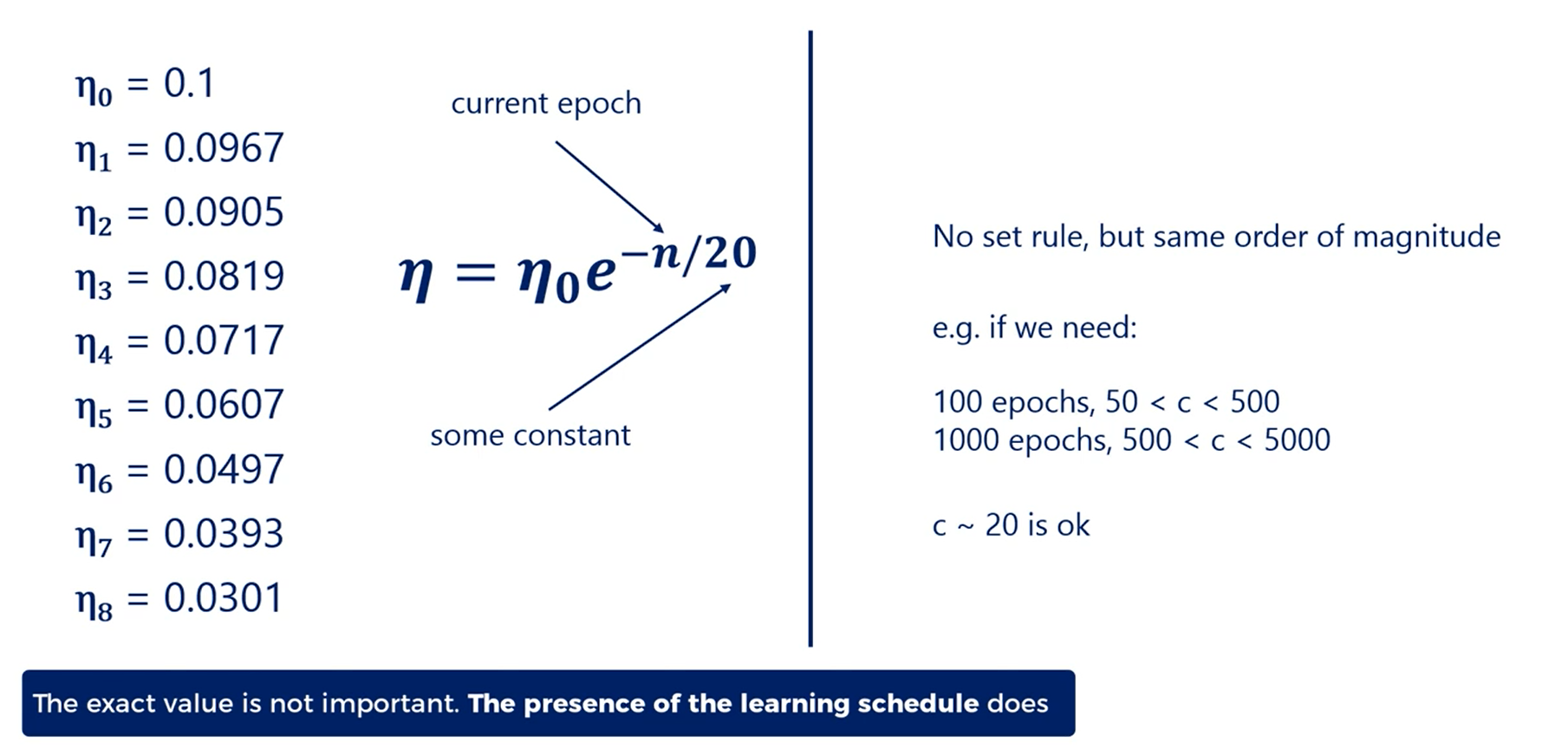

There is no rule for the constant c, but usually it should be the same order of magnitude as the number of epochs needed to minimize the loss.

For example, if we need 100 epochs values of c from 50 to 500 are all fine.

If we need 1000 values from 500 to 5000 are alright.

Usually we'll need much less.

So a value of c around 20 or 30 works well.

However,the exact value of c doesn't matter as much.

What makes a big difference is the presence of the learning schedule itself.

c is also a hyper parameter.

As with all hyper parameters it may make a difference for your particular problem.

You can try different values of c and see if this affects the results you obtain.

It's worth pointing out that all those cool new improvements such as learning rate schedules and momentum come at a price. We pay the price of increasing the number of hyper parameters for which we must pick values.

Generally, the rule of thumb values work well, but bear in mind that for some specific problem of yours they may not.

It's always worth it to Explore several hyper parameter values before sticking with one

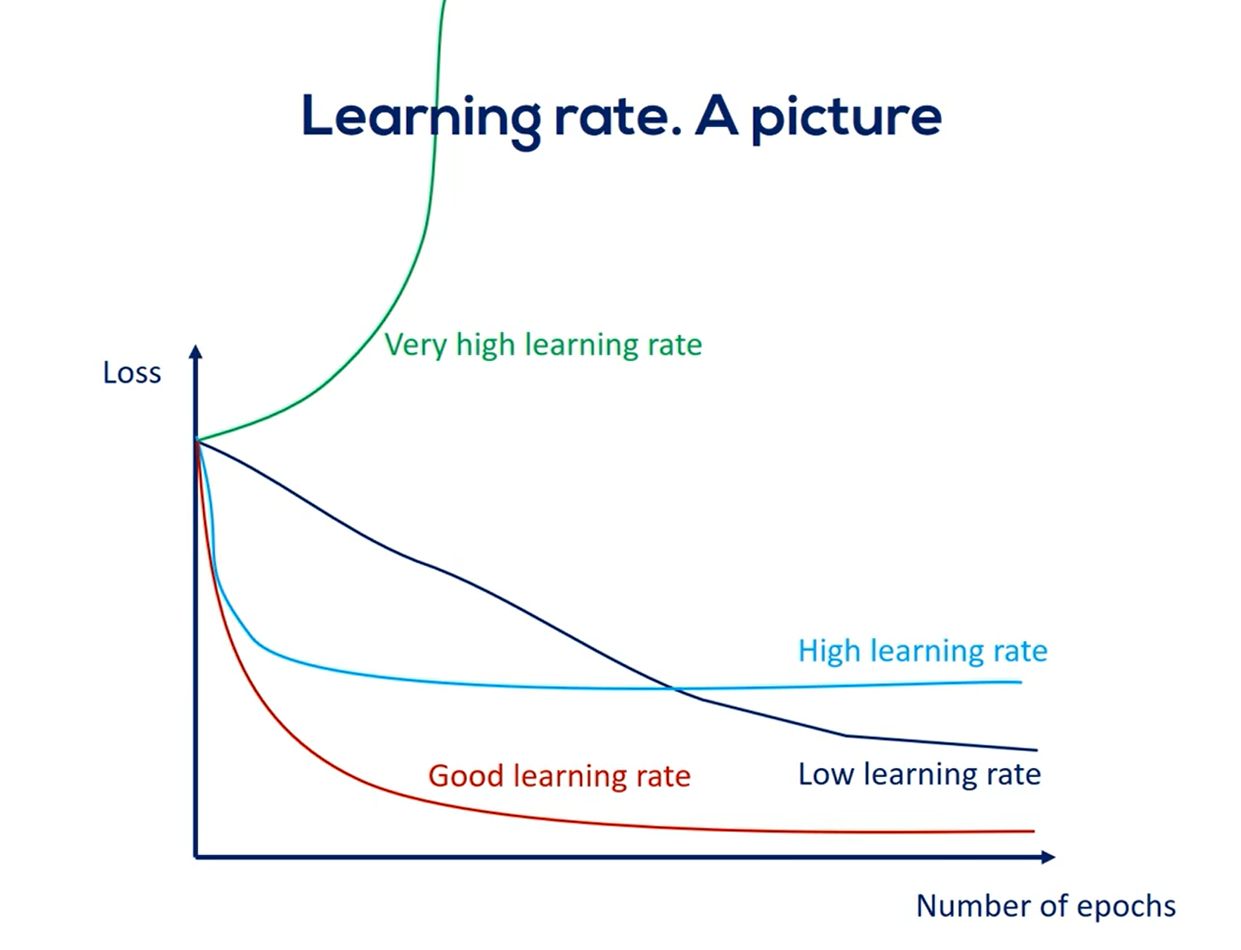

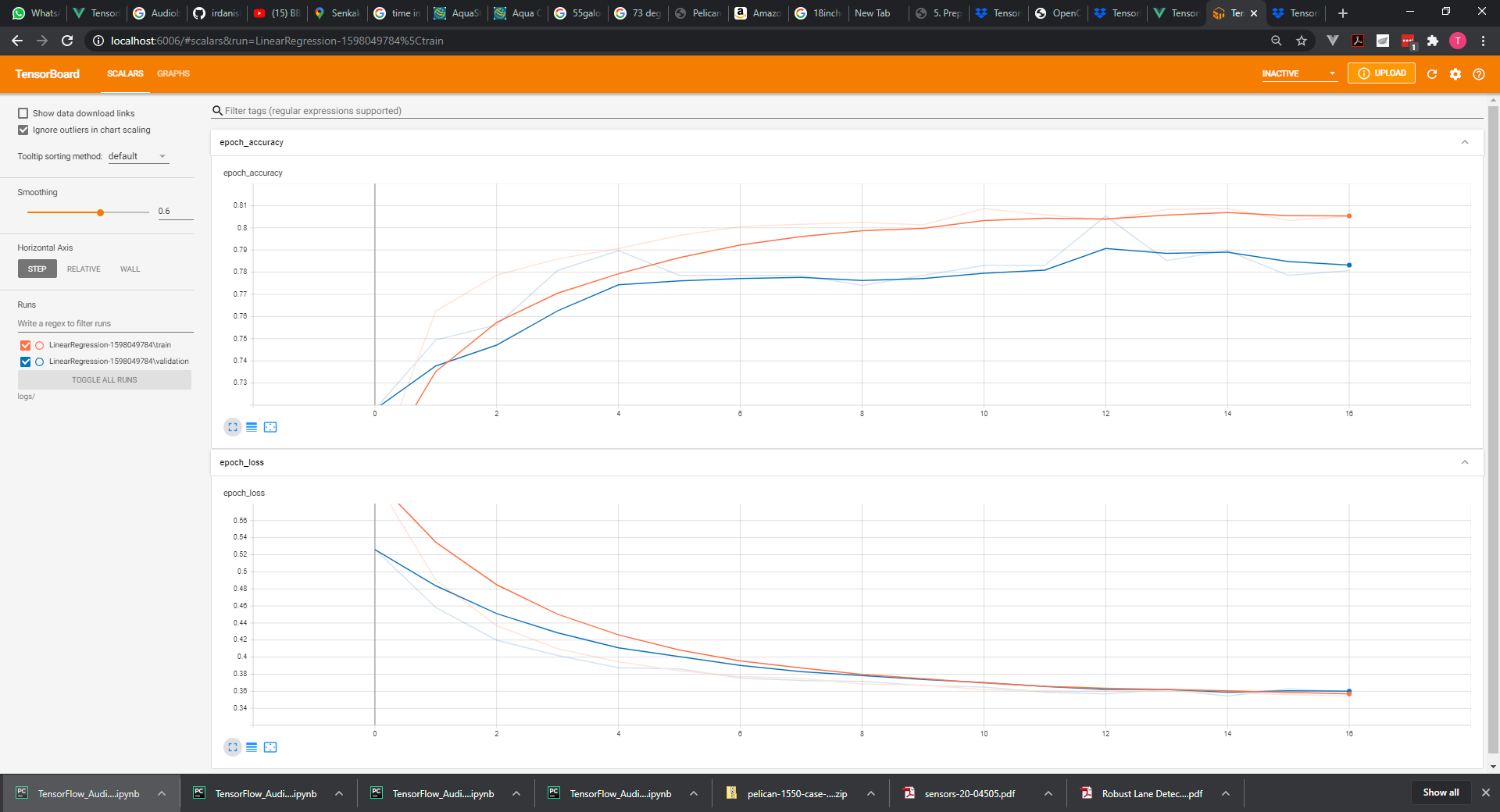

We can see a graph of the loss as a function of the number of epochs.

A well-selected learning rate such as the one defined by the exponential schedule would minimize the loss much faster than a low learning rate.

Moreover, it would do so more accurately than a high learning rate.

Naturally, we are aiming at the good learning rate.

Problem is we don't know what this learning rate is for our particular data model.

One way to establish a good learning rate is to plot the graph we're showing you now for a few learning rate values and pick the one that looks the most like the good curve.

Note that high learning rates may not minimize the loss a low learning rate eventually converges with a good learning rate, but the process will take much longer.

# Adaptive learning rate schedules

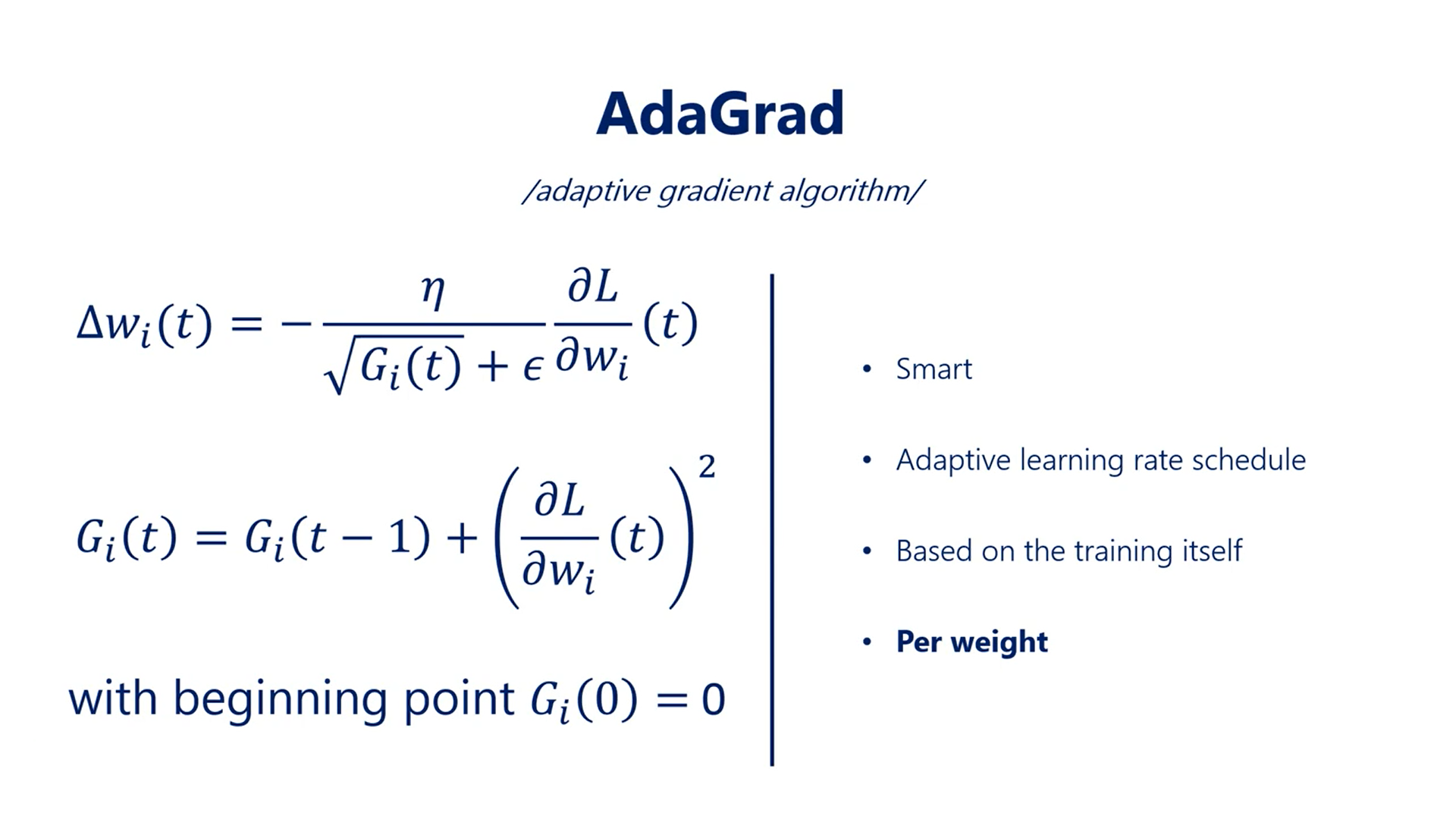

# AdaGrad:

It is basically a smart adaptive learning rate scheduler. Adaptive stands for the fact that the effective learning rate is based on the training itself.

It is not a pre-set learning schedule like the exponential one, where all the values are calculated regardless of the training process.

Another very important point is that the adaptation is per weight. This means every individual weight in the whole network keeps track of its own G function to normalize its own steps.

It's an important observation as different weights do not reach their optimal values simultaneously.

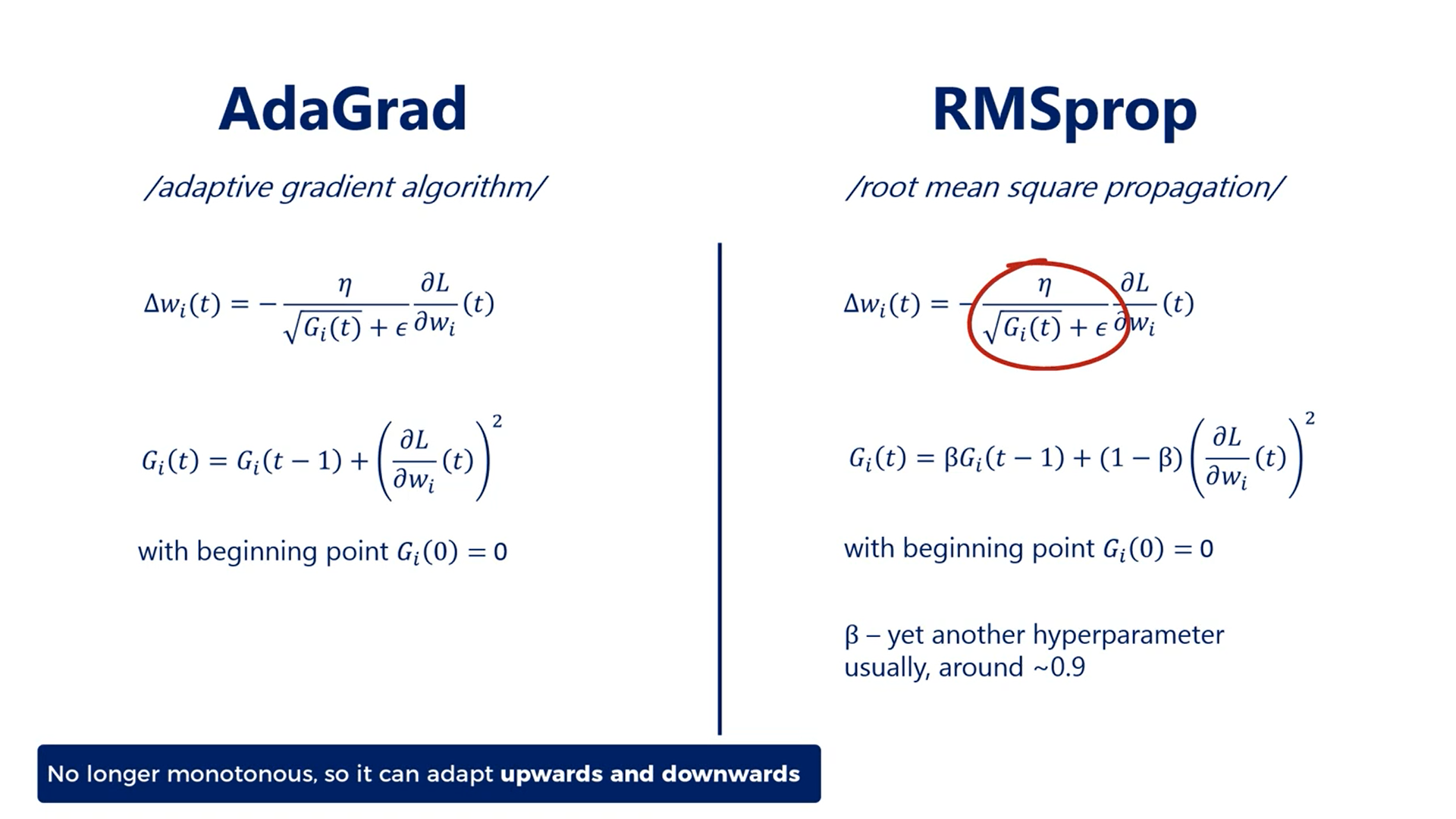

The second method is RMSprop or the root mean square propagation.

It is very similar to AdaGrad, the update rule is defined in the same way, but the G function is a bit different.

Empirical evidence shows that in this way the rate adapts much more efficiently.

Both methods are very logical and smart.

However, there is a third method based on these two which is superior.

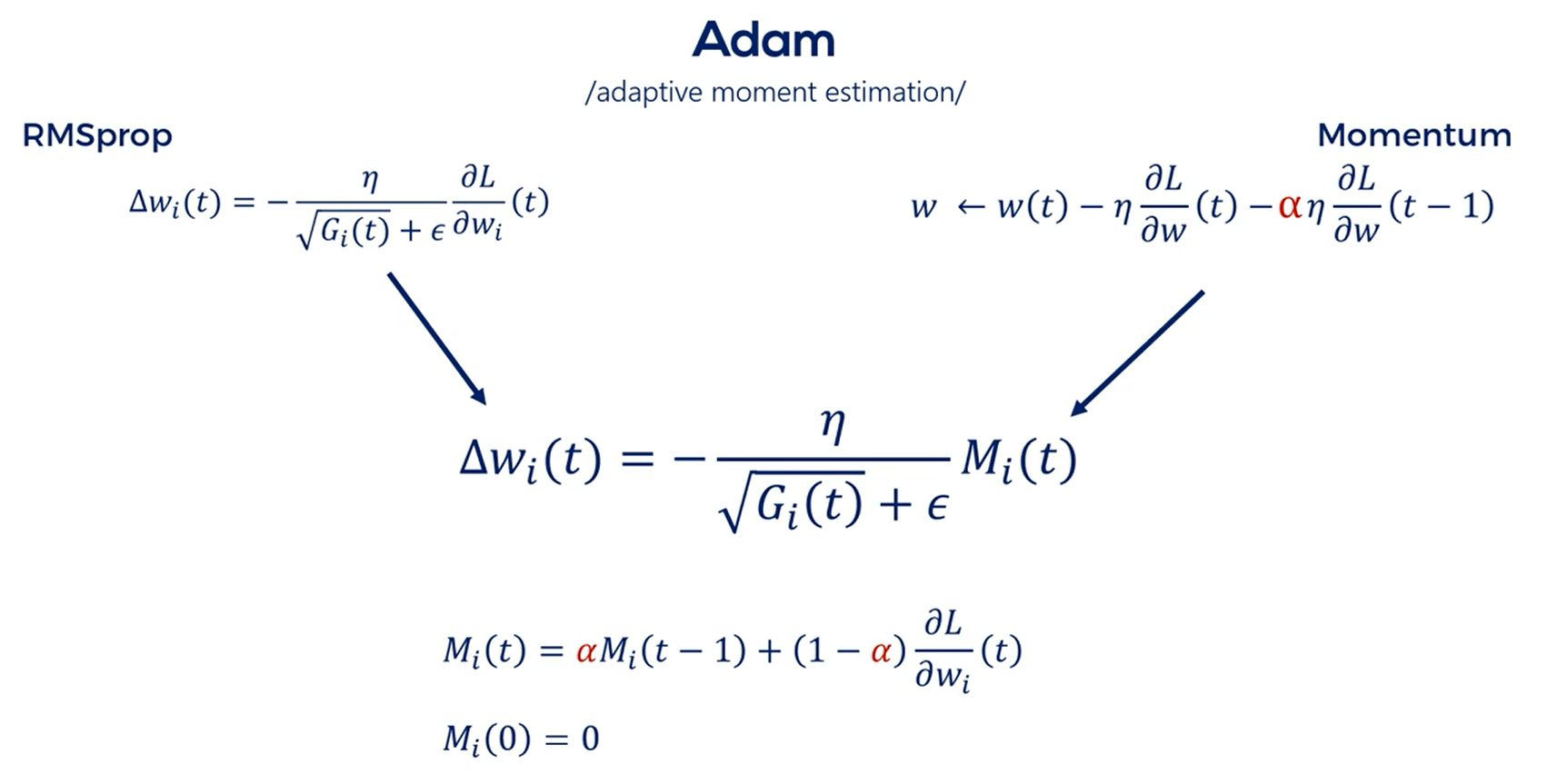

# Adaptative Moment Estimation (Adam)

So far we saw two different optimizers each brought a new bright idea to the update rule.

It would be even better if we can combine these concepts and obtain even better results right.

Adam is short for adaptive moment estimation.

If you noticed the AdaGrad and the RMSprop did not include momentum.

Adam steps on RMSprop and introduces momentum into the equation.

TIP

Always use Adam, as it is a cutting edge machine learning method.

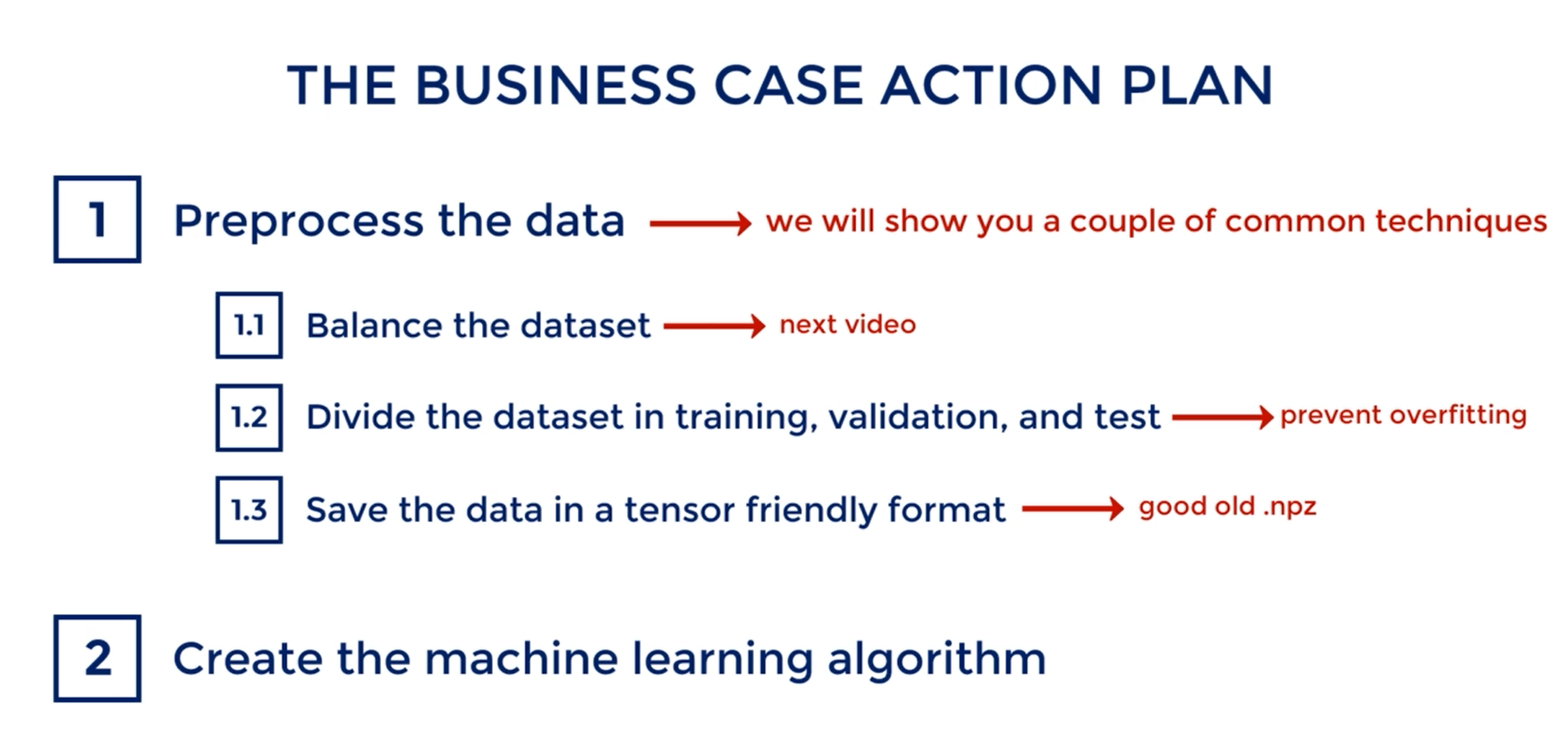

# Preprocessing

Pre-processing refers to any manipulation we apply to the data set before running it through the model.

Everything we saw so far was conditioned on the fact that we had already preprocessed our data in a way suitable for training.

You've already seen some pre-processing in the TensorFlow intro we created an .npz file all the training we did came from there.

So if you must work with data in an Excel file, csv or whatever saving into an .npz file would be a type of pre-processing.

In this section though we will mainly focus on data transformations rather than reordering as before.

What is the motivation for pre-processing. There are several important points.

The first one is about compatibility with the libraries we use. As we saw earlier TensorFlow works with tensors and not Excel spreadsheets. In Data Science you will often be given data in whatever format, and you must make it compatible with the tools you use.

Second we may need to adjust inputs of different magnitude.

A third reason is generalization.

Problems that seem different can often be solved by similar models standardizing inputs of different problems allows us to reuse the exact same models.

# Basic Preprocessing

We all start with one of the simplest transformations.

Often we are not interested in an absolute value but a relative value that's usually the case when working with stock prices.

If you open Google and type Apple's stock price what you'll get is Apple's stock price but with red or green numbers the relative change in Apple's stock price.

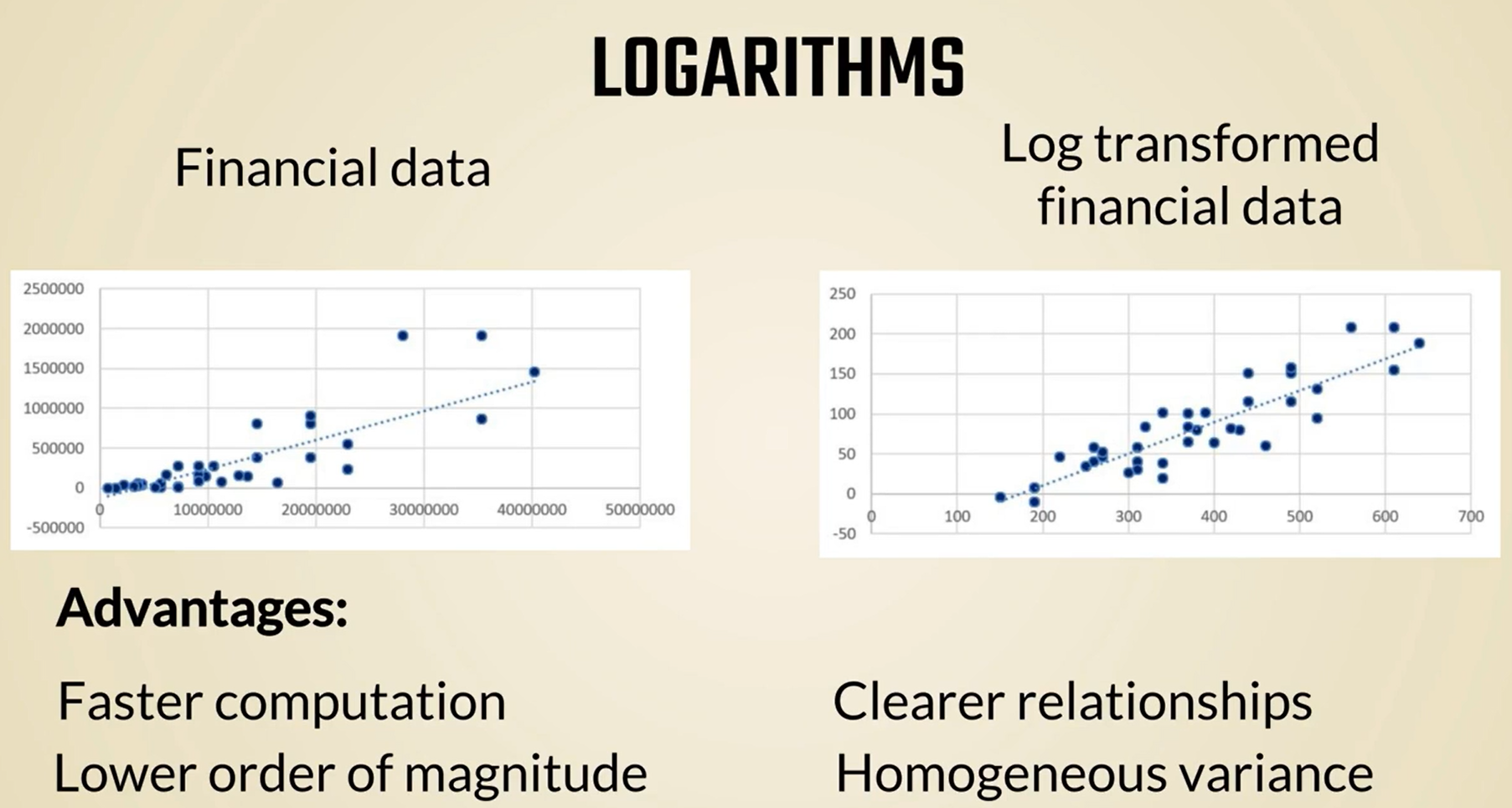

This is an example of pre-processing that is so common we don't consider it as such relative metrics are especially useful when we have a time series data like stock prices Forex exchange rates and so on still in the world of finance we can further transform these relative changes into logarithms many statistical and mathematical methods take advantage of logarithms as they facilitate faster computation in machine learning log transformations are not as common but can increase the speed of learning OK.

So this is one type of pre-processing we wanted to give as an example.

# Standardization

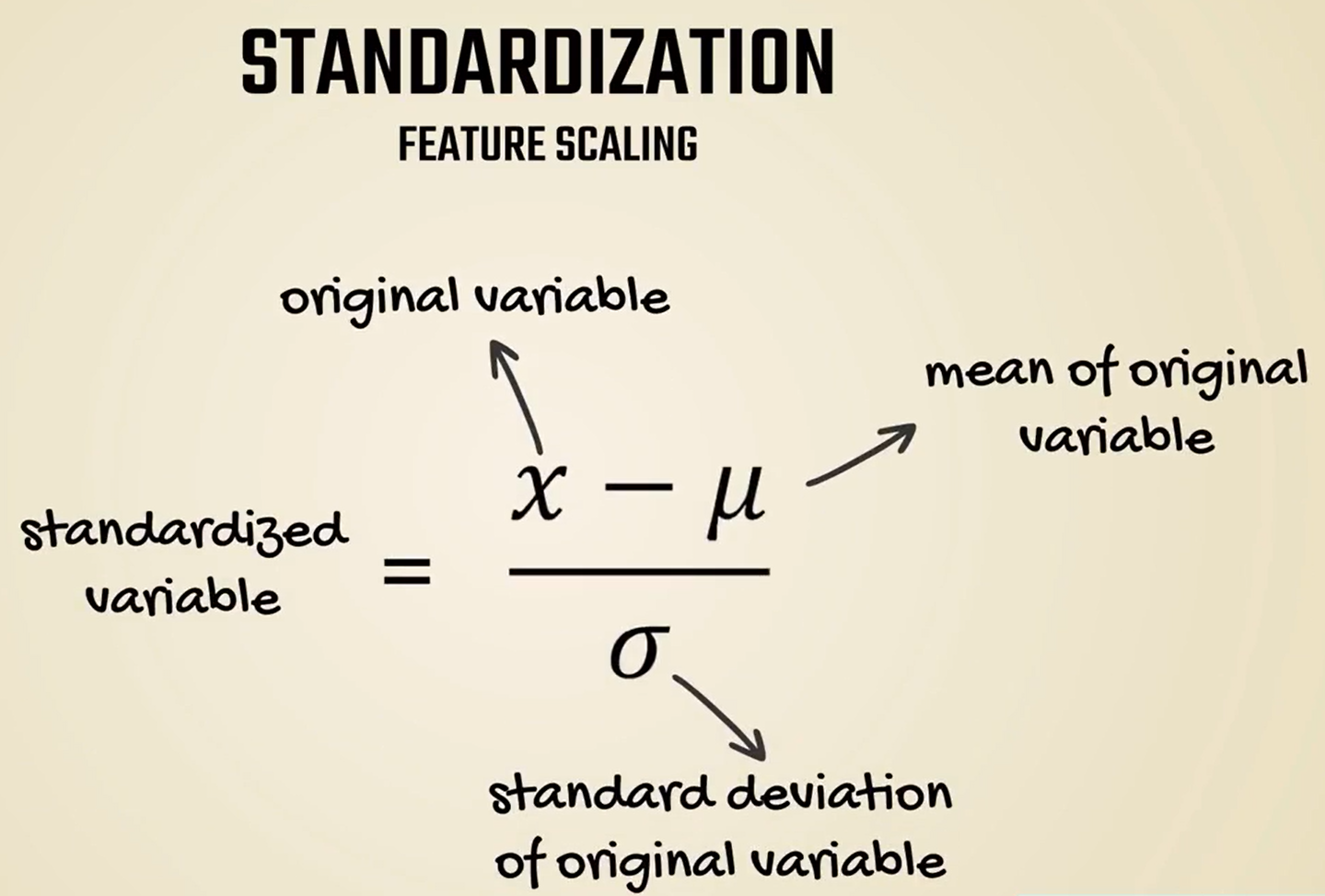

The most common problem when working with numerical data is about the difference in magnitudes.

As we mentioned in the first lesson an easy fix for this issue is standardization.

Other names by which you may have heard this term are features scaling and normalization.

However, normalization could refer to a few additional concepts even within machine learning, which is why we will stick with the term standardization and feature scaling.

Standardization or feature scaling is the process of transforming the data we are working with into a standard scale.

A very common way to approach this problem is by subtracting the mean and dividing by the standard deviation.

In this way, regardless of the dataset, we will always obtain a distribution with a mean of zero.

Besides standardization, there are other popular methods too.

We will surely introduce them without going too much in detail.

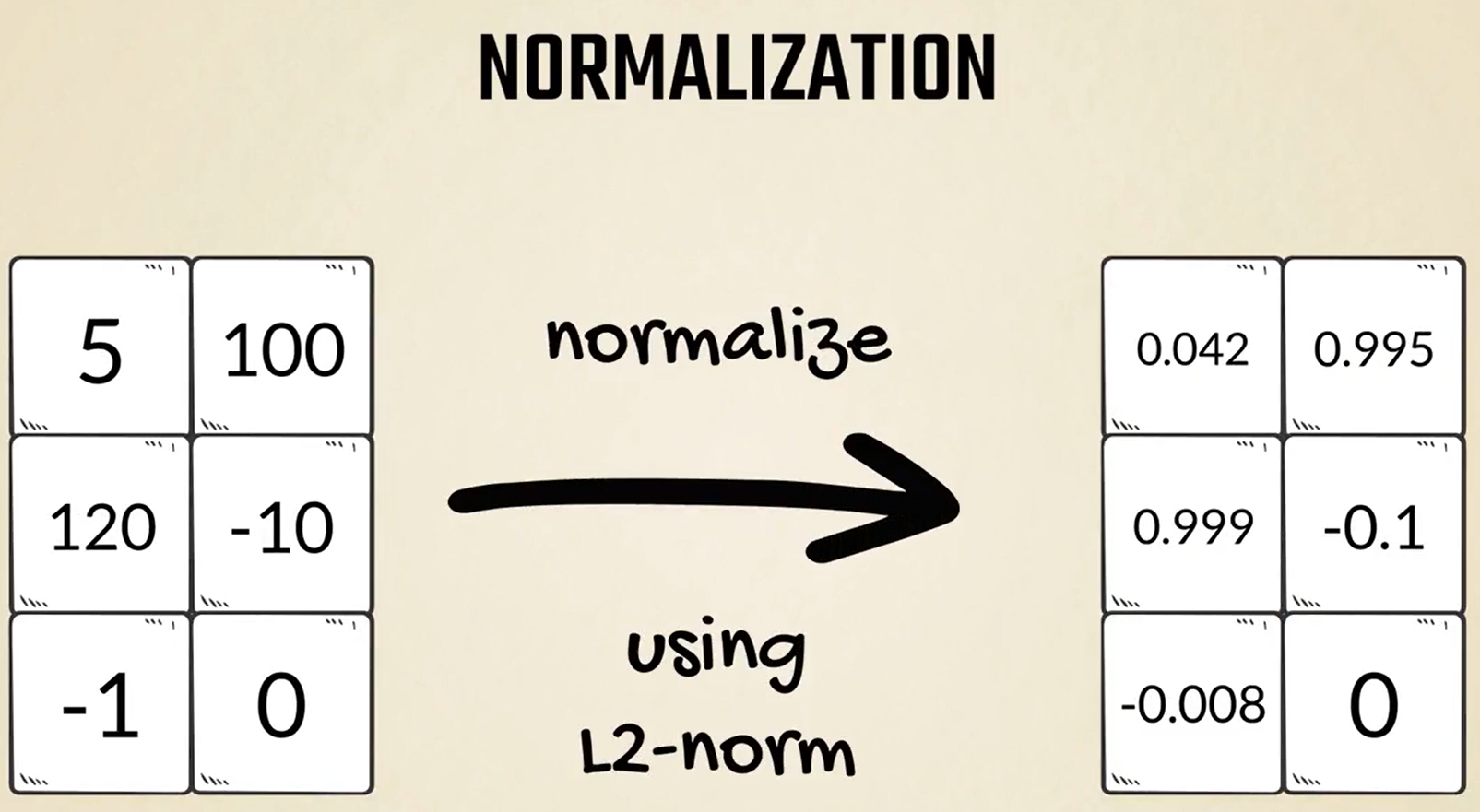

Initially, we said that normalization refers to several concepts.

One of them, which comes up in machine learning, often consists of converting each sample into a unit length vector using the L1 or L2 norm.

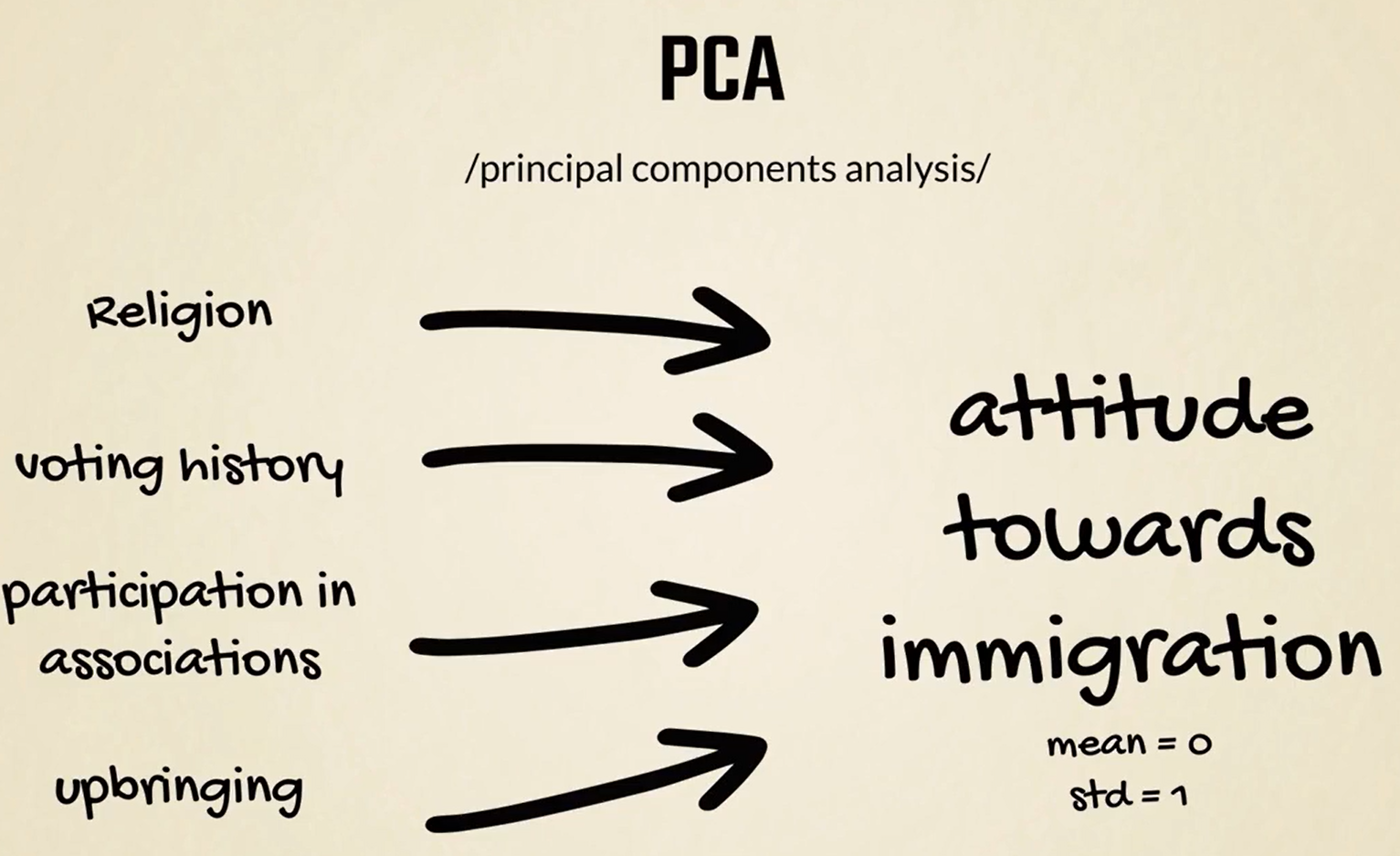

Another preprocessing method is PCA standing for principal components analysis.

It is a dimensioned reduction technique, often used when working with several variables referring to the same bigger concept or latent variable.

For instance, if we have data about one's religion voting history participation in different associations and upbringing, we can combine these four to reflect his or her attitude towards immigration.

This new variable will, normally, be standardized in a range with a mean of 0 and a standard deviation of 1.

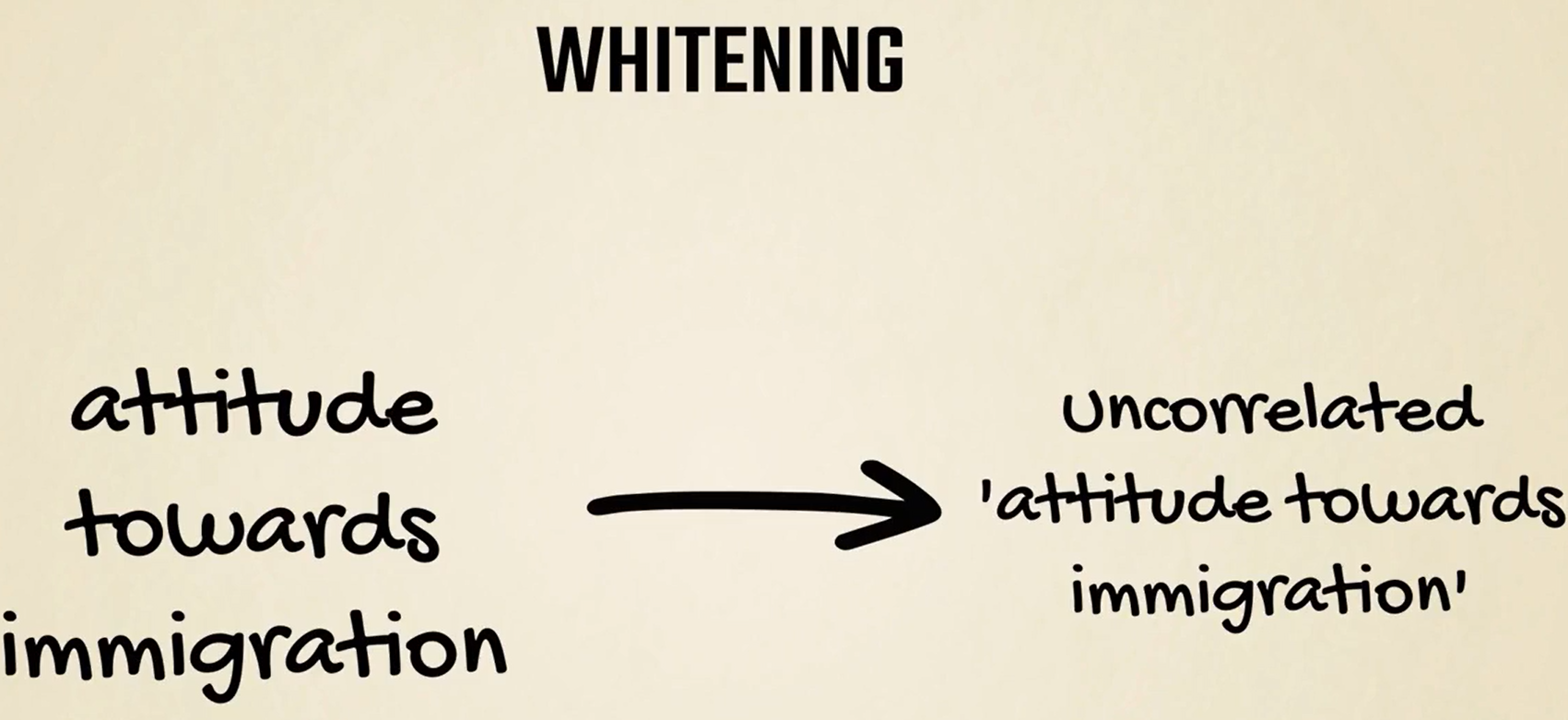

Whitening is another technique frequently used for pre-processing.

It is often performed after PCA, and removes most of the underlying correlations between data points.

Whitening can be useful when, conceptually, the data should be uncorrelated but that's not reflected in the observations.

We can't cover all the strategies as each strategy is problem specific. However, standardization is the most common one, and is the one we will employ in the practical examples.

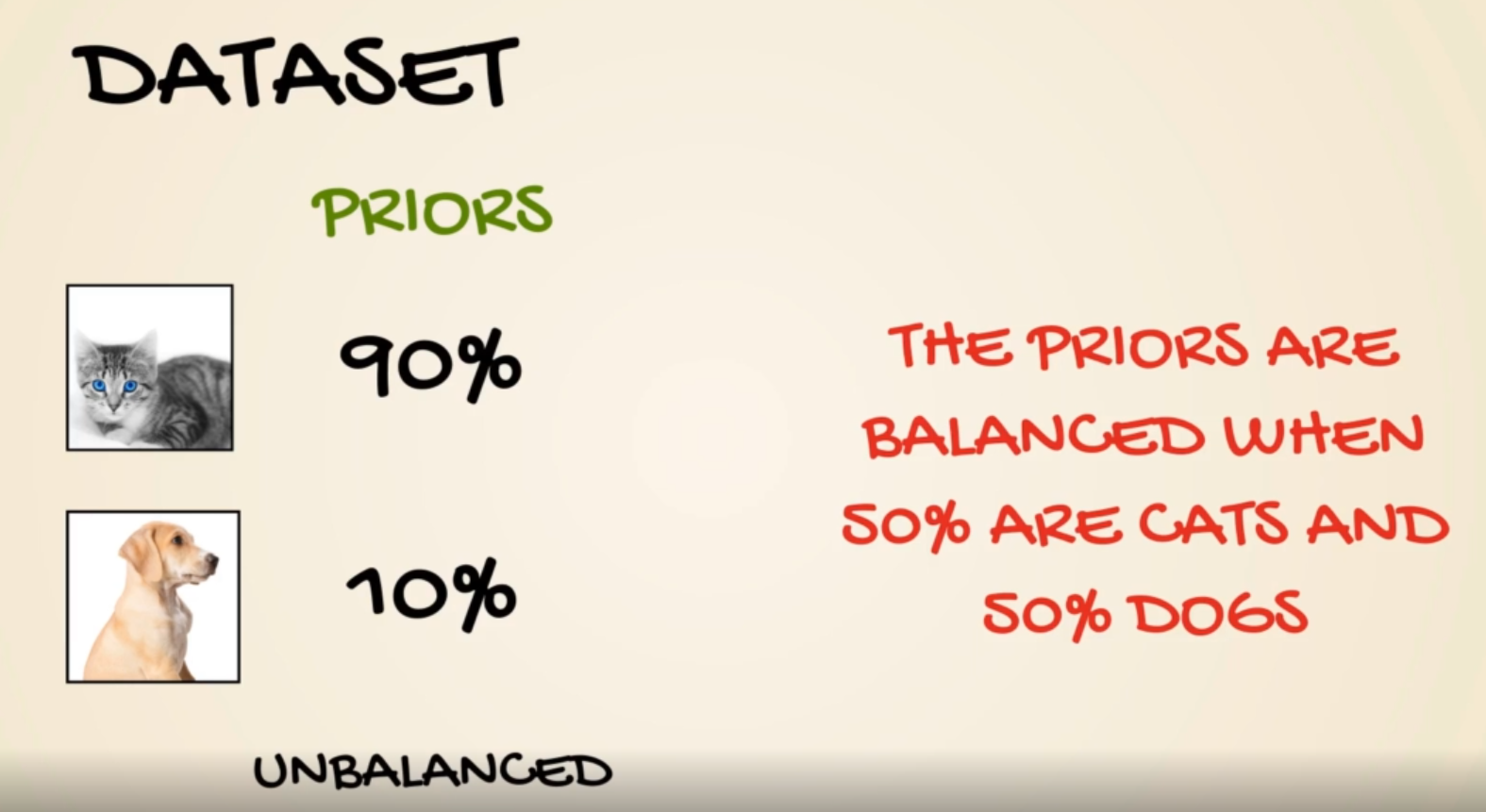

# Categorical Data

Often though we must deal with categorical data in short categorical data refers to groups or categories, such as our cat dog examples.

But the machine learning algorithm takes only numbers as values, doesn't it.

Therefore, the question when working with categorical data is: How to convert a cat category into a number, so we can input it into a model or output.

In the end, obviously, a different number should be associated with each category right or better a Tensor.

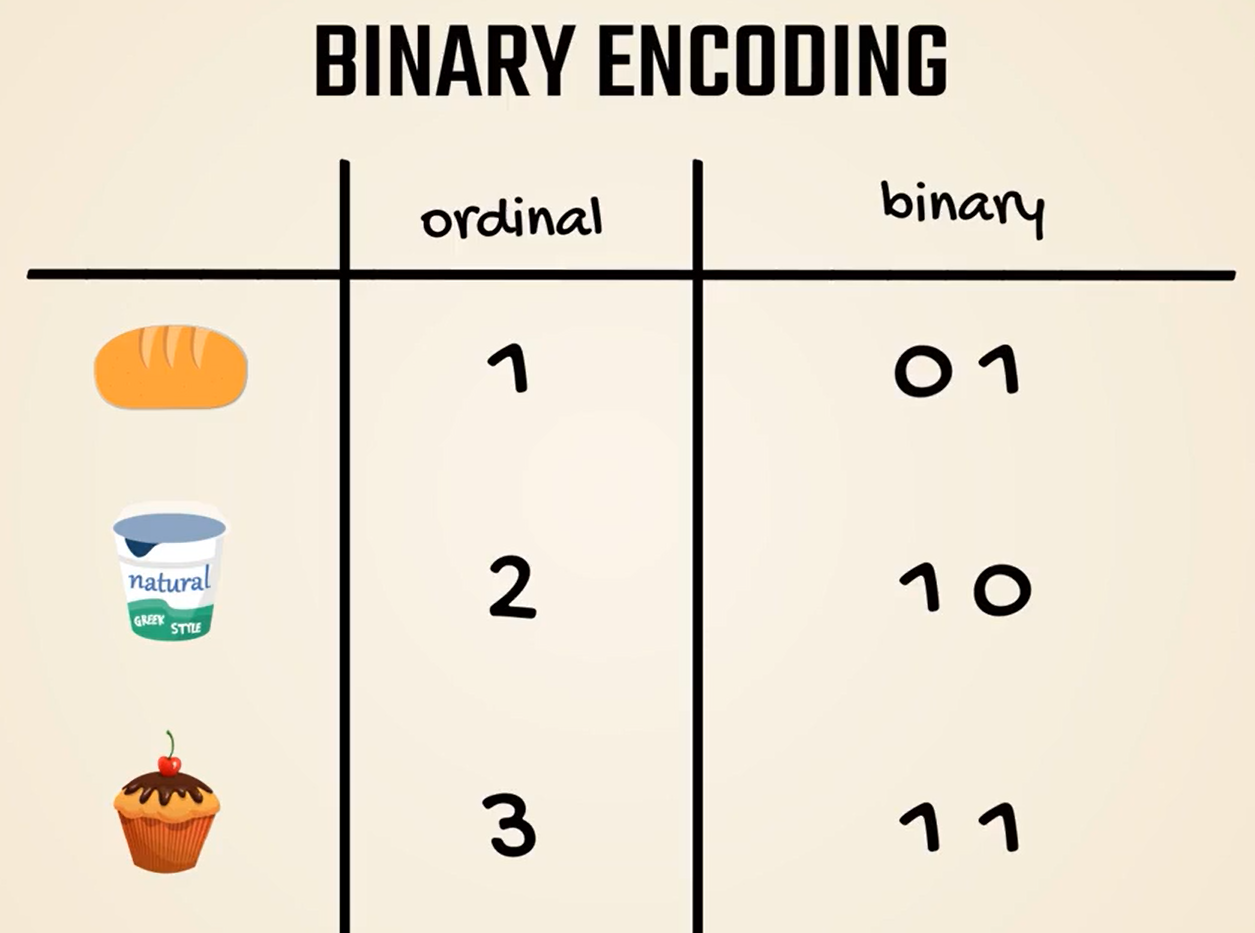

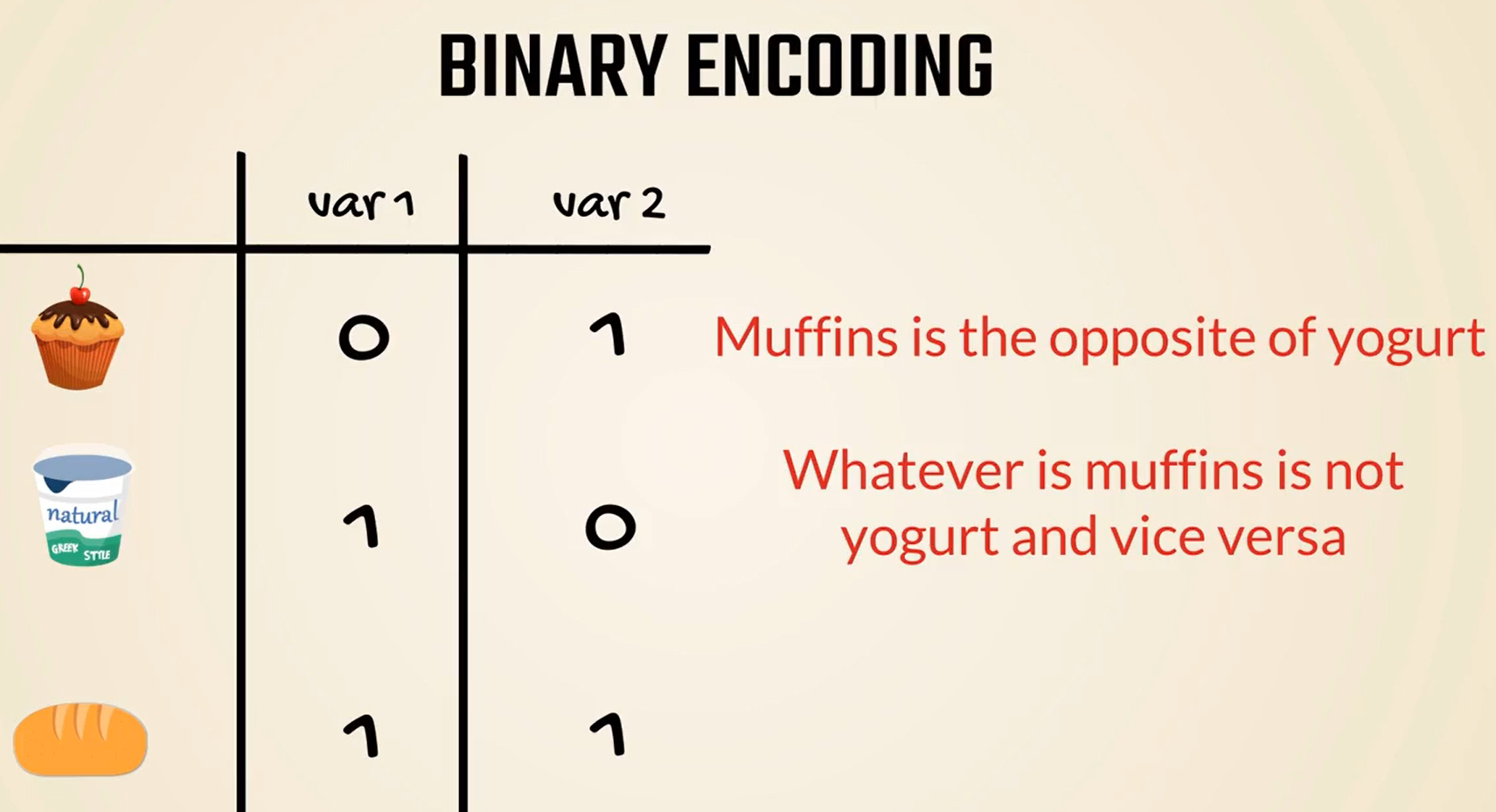

# Binary encoding

Let's introduce binary encoding.

We will start from ordinal numbers.

Bread is represented by the number 1, yogurt by the number 2 and muffin is designated with 3.

Binary encoding implies we should turn these numbers into binary.

The next step of the process is to divide these into different columns as if we were creating two new variables.

We have differentiated between the three categories and have removed the order.

However, there are still some implied correlations between them.

For instance, bread and yogurt seem exactly the opposite of each other.

Its like we are saying whatever is bread is not yogurt and vice versa.

Even if this makes sense if we encode them in a different way, this opposite correlation would be true for muffins and yogurt but no longer for bread.

Therefore, binary encoding proves problematic, but is a great improvement regarding the initial ordinal method.

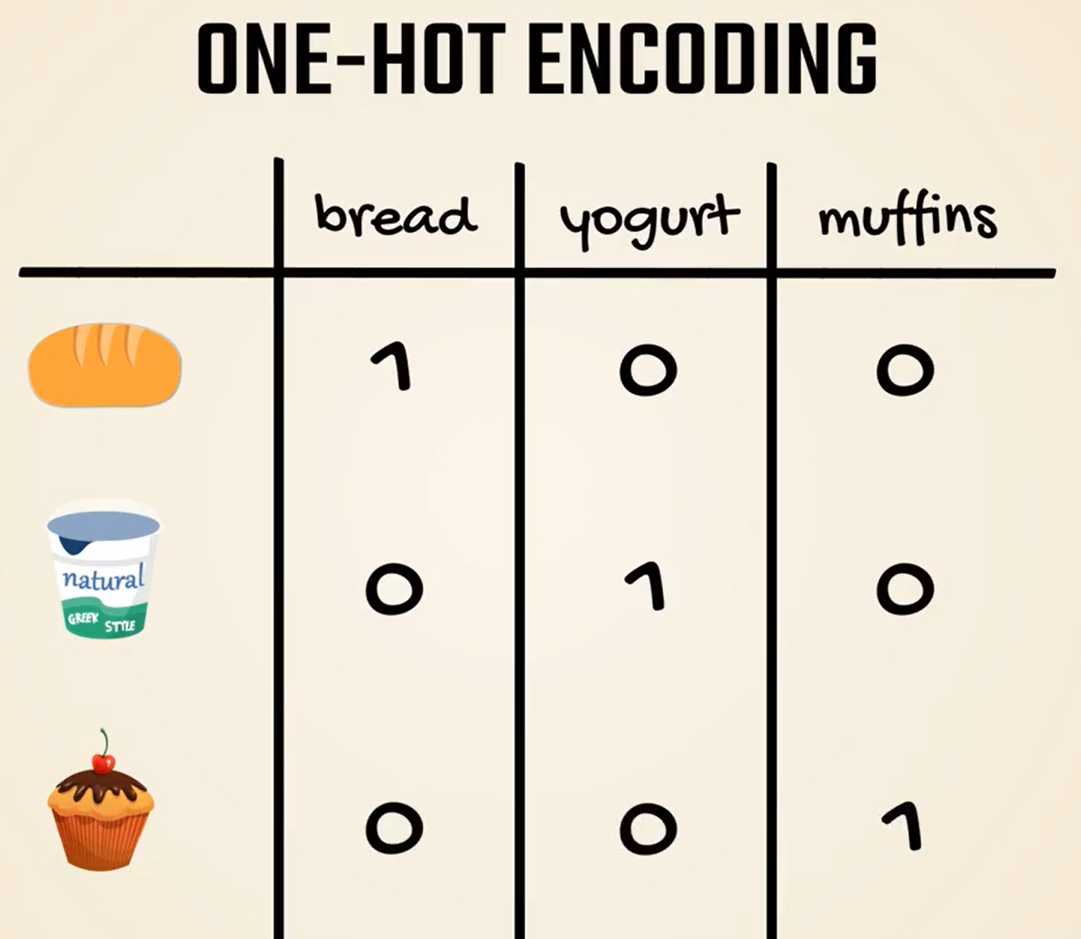

# One-Hot encoding

Finally, we have the so called One-Hot encoding one ha is very simple and widely adopted.

It consists of creating as many columns as there are possible values.

Here we have three products thus we need three columns or three variables.

Imagine these variables as asking the question: Is this product bread? Is this product yogurt? and is this product Muffin?

One means yes and Zero means no.

Thus, there will be only one value one and everything else will be zeroed.

This means the products are uncorrelated and unequivocal which is useful and usually works like a charm.

There is one big problem with one high encoding though one encoding requires a lot of new variables.

There is one big problem with one high encoding though one encoding requires a lot of new variables.

For example, Ikea offers around thousand products.

Do we want to include 12000 columns in our inputs.

Definitely not. If we use binary the 12000 products would be represented by 16 columns only since the 12000 product would be written like this in binary.

This is exponentially lower than the 12000 columns we would need for one high encoding.

In such cases we must use binary even though that would introduce some unjustified correlations between the products.

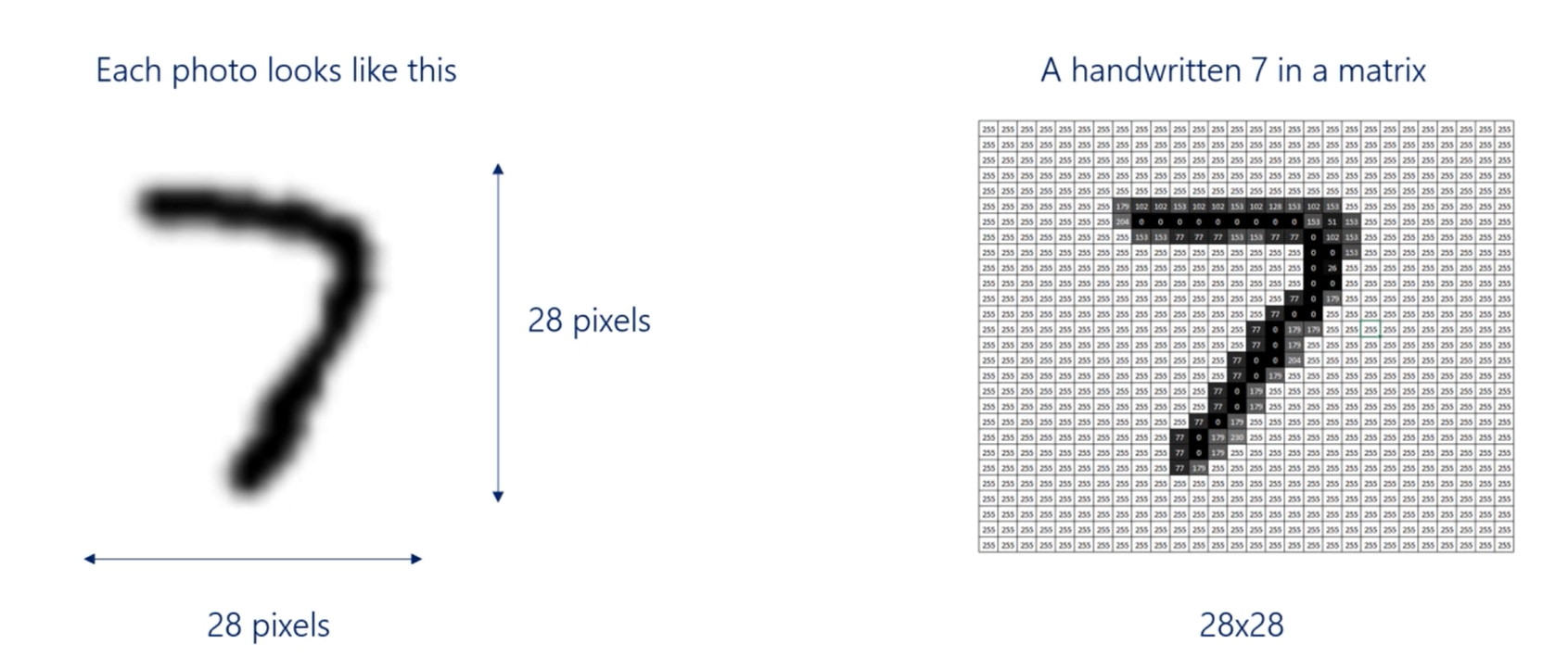

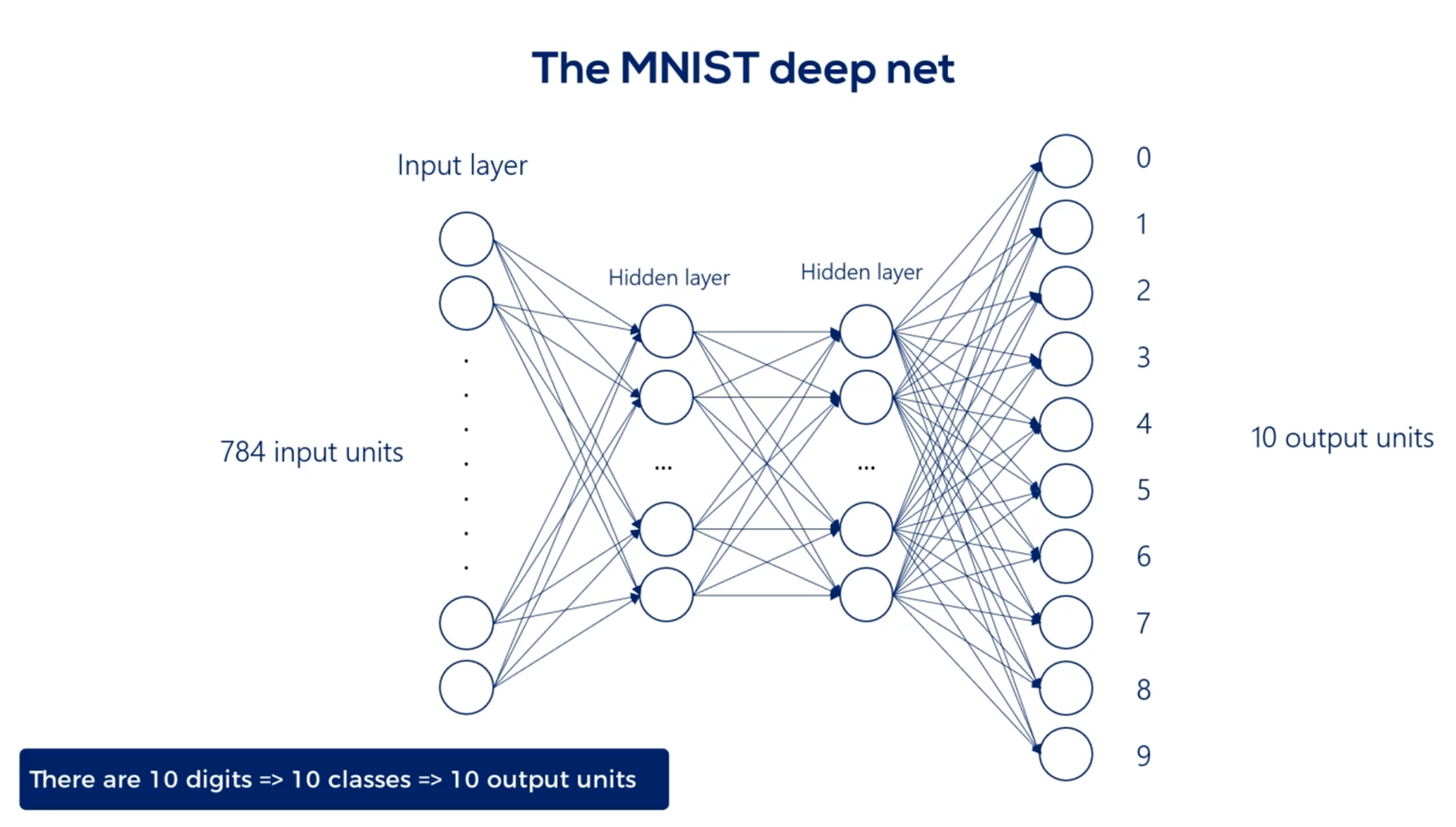

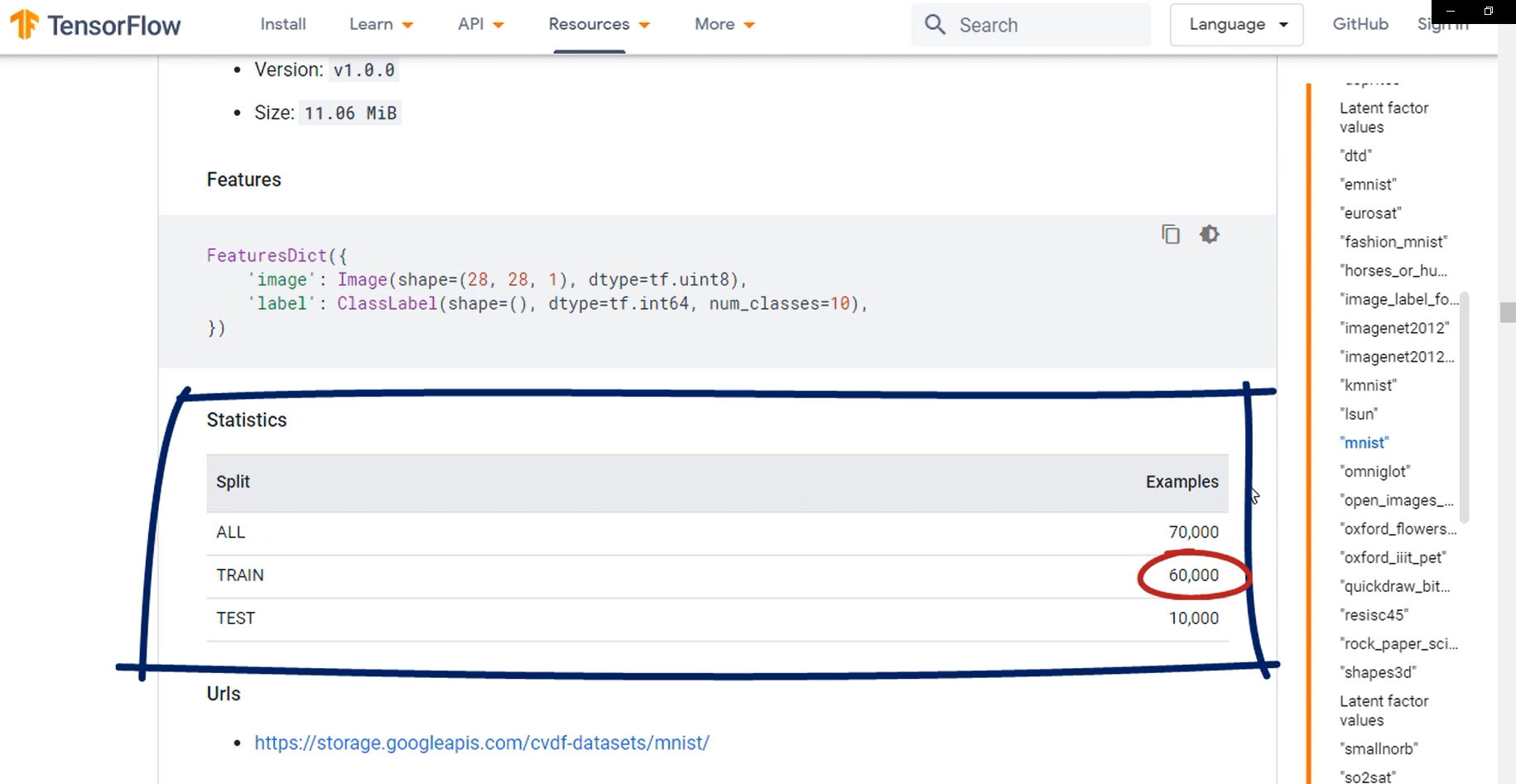

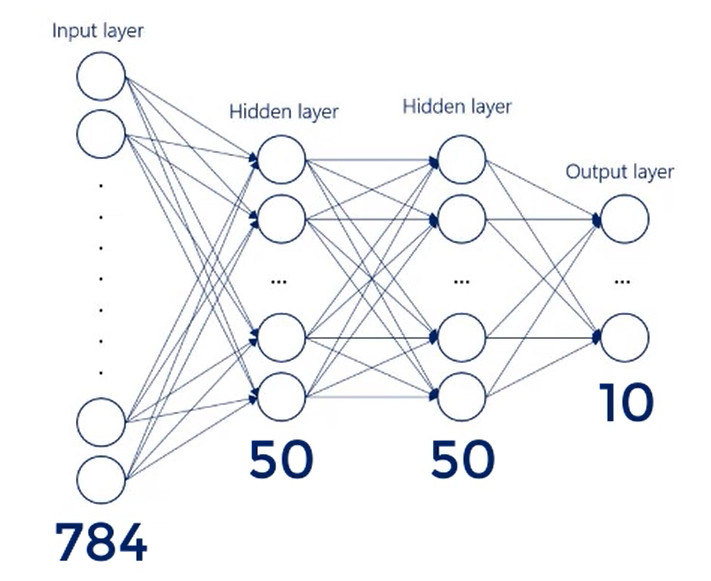

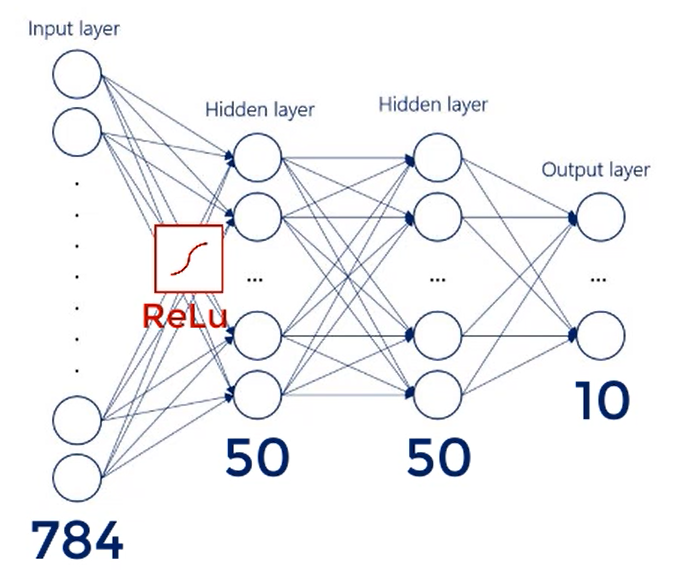

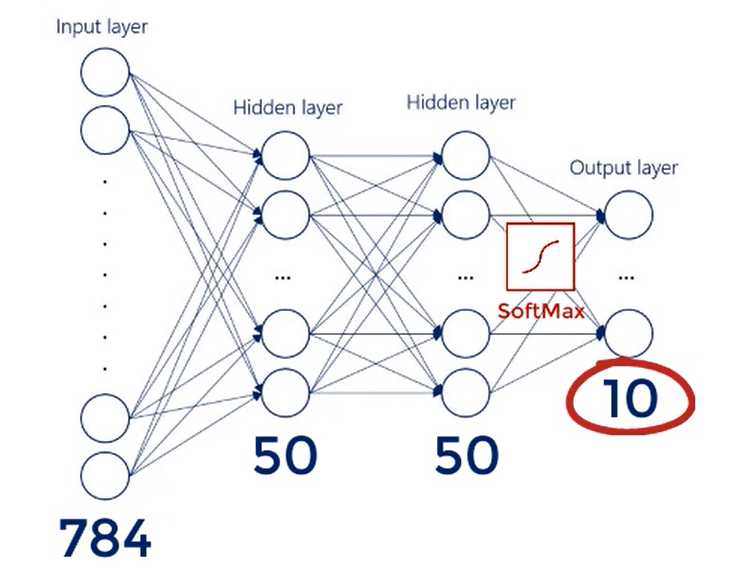

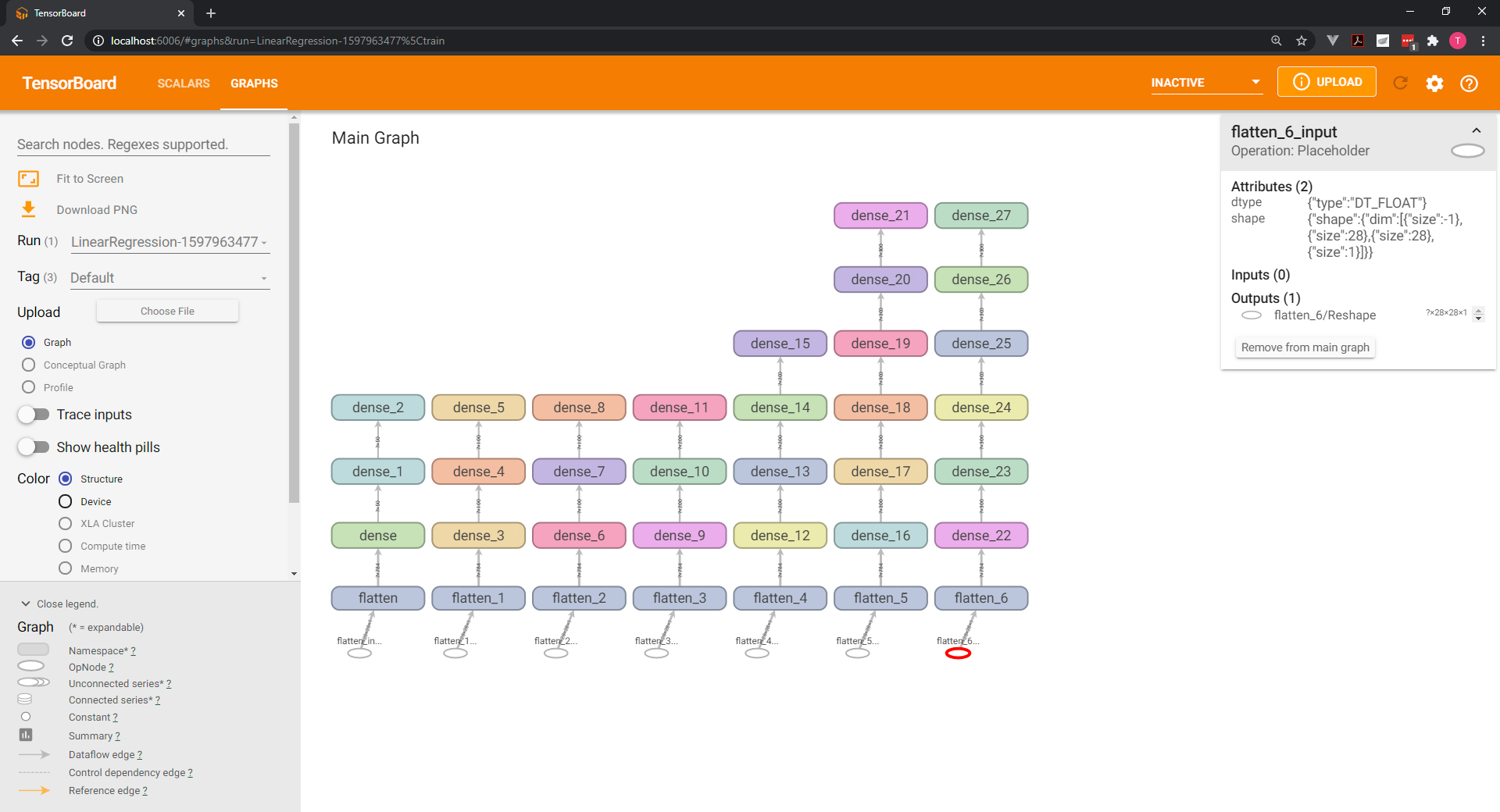

# The MNIST problem

How are we going to approach this image recognition problem.

Each image in the amnesty data set is 28 pixels by 28 pixels. It's on a gray scale, so we can think about the problem as a 28 by 28 matrix, where input values are from 0 to 255, 0 corresponds to purely black and 255 to purely white.

For example, a handwritten seven and a matrix would look like this that's an approximation.

But the idea is more or less the same. Now because all the images are of the same size, a 28 by 28 photo will have 784 pixels.

The approach for deep feed forward neural networks is to transform or flatten each image into a vector of length 784, so for each image we would have 784 inputs.

Each input corresponds to the intensity of the color of the corresponding pixel.

We will have 784 input units in our input layer, then we will linearly combine them and add a non linearity to get the first hidden layer.

For our example, We will build a model with two hidden layers. Two hidden layers are enough to produce a model with very good accuracy.

Finally, we will produce the output layer.

There are 10 digits so 10 classes.

Therefore, we will have 10 output units in the output layer

The utput will then be compared to the targets.

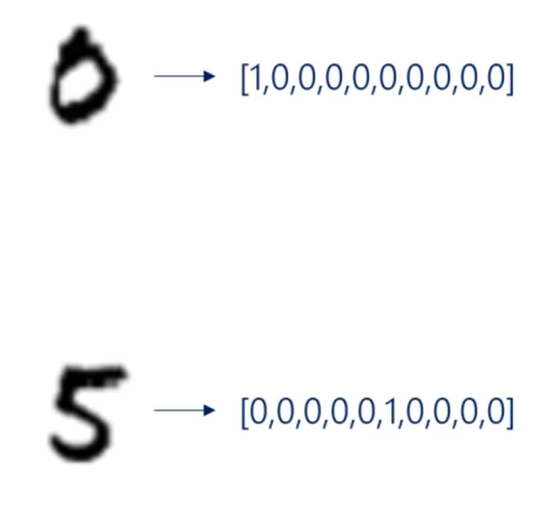

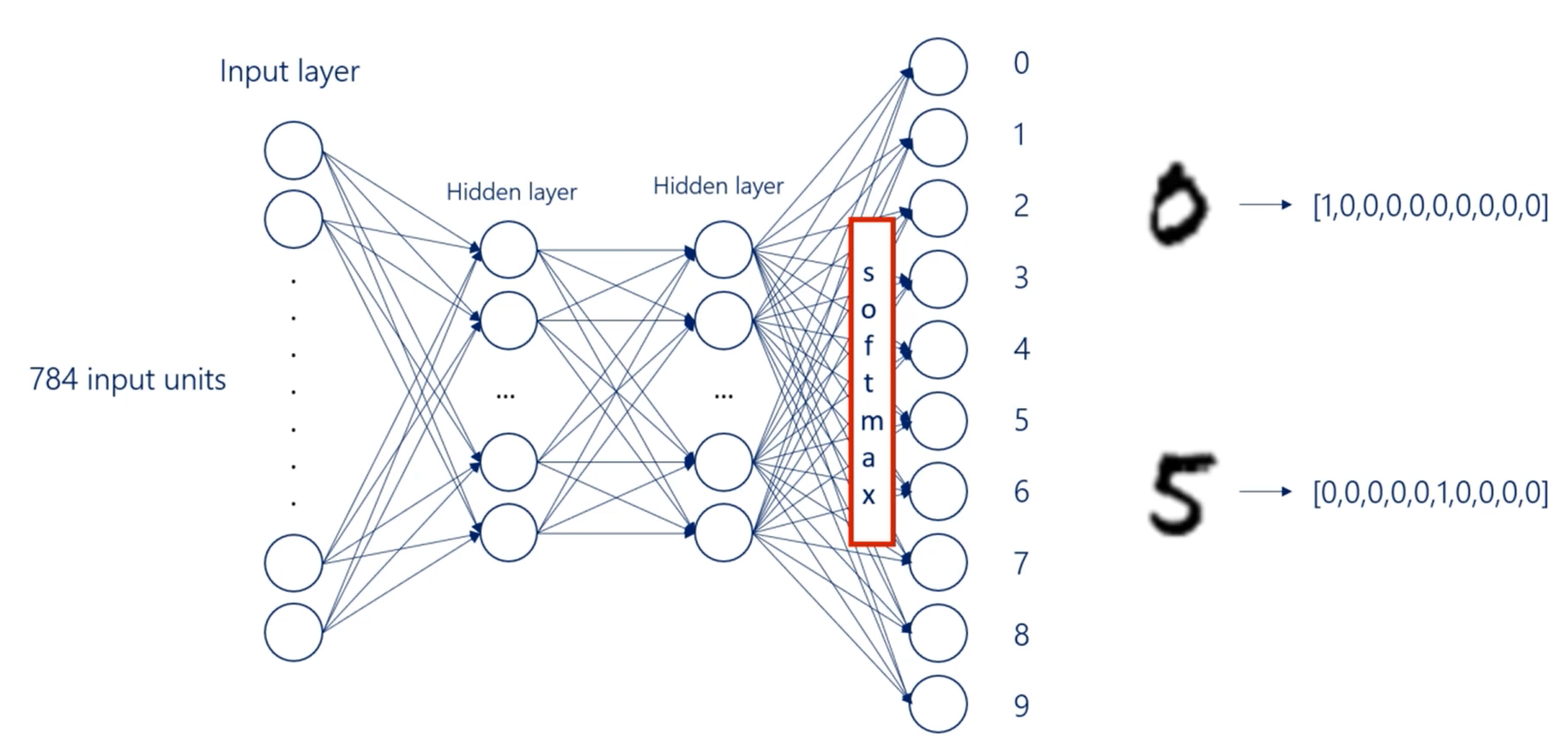

It will use one hot encoding for both the outputs and the targets.

For example, the digit 0 will be represented by this vector while the digit 5 by that one.

Since we would like to see the probability of a digit being rightfully labeled, we will use a soft Max activation function for the output layer.

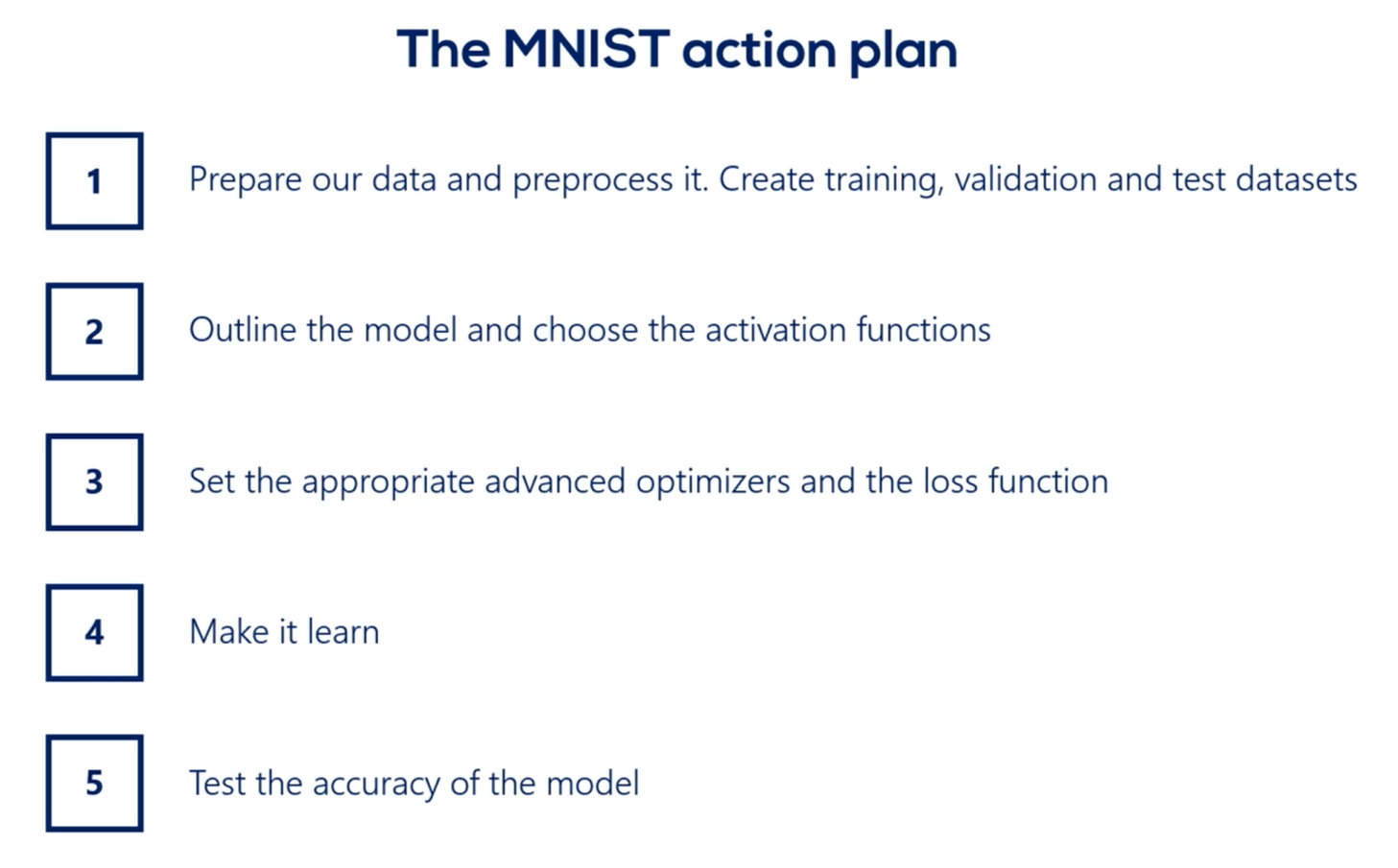

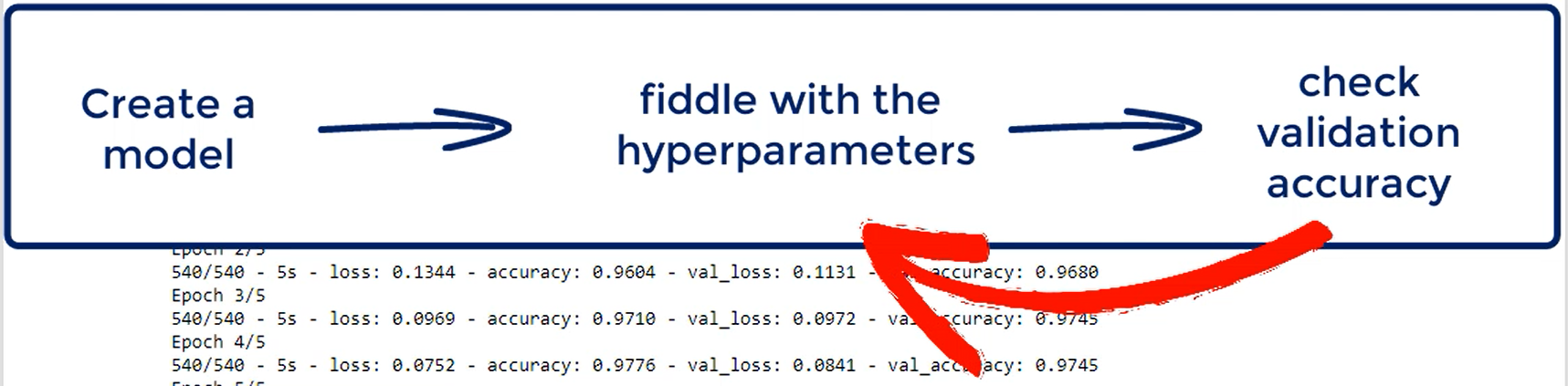

let's walk through the action plan.

First, we must prepare our data and pre process it a bit. We will create training validation and test data sets as well as select the batch size.

Second, we must outline the model and choose the activation functions we want to employ.

Third, we must set the appropriate advanced optimizers and the loss function.

Fourth, we will make it learn the algorithm will back propagate its way to accuracy at each epoch we will validate.

Finally, we will test the accuracy of the model regarding the test dataset.

# MNIST example

# Import the relevant packages

import numpy as np

import tensorflow as tf

import tensorflow_datasets as tfds

2

3

TIP

pip install tensorflow_datasets if pycharm doesn't have it in the project libraries to be installed.

# Data

There are two tweaks we'd rather make.

First, we can set the argument as_supervised=True.

This will load the data set in a two tuple structure input and target.

In addition, let's include one final argument with_info=True and stored in the variable mnist_info.

This will provide us with a tuple containing information about the version, features, and number of samples of the dataset.

TIP

tfds.load(name, with_info, as_supervised) Loads a dataset from TensorFlow datasets .

-> as_supervised = Trues, loads the data in a 2-tuple structure [input,target].

-> with_info = True, provides a tuple containing info about version, features, #samples of the dataset

mnist_dataset, mnist_info = tfds.load(name='mnist',

with_info=True,

as_supervised=True)

2

3

# Preprocessing the data

It's time to extract the train data and the test data.

Lucky for us, there are built in references that will help us achieve this.

mnist_train, mnist_test = mnist_dataset['train'], mnist_dataset['test']

Where is the Validation Dataset?

By default, TensorFlow MNIST has training and testing datasets, but no validation dataset.

Sure that's one of the more irritating properties of the tensor flow data sets module, but, in fact, it gives us the opportunity to actually practice splitting datasets on our own.

The train data set is much bigger than the test one. So we'll take our validation data from the train data set.

The easiest way to do that is to take an arbitrary percentage of the train data set to serve as validation. So let's take 10 percent of it.

We should start by setting the number of validation samples. We can either count the number of training samples, or we can use the amnesty info variable we've created earlier.

num_validation_samples = 0.1 * mnist_info.splits['train'].num_examples

2

OK, so we will get a number equal to the number of training samples divided by 10.

We are not sure that this will be an integer though, it may be 10000.2, which is not really a possible number of validation samples.

To solve this issue effortlessly, we can override the number of validation samples variable with:

num_validation_samples = tf.cast(num_validation_samples, tf.int64)

This will cast the value of stored in the number of validation samples variable to an integer, thereby preventing any potential issues.

Now, let's also store the number of test samples and a dedicated variable.

num_test_samples = mnist_info.splits['test'].num_examples

num_test_samples = tf.cast(num_test_samples, tf.int64)

2

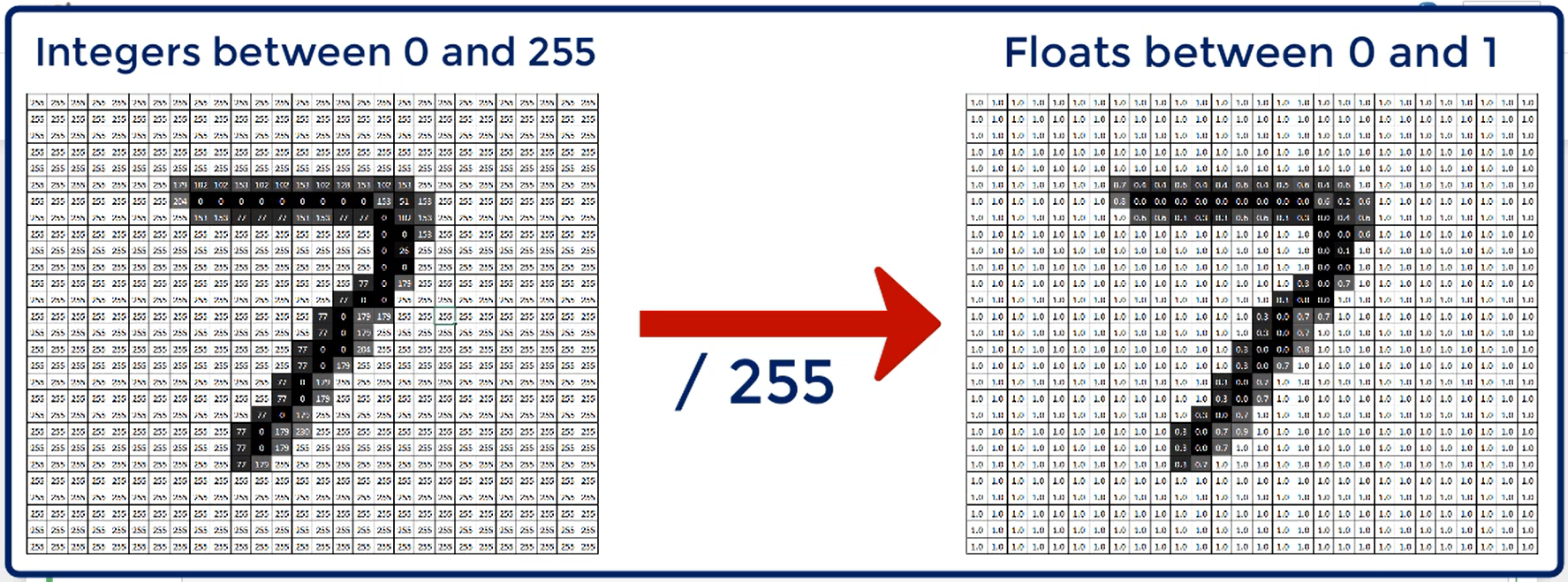

Normally, we would also like to scale our data in some way to make the result more numerically stable.

In this case, we will simply prefer to have inputs between 0 and 1.

With that said, let's define a function that will scale the inputs called scale.

It will take an MNIST image and its label.

Let's make sure all values are floats so we will cast the image local variable to a float 32.

Next, we'll proceed by scaling it. As we already discussed the MNIST images contain values from 0 to 255 representing the 256 shades of gray.

Therefore, if we divide each element by 255 we'll get the desired result. All elements will be between 0 and 1.

the dot at the end, once again, signifies that we want a result to be a float.

def scale(image, label):

image = tf.cast(image, tf.float32)

image /= 255.

return image, label

2

3

4

So, this was a very specific function to write right?

In fact there is a TensorFlow method called map, which allows us to apply a custom transformation to a given dataset.

Moreover, this map can only apply transformations that can take an input and a label and return an input and a label.

That's what we build our scale function this way.

Note that you can scale your data in other ways if you see fit.

Just make sure that the function takes image and label and returns image and label. Thus you are simply transforming the values.

We've already decided we will take the validation data from MNIST train so:

This will scale the whole train dataset and store it in our new variable.

scaled_train_and_validation_data = mnist_train.map(scale)

test_data = mnist_test.map(scale)

2

# Shuffling

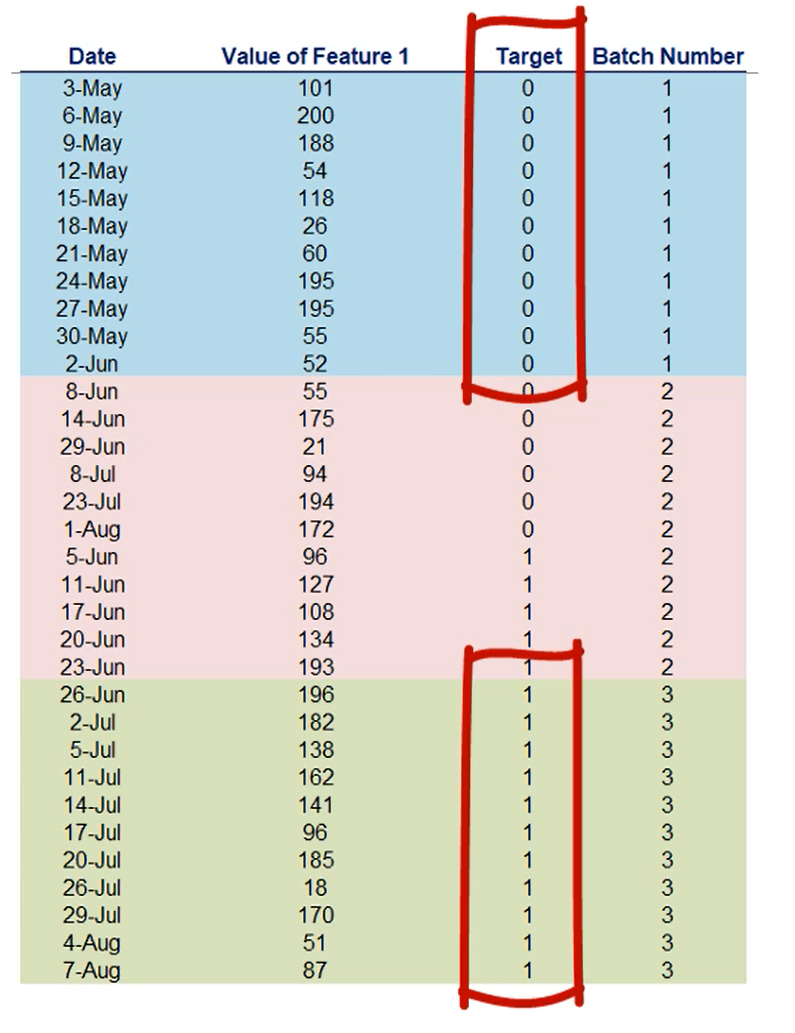

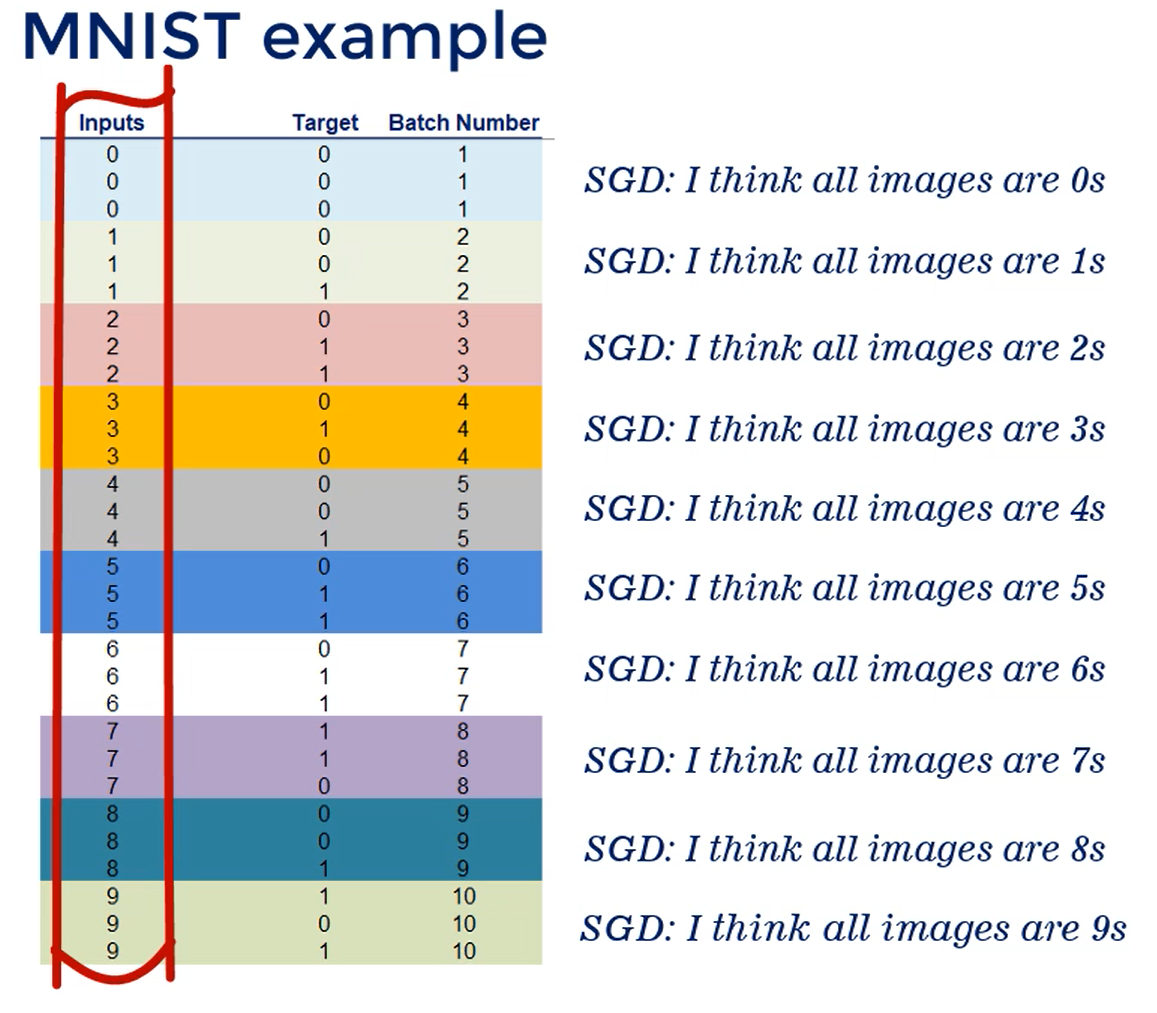

In this lecture will first shuffle our data and then create the validation dataset. Shuffling is a little trick we like to apply in the pre processing stage.

When shuffling we are basically keeping the same information, but in a different order.

It's possible that the targets are stored in ascending order resulting in the first X batches having only 0 targets and the other batches having only 1 as a Target.

Since we'll be batching we'd better shuffle the data, it should be as randomly spread as possible, so that batching works as intended.

Let me give you an unrelated example.

Imagine the data is ordered and we have 10 batches each batch contains only a given digit.

So the first batch has only zeros, the second only ones the third only twos etc..

This will confuse the stochastic gradient descent algorithm, because each batch is homogenous inside it but completely different from all other batches, causing the loss to differ greatly.

In other words, we want the data shuffled.

OK we should start by defining a buffer size, to say, 10000.

BUFFER_SIZE = 10000

This buffer size parameter is used in cases when we are dealing with enormous datasets.

In such cases, we can't shuffle the whole data set in one go, because we can't possibly fit it all in the memory of the computer.

So, instead, we must instruct TensorFlow to take samples ten thousand at a time, shuffle them and then take the next ten thousand.

Logically, if we set the buffer size to twenty thousand, it will take twenty thousand samples at once.

Note that if the buffer size is equal to 1, no shuffling will actually happen.

So, if the buffer size is equal or bigger than the total number of samples shuffling will take place at once and shuffle them uniformly.

Finally, if we have a buffer size that's between one and the total sample size we'll be optimizing the computational power of our computer.

All right, time to do the shuffle.

Luckily for us, there is a shuffle method readily available, and we just need to specify the buffer size.

shuffled_train_and_validation_data = scaled_train_and_validation_data.shuffle(BUFFER_SIZE)

Once we have scaled and shuffle the data we can proceed to actually extracting the train and validation data sets.

Our validation data will be equal to 10 percent

of the training set, which we have already calculated and stored in num_validation_samples.

We can use the method take() to extract that many samples.

validation_data = shuffled_train_and_validation_data.take(num_validation_samples)

We have successfully created a validation dataset.

In the same way we can create the train data by extracting

all elements but the first X validation samples, an appropriate method here is skip().

train_data = shuffled_train_and_validation_data.skip(num_validation_samples)

We will be using Mini-batch gradient descent to train our model.

As we explained before, this is the most efficient way to perform deep learning, as the tradeoff between accuracy and speed is optimal.

To do that, we must set a batch size and prepare our data for batching.

Remember:

batch size = 1 = Stochastic gradient descent (SGD)

batch size = # samples = (single batch) GD

So, ideally we want:

1 < batch size < # samples = mini-batch GD

So, we want a number relatively small with regard to the data set but reasonably high. So what would allow us to preserve the underlying dependencies

let's set the BATCH_SIZE = 100. that's yet another hyper parameter that you may play with when you fine tune the algorithm.

There is a method batch we can use on the data set to combine its consecutive elements in the batches. Let's start with a train data we just created.

I'll simply overwrite it, as there is no need to preserve a version of this data that is not batched.

This will add a new column to our tensor that would indicate to the model how many samples it should take in each batch.

train_data = train_data.batch(BATCH_SIZE)

What about the validation data?

Well, since we won't be back propagating on the validation data, but only forward propagating we don't really need to batch.

Remember that batching was useful in updating weights, only once per batch, which is like 100 samples rather than at every sample.

Hence, reducing noise in the training updates.

So, whenever we validate or test we simply forward propagate once. When batching, we usually find the average loss and average accuracy.

During validation and testing we want the exact values.

Therefore, we should take all the data at once.

Moreover, when forward propagating we don't use that much computational power so, it's not expensive to calculate the exact values.

However, the model expects our validation set in batch form too. That's why we should overwrite validation data with:

validation_data = validation_data.batch(num_validation_samples)

Here will have a single batch with a batch size equal to the total number of validation samples.

In this way, we'll create a new column in our tensor indicating that the model should take the whole validation dataset at once, when it utilizes it.

To handle our test data, we don't need to batch it either. We'll take the same approach we use with the validation set.

test_data = test_data.batch(num_validation_samples)

Finally, our validation data must have the same shape and object properties as the train and test data.

The MNIST data is iterable and in 2-tuple format, as we set the argument as_supervised=True. Therefore, we must extract and convert the validation inputs and targets appropriately.

Let's store them in validation_inputs, validation_targets and set them to be equal to next(iter(validation_data)).

validation_inputs, validation_targets = next(iter(validation_data))

TIP

iter() creates an object which can be iterated one element at a time (e.g. in a for loop or while loop).

iter() is the python syntax for making the validation data an iterator.

By default, that will make the data set iterable, but will not load any data.

next() loads the next batch. Since there is only one batch, it will load the inputs and the targets.

# Model

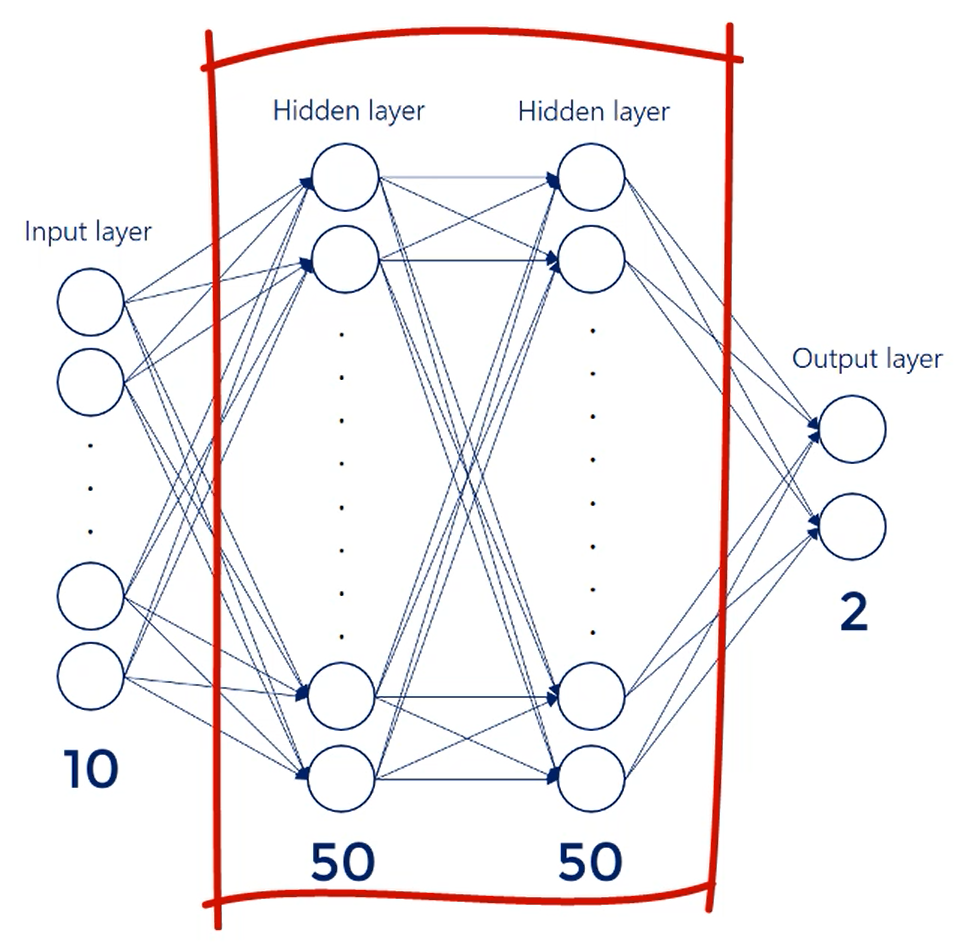

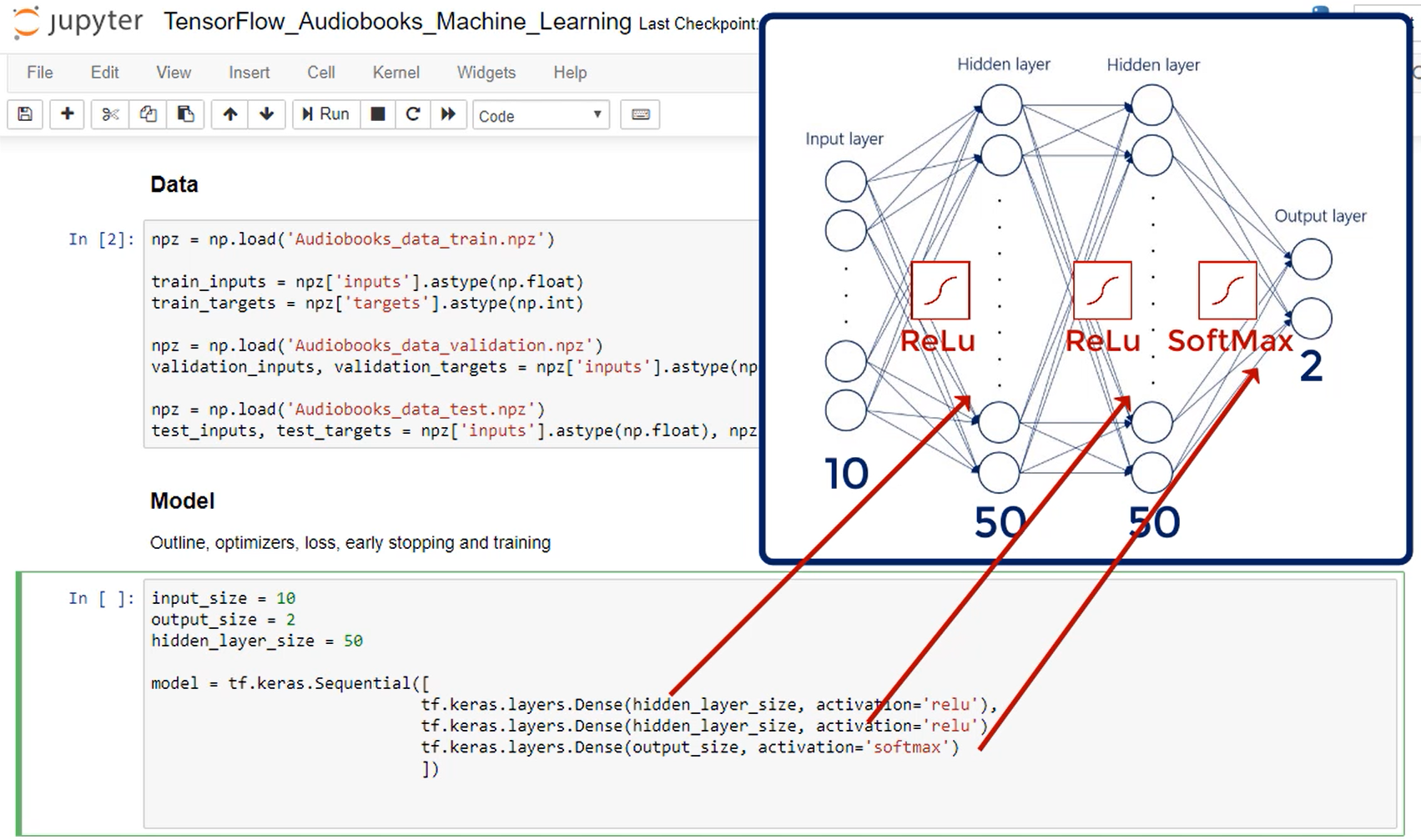

There are 784 inputs. So that's our input layer.

We have 10 outputs nodes one for each digit.

We will work with 2 hidden layers consisting of 50 nodes each.

As you may recall the width and the depth of the net are hyper parameters. I don't know the optimal width and depth for this problem but I surely know that what I've chosen right now is suboptimal.